Exercise 2.7: Huffman Application for Binary Two-Tuples

The application of the Huffman algorithm in its original form assumes a symbol set size M>2 and is therefore useless for data compression of binary sources.

However, if one combines several consecutive binary characters of the source into a new symbol, one can usefully apply Huffman data compression to the new symbol set.

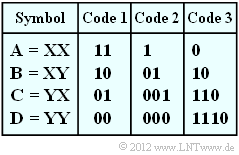

In this task we start from the source symbol set { X, Y } ⇒ M=2 and form two-tuples according to the table on the right with the new symbol set { A, B, C, D } ⇒ (M′=M2=4).

For example, the binary source symbol sequence XYXXYXXXYY thus becomes the quaternary sequence BACAD.

Furthermore, three codes are given in the table, some of which have been created by the Huffman algorithm. The binary output sequences then result for our example as follows:

- Code 1: 1011011100,

- Code 2: 0110011000,

- Code 3: 10011001110.

Again for understanding:

- From the original symbol set { X, Y } a quaternary set with symbol set { A, B, C, D } is obtained by forming two-tuples.

- The sequence length N is thereby halved to N′=N/2 .

- Huffman coding again results in a binary sequence whose symbol set is designated {0, 1} for better differentiation.

- The application of Huffman coding makes sense exactly when the length L of the initial sequence is smaller than N (on statistical average).

This task is intended to clarify which of the given binary codes make sense under which boundary conditions.

- Let the binary message source { X, Y } be memoryless and be described solely by the symbol probability pX .

- The second probability is then always pY=1−pX.

Hints:

- The exercise belongs to the chapter Entropy Coding according to Huffman.

- In particular, reference is made to the page Application of Huffman coding to k-tuples.

- The average code word length per two-tuple is LM′=pA⋅LA+ ... +pD⋅LD. With respect to a source symbol, LM=LM′/2.

- A comparable task with ternary input symbols is treated in Exercise 2.7Z .

- The idea for this task arose during a lecture by Prof. Robert Fischer from the University of Ulm at the Technical University of Munich on the topic of

"Der goldene Schnitt in der Nachrichtentechnik" ⇒ "The golden ratio in communications technology".

Questions

Solution

- LM=[pA⋅LA+pB⋅LB+pC⋅LC+pD⋅LD]/2=[LA+LB+LC+LD]/8.

Taking into account the given assignments, we obtain for

- Code 1: LM=1.000 bit/sourcesymbol_,

- Code 2: LM=1.125 bit/sourcesymbol_,

- Code 3: LM=1.250 bit/sourcesymbol_.

In the course of the task it will become apparent that the first two codes are quite possible as a result of the Huffman algorithm (of course only with suitable symbol probabilities). Code 3 is also prefix-free, but never optimal in terms of average code word length.

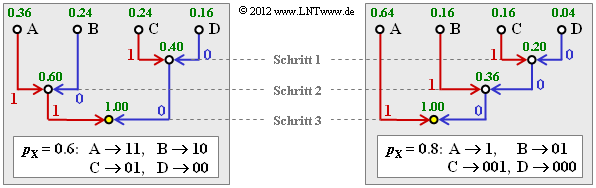

(2) The probabilities of the possible two-tuples are:

- pA=0.62=0.36,pB=0.6⋅0.4=0.24=pC,pD=0.42=0.16.

- This gives the tree diagram on the left (in the adjacent graph) and the following Huffman code:

- A → 11, B → 10, C → 01, D → 00.

- This is Code 1 ⇒ solution suggestion 1.

(3) Each two-tuple is represented by two bits. Thus

- LM=1.000 bit/sourcesymbol_.

(4) Here the probabilities of each two-tuple are:

- pA=0.82=0.64,pB=0.8⋅0.2=0.16,

- pC=pB=0.8=0.16,pD=0.22=0.04.

According to the tree diagram on the right the result is now Code 2 ⇒ solution suggestion 2:

- A → 1, B → 01, C → 001, D → 010.

(5) For Code 2 the mean two-tuple length or the average code word length is:

- LM′=0.64⋅1+0.16⋅2+(0.16+0.04)⋅3=1.56bit/two−tuples⇒LM=LM′/2=0.78bit/sourcesymbol_.

(6) From the earlier subtasks it has been found:

- For pX=0.6, Code 1 is optimal and the average code word length of this code is (independent of pX) LM=1 bit/source symbol.

- For pX=0.8 according to subtask (4), Code 2 is optimal and the average code word length is LM=0.78 bit/source symbol.

The sought maximum value pX, max will thus lie between 0.6 and 0.8 . The determining equation here is that for the limiting case pX=pX, max both codes have exactly the same average code word length LM=1 bit/source symbol, or LM′=2 bit/two-tuple.

- With the abbreviation p=pX, max the corresponding determining equation is:

- LM′(Code2)=p2⋅1+p⋅(1−p)⋅2+p⋅(1−p)⋅3+(1−p)2⋅3!=2.

- This leads to the numerical result:

- p2+p−1!=0⇒pX,max=p=√5−12≈0.618_.

- Since the basic Huffman structure does not change by swapping X and Y the lower bound is:

- pX,min=1−pX,max≈0.382_.

The representation of the tuples of two by bit sequences of different lengths (Code 2) therefore only makes sense if the symbol probabilities of X and Y differ significantly. If, on the other hand, they are between 0.382 and 0.618, Code 1 is to be applied.

Note: The division of a path of length 1 into two sections of length 0.618... and 0.382... is called the Golden ratio, which is encountered again and again in the most diverse fields.