Difference between revisions of "Aufgaben:Exercise 1.11: Syndrome Decoding"

m (Text replacement - "Category:Aufgaben zu Kanalcodierung" to "Category:Channel Coding: Exercises") |

|||

| (15 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{quiz-Header|Buchseite= | + | {{quiz-Header|Buchseite=Channel_Coding/Decoding_of_Linear_Block_Codes}} |

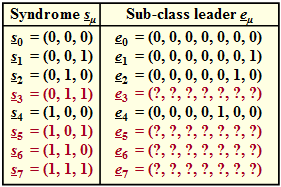

| − | [[File: | + | [[File:EN_KC_A_1_11.png|right|frame|Syndrome and coset leaders <br>$($incomplete list$)$ of $\rm HC \ (7, \, 4, \, 3)$ ]] |

| − | + | To decode a $(7, \, 4, \, 3)$ Hamming code, which is defined by its parity-check matrix | |

| − | :$${ \boldsymbol{\rm H}}_{\rm } = \begin{pmatrix} 1 &1 &0 &1 &1 &0 &0\\ 0 &1 &1 &1 &0 &1 &0\\ 1 &0 &1 &1 &0 &0 &1 \end{pmatrix} \hspace{0.05cm}$$ | + | :$${ \boldsymbol{\rm H}}_{\rm } = \begin{pmatrix} 1 &1 &0 &1 &1 &0 &0\\ 0 &1 &1 &1 &0 &1 &0\\ 1 &0 &1 &1 &0 &0 &1 \end{pmatrix} \hspace{0.05cm},$$ |

| − | + | "syndrome decoding" is also suitable. Since all Hamming codes are perfect, the result is as good as with the $($in the general case$)$ more complicated maximum likelihood decoding. | |

| − | + | For [[Channel_Coding/Decoding_of_Linear_Block_Codes#Principle_of_syndrome_decoding|"syndrome decoding"]] one proceeds as follows: | |

| − | * | + | *From the received vector $\underline{y}$ one forms the syndrome $($it holds $m = n - k)$: |

:$$\underline{s} = \underline{y} \cdot { \boldsymbol{\rm H}}^{\rm T} \in {\rm GF}(2^m) \hspace{0.05cm}.$$ | :$$\underline{s} = \underline{y} \cdot { \boldsymbol{\rm H}}^{\rm T} \in {\rm GF}(2^m) \hspace{0.05cm}.$$ | ||

| − | * | + | *In [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Symmetric_Channel_.E2.80.93_BSC|"BSC channel"]] the received word $\underline{y} = \underline{x} \, ({\rm code\:word}) + \underline{e} \, ({\rm error\:vector})$ is also an element of ${\rm GF}(2^n)$, and it holds because of $ \underline{x} · \boldsymbol {{\rm H} }^{\rm T} = |

| − | \underline{0}$ | + | \underline{0}$: |

:$$\underline{s} = \underline{e} \cdot { \boldsymbol{\rm H}}^{\rm T} \hspace{0.05cm}.$$ | :$$\underline{s} = \underline{e} \cdot { \boldsymbol{\rm H}}^{\rm T} \hspace{0.05cm}.$$ | ||

| − | * | + | *Many error patterns $\underline{e}$ lead to the same syndrome $\underline{s}$. One now groups those error patterns with the same syndrome $\underline{s}_{\mu}$ to the coset ${\it \Psi}_{\mu}$. |

| − | * | + | *The coset leader $\underline{e}_{\mu}$ is the fault vector that has the lowest Hamming weight within the class ${\it \Psi}_{\mu}$ and is accordingly the most probable. If there are several of these, one chooses one of them arbitrarily. |

| − | + | The above graph shows the incomplete list of coset leaders $\underline{e}_{\mu}$ for each $\underline{s}_{\mu}$. | |

| − | + | The most likely error vectors | |

| − | *$\underline{e}_{3}$ | + | *$\underline{e}_{3}$ with syndrome $\underline{s}_{3} = (0, 1, 1)$, |

| − | *$\underline{e}_{5}$ | + | *$\underline{e}_{5}$ with syndrome $\underline{s}_{5} = (1, 0, 1)$, |

| − | *$\underline{e}_{6}$ | + | *$\underline{e}_{6}$ with syndrome $\underline{s}_{6} = (1, 1, 0)$, |

| − | *$\underline{e}_{7}$ | + | *$\underline{e}_{7}$ with syndrome $\underline{s}_{7} = (1, 1, 1)$ |

| − | + | are to be determined in the subtasks '''(4)''' and '''(5)'''. | |

| Line 37: | Line 37: | ||

| − | + | Hints: | |

| − | + | * This exercise belongs to the chapter [[Channel_Coding/Decoding_of_Linear_Block_Codes|"Decoding of Linear Block Codes"]]. | |

| − | * | + | |

| − | * | + | * Underlying is a Hamming code with parameters $n = 7$, $k = 4$ ⇒ $m = 3$. All code words have the following format: |

:$$\underline{x} = ( x_1, x_2, x_3, x_4, x_5, x_6, x_7) = ( u_1, u_2, u_3, u_4, p_1, p_2, p_{3}) \hspace{0.05cm}.$$ | :$$\underline{x} = ( x_1, x_2, x_3, x_4, x_5, x_6, x_7) = ( u_1, u_2, u_3, u_4, p_1, p_2, p_{3}) \hspace{0.05cm}.$$ | ||

| − | * | + | *The parity-check equations are illustrated on the information sheet for [[Aufgaben:Exercise_1.11Z:_Syndrome_Decoding_again|"Exercise 1.11Z"]] which considers the same constellation as in the present exercise. |

| − | * | + | |

| + | *In the last subtask '''(6)''', use the BSC parameter $ \varepsilon = 0.1$. | ||

| Line 49: | Line 50: | ||

| − | === | + | ===Questions=== |

<quiz display=simple> | <quiz display=simple> | ||

| − | { | + | {How many received words $(N_{0})$ lead to the syndrome $\underline{s} = \underline{s}_{0} = (0, 0, 0)$? |

|type="{}"} | |type="{}"} | ||

$N_{0} \ = \ $ { 16 3% } | $N_{0} \ = \ $ { 16 3% } | ||

| − | { | + | {How many received words $(N_{7})$ lead to the syndrome $\underline{s} = \underline{s}_{7} = (1, 1, 1)$? |

|type="{}"} | |type="{}"} | ||

$N_{7} \ = \ $ { 16 3% } | $N_{7} \ = \ $ { 16 3% } | ||

| − | { | + | {What are the characteristics of all coset leaders $\underline{e}_{\mu}$? |

|type="[]"} | |type="[]"} | ||

| − | - | + | - The last three bits of $\underline{e}_{\mu}$ are identical to $\underline{s}_{\mu}$ . |

| − | - | + | - All $\underline{e}_{\mu}$ contain a single "$1$" each. |

| − | + | + | + All $\underline{e}_{\mu}$ include at most one "$1$". |

| − | { | + | {What syndrome $\underline{s}_{\mu}$ does the error vector $(1, 0, 0, 0, 0, 0, 0)$ lead to? |

|type="{}"} | |type="{}"} | ||

$\underline{e} = (1, 0, 0, 0, 0, 0, 0) \text{:} \hspace{0.4cm} {\rm Index} \ \mu \ = \ $ { 5 } | $\underline{e} = (1, 0, 0, 0, 0, 0, 0) \text{:} \hspace{0.4cm} {\rm Index} \ \mu \ = \ $ { 5 } | ||

| − | { | + | {Calculate the syndrome $\underline{s}_{\mu}$ $($Input: Index $\mu)$ for |

|type="{}"} | |type="{}"} | ||

$\ \underline{e} = (0, 1, 0, 0, 0, 0, 0) \text{:} \hspace{0.4cm} {\rm Index} \ \mu \ = \ $ { 6 } | $\ \underline{e} = (0, 1, 0, 0, 0, 0, 0) \text{:} \hspace{0.4cm} {\rm Index} \ \mu \ = \ $ { 6 } | ||

| Line 76: | Line 77: | ||

| − | { | + | {What is the block error probability for the BSC model with falsification probability $\varepsilon = 0.1$? |

|type="{}"} | |type="{}"} | ||

| − | ${\rm Pr( | + | ${\rm Pr(block\:error)} \ = \ $ { 15 3% } $\ \%$ |

</quiz> | </quiz> | ||

| − | === | + | ===Solution=== |

{{ML-Kopf}} | {{ML-Kopf}} | ||

| − | '''(1)''' | + | '''(1)''' There are a total of $2^7 = 128$ different code words $\underline{x}$ and according to the BSC model also $2^7$ different received words $y$ and as many error vectors $\underline{e}$. |

| − | * | + | *With $m = 3$ parity bits, there are $2^3 = 8$ distinct values for the syndrome, |

:$$\underline{s} \hspace{0.05cm} \in \hspace{0.05cm} \{ \underline{s}_0, \underline{s}_1,\hspace{0.05cm} \text{...} \hspace{0.05cm} , \underline{s}_7\} = \{ \underline{s}_{\mu} \}\hspace{0.05cm}, \hspace{0.2cm} \mu = 0, \hspace{0.05cm} \text{...} \hspace{0.05cm} , 7 \hspace{0.05cm},$$ | :$$\underline{s} \hspace{0.05cm} \in \hspace{0.05cm} \{ \underline{s}_0, \underline{s}_1,\hspace{0.05cm} \text{...} \hspace{0.05cm} , \underline{s}_7\} = \{ \underline{s}_{\mu} \}\hspace{0.05cm}, \hspace{0.2cm} \mu = 0, \hspace{0.05cm} \text{...} \hspace{0.05cm} , 7 \hspace{0.05cm},$$ | ||

| − | : | + | :and just as many "cosets". |

| − | * | + | *Since in the Hamming code, which is perfect, all error vectors belong to one of the eight cosets ${\it \Psi}_{\mu}$ and, moreover, the number of all vectors in all cosets is the same ("Why should it be different?" Is that enough proof for you?), we get: |

:$$ N_0 = \frac{2^n}{2^m} = 2^k \hspace{0.15cm} \underline{= 16} \hspace{0.05cm}.$$ | :$$ N_0 = \frac{2^n}{2^m} = 2^k \hspace{0.15cm} \underline{= 16} \hspace{0.05cm}.$$ | ||

| − | * | + | *The cosets ${\it \Psi}_{0}$ include for example - see sample solution to [[Aufgaben:Exercise_1.11Z:_Syndrome_Decoding_again|"Exercise 1.11Z"]] - the vectors |

:$$\underline{e} = (1, 1, 0, 0, 0, 0, 1),$$ | :$$\underline{e} = (1, 1, 0, 0, 0, 0, 1),$$ | ||

| − | :$$\underline{e} = (1, 1, | + | :$$\underline{e} = (1, 1, 1, 1, 1, 1, 1).$$ |

| − | '''(2)''' | + | '''(2)''' According to the comments of the last partial result, the following applies equally: $N_{7} \ \underline{= 16}$. |

| − | '''(3)''' | + | '''(3)''' Correct is <u>answer 3</u>: |

| − | * | + | *The coset leader $\underline{e}_{\mu}$ is the error vector $\underline{e}$ with the lowest [[Channel_Coding/Objective_of_Channel_Coding#Important_definitions_for_block_coding|"Hamming weight"]] $w_{\rm H}(\underline{e})$ that leads to the syndrome $\underline{s}_{\mu}$. |

| − | * | + | |

| − | :* | + | *The (7, 4, 3) Hamming code considered here is perfect. That is, all eight coset leaders therefore contain. |

| − | :* | + | :*either no "one" $(\underline{e}_{0}$ ⇒ no correction is required$)$, or |

| + | :*exactly a single "one" $(\underline{e}_{1}, \hspace{0.05cm} \text{...} \hspace{0.05cm} , \underline{e}_{7}$ ⇒ an information or parity bit must be corrected$)$. | ||

| − | '''(4)''' | + | '''(4)''' It holds $\underline{s} = \underline{e} · \boldsymbol{\rm H}^{\rm T}$: |

:$$\underline{s} = \begin{pmatrix} 1 &0 &0 &0 &0 &0 &0 \end{pmatrix} \cdot \begin{pmatrix} 1 &0 &1\\ 1 &1 &0\\ 0 &1 &1\\ 1 &1 &1\\ 1 &0 &0\\ 0 &1 &0\\ 0 &0 &1 \end{pmatrix} = \begin{pmatrix} 1 &0 &1 \end{pmatrix} = \underline{s}_5 \hspace{0.3cm} \Rightarrow\hspace{0.3cm} \hspace{0.15cm} \underline{ \mu= 5} \hspace{0.05cm}.$$ | :$$\underline{s} = \begin{pmatrix} 1 &0 &0 &0 &0 &0 &0 \end{pmatrix} \cdot \begin{pmatrix} 1 &0 &1\\ 1 &1 &0\\ 0 &1 &1\\ 1 &1 &1\\ 1 &0 &0\\ 0 &1 &0\\ 0 &0 &1 \end{pmatrix} = \begin{pmatrix} 1 &0 &1 \end{pmatrix} = \underline{s}_5 \hspace{0.3cm} \Rightarrow\hspace{0.3cm} \hspace{0.15cm} \underline{ \mu= 5} \hspace{0.05cm}.$$ | ||

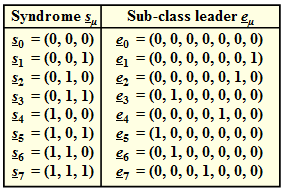

| − | [[File: | + | [[File:EN_KC_A_1_11d_v2.png|right|frame|All coset leaders of the $\rm HC (7, \, 4, \, 3)$ ]] |

| − | '''(5)''' | + | '''(5)''' A comparison with the solution to the last subtask shows that $(0, 1, 0, 0, 0, 0, 0) \cdot \boldsymbol{\rm H}^{\rm T}$ as a syndrome gives the second row of the transposed matrix: |

:$$\begin{pmatrix} 0 &1 &0 &0 &0 \hspace{0.2cm}\text{... }\end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 1 &1 &0 \end{pmatrix} = \underline{s}_6$$ | :$$\begin{pmatrix} 0 &1 &0 &0 &0 \hspace{0.2cm}\text{... }\end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 1 &1 &0 \end{pmatrix} = \underline{s}_6$$ | ||

| − | :$$\Rightarrow\hspace{0.45cm} \underline{ \mu= 6} \hspace{0.05cm}, \hspace{0. | + | :$$\Rightarrow\hspace{0.45cm} \underline{ \mu= 6} \hspace{0.05cm}, \hspace{0.5cm} \underline{e}_6 = \begin{pmatrix} 0 &1 &0 &0 &0 &0 &0 \end{pmatrix}\hspace{0.05cm}.$$ |

:$$\begin{pmatrix} 0 &0 &1 &0 &0 \hspace{0.2cm}\text{... }\end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 0 &1 &1 \end{pmatrix} = \underline{s}_3$$ | :$$\begin{pmatrix} 0 &0 &1 &0 &0 \hspace{0.2cm}\text{... }\end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 0 &1 &1 \end{pmatrix} = \underline{s}_3$$ | ||

| − | :$$\Rightarrow\hspace{0.45cm} \underline{ \mu= 3} \hspace{0.05cm}, \hspace{0. | + | :$$\Rightarrow\hspace{0.45cm} \underline{ \mu= 3} \hspace{0.05cm}, \hspace{0.5cm} \underline{e}_3 = \begin{pmatrix} 0 &0 &1 &0 &0 &0 &0 \end{pmatrix}\hspace{0.05cm}.$$ |

:$$\begin{pmatrix} 0 &0 &0 &1 &0 \hspace{0.2cm}\text{... } \end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 1 &1 &1 \end{pmatrix} = \underline{s}_7$$ | :$$\begin{pmatrix} 0 &0 &0 &1 &0 \hspace{0.2cm}\text{... } \end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 1 &1 &1 \end{pmatrix} = \underline{s}_7$$ | ||

| − | :$$\Rightarrow\hspace{0.45cm} \underline{ \mu= 7} \hspace{0.05cm}, \hspace{0. | + | :$$\Rightarrow\hspace{0.45cm} \underline{ \mu= 7} \hspace{0.05cm}, \hspace{0.5cm} \underline{e}_7 = \begin{pmatrix} 0 &0 &0 &1 &0 &0 &0 \end{pmatrix}\hspace{0.05cm}.$$ |

| − | + | The adjacent graph summarizes again the results of the subtasks '''(4)''' and '''(5)'''. | |

| − | '''(6)''' | + | '''(6)''' For the $(7, \, 4, \, 3)$ Hamming code under consideration, the correct information word is decided if at most one bit within the code word is falsified during transmission. It follows: |

| − | :$${ \rm Pr( | + | :$${ \rm Pr(block\:errors)} \hspace{-0.15cm}\ = \ \hspace{-0.15cm} { \rm Pr(\ge 2\hspace{0.15cm}bit\hspace{0.15cm}errors\hspace{0.15cm})} =1 - { \rm Pr(0\hspace{0.15cm}bit\hspace{0.15cm}errors)} - { \rm Pr(1\hspace{0.15cm}bit\hspace{0.15cm}errors)} =1 - 0.9^7 - 7 \cdot 0.1 \cdot 0.9^7 \hspace{0.15cm} \underline{ = 0.15} \hspace{0.05cm}.$$ |

| − | * | + | *Uncoded transmission of a block with $n = k = 4$ bits would result in the same BSC channel: |

| − | :$${ \rm Pr( | + | :$${ \rm Pr(block\:errors)}= 1 - 0.9^4 \approx 0.344 \hspace{0.05cm}.$$ |

| − | * | + | *The comparison is not entirely fair, however, since with $n = 4$ a smaller falsification probability $\varepsilon$ would have to be applied than with $n = 7$ $($smaller symbol rate ⇒ smaller bandwidth ⇒ smaller noise power$)$. |

{{ML-Fuß}} | {{ML-Fuß}} | ||

| − | [[Category:Channel Coding: Exercises|^1.5 | + | [[Category:Channel Coding: Exercises|^1.5 Linear Block Code Decoding^]] |

Latest revision as of 13:03, 21 April 2023

To decode a $(7, \, 4, \, 3)$ Hamming code, which is defined by its parity-check matrix

- $${ \boldsymbol{\rm H}}_{\rm } = \begin{pmatrix} 1 &1 &0 &1 &1 &0 &0\\ 0 &1 &1 &1 &0 &1 &0\\ 1 &0 &1 &1 &0 &0 &1 \end{pmatrix} \hspace{0.05cm},$$

"syndrome decoding" is also suitable. Since all Hamming codes are perfect, the result is as good as with the $($in the general case$)$ more complicated maximum likelihood decoding.

For "syndrome decoding" one proceeds as follows:

- From the received vector $\underline{y}$ one forms the syndrome $($it holds $m = n - k)$:

- $$\underline{s} = \underline{y} \cdot { \boldsymbol{\rm H}}^{\rm T} \in {\rm GF}(2^m) \hspace{0.05cm}.$$

- In "BSC channel" the received word $\underline{y} = \underline{x} \, ({\rm code\:word}) + \underline{e} \, ({\rm error\:vector})$ is also an element of ${\rm GF}(2^n)$, and it holds because of $ \underline{x} · \boldsymbol {{\rm H} }^{\rm T} = \underline{0}$:

- $$\underline{s} = \underline{e} \cdot { \boldsymbol{\rm H}}^{\rm T} \hspace{0.05cm}.$$

- Many error patterns $\underline{e}$ lead to the same syndrome $\underline{s}$. One now groups those error patterns with the same syndrome $\underline{s}_{\mu}$ to the coset ${\it \Psi}_{\mu}$.

- The coset leader $\underline{e}_{\mu}$ is the fault vector that has the lowest Hamming weight within the class ${\it \Psi}_{\mu}$ and is accordingly the most probable. If there are several of these, one chooses one of them arbitrarily.

The above graph shows the incomplete list of coset leaders $\underline{e}_{\mu}$ for each $\underline{s}_{\mu}$.

The most likely error vectors

- $\underline{e}_{3}$ with syndrome $\underline{s}_{3} = (0, 1, 1)$,

- $\underline{e}_{5}$ with syndrome $\underline{s}_{5} = (1, 0, 1)$,

- $\underline{e}_{6}$ with syndrome $\underline{s}_{6} = (1, 1, 0)$,

- $\underline{e}_{7}$ with syndrome $\underline{s}_{7} = (1, 1, 1)$

are to be determined in the subtasks (4) and (5).

Hints:

- This exercise belongs to the chapter "Decoding of Linear Block Codes".

- Underlying is a Hamming code with parameters $n = 7$, $k = 4$ ⇒ $m = 3$. All code words have the following format:

- $$\underline{x} = ( x_1, x_2, x_3, x_4, x_5, x_6, x_7) = ( u_1, u_2, u_3, u_4, p_1, p_2, p_{3}) \hspace{0.05cm}.$$

- The parity-check equations are illustrated on the information sheet for "Exercise 1.11Z" which considers the same constellation as in the present exercise.

- In the last subtask (6), use the BSC parameter $ \varepsilon = 0.1$.

Questions

Solution

- With $m = 3$ parity bits, there are $2^3 = 8$ distinct values for the syndrome,

- $$\underline{s} \hspace{0.05cm} \in \hspace{0.05cm} \{ \underline{s}_0, \underline{s}_1,\hspace{0.05cm} \text{...} \hspace{0.05cm} , \underline{s}_7\} = \{ \underline{s}_{\mu} \}\hspace{0.05cm}, \hspace{0.2cm} \mu = 0, \hspace{0.05cm} \text{...} \hspace{0.05cm} , 7 \hspace{0.05cm},$$

- and just as many "cosets".

- Since in the Hamming code, which is perfect, all error vectors belong to one of the eight cosets ${\it \Psi}_{\mu}$ and, moreover, the number of all vectors in all cosets is the same ("Why should it be different?" Is that enough proof for you?), we get:

- $$ N_0 = \frac{2^n}{2^m} = 2^k \hspace{0.15cm} \underline{= 16} \hspace{0.05cm}.$$

- The cosets ${\it \Psi}_{0}$ include for example - see sample solution to "Exercise 1.11Z" - the vectors

- $$\underline{e} = (1, 1, 0, 0, 0, 0, 1),$$

- $$\underline{e} = (1, 1, 1, 1, 1, 1, 1).$$

(2) According to the comments of the last partial result, the following applies equally: $N_{7} \ \underline{= 16}$.

(3) Correct is answer 3:

- The coset leader $\underline{e}_{\mu}$ is the error vector $\underline{e}$ with the lowest "Hamming weight" $w_{\rm H}(\underline{e})$ that leads to the syndrome $\underline{s}_{\mu}$.

- The (7, 4, 3) Hamming code considered here is perfect. That is, all eight coset leaders therefore contain.

- either no "one" $(\underline{e}_{0}$ ⇒ no correction is required$)$, or

- exactly a single "one" $(\underline{e}_{1}, \hspace{0.05cm} \text{...} \hspace{0.05cm} , \underline{e}_{7}$ ⇒ an information or parity bit must be corrected$)$.

(4) It holds $\underline{s} = \underline{e} · \boldsymbol{\rm H}^{\rm T}$:

- $$\underline{s} = \begin{pmatrix} 1 &0 &0 &0 &0 &0 &0 \end{pmatrix} \cdot \begin{pmatrix} 1 &0 &1\\ 1 &1 &0\\ 0 &1 &1\\ 1 &1 &1\\ 1 &0 &0\\ 0 &1 &0\\ 0 &0 &1 \end{pmatrix} = \begin{pmatrix} 1 &0 &1 \end{pmatrix} = \underline{s}_5 \hspace{0.3cm} \Rightarrow\hspace{0.3cm} \hspace{0.15cm} \underline{ \mu= 5} \hspace{0.05cm}.$$

(5) A comparison with the solution to the last subtask shows that $(0, 1, 0, 0, 0, 0, 0) \cdot \boldsymbol{\rm H}^{\rm T}$ as a syndrome gives the second row of the transposed matrix:

- $$\begin{pmatrix} 0 &1 &0 &0 &0 \hspace{0.2cm}\text{... }\end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 1 &1 &0 \end{pmatrix} = \underline{s}_6$$

- $$\Rightarrow\hspace{0.45cm} \underline{ \mu= 6} \hspace{0.05cm}, \hspace{0.5cm} \underline{e}_6 = \begin{pmatrix} 0 &1 &0 &0 &0 &0 &0 \end{pmatrix}\hspace{0.05cm}.$$

- $$\begin{pmatrix} 0 &0 &1 &0 &0 \hspace{0.2cm}\text{... }\end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 0 &1 &1 \end{pmatrix} = \underline{s}_3$$

- $$\Rightarrow\hspace{0.45cm} \underline{ \mu= 3} \hspace{0.05cm}, \hspace{0.5cm} \underline{e}_3 = \begin{pmatrix} 0 &0 &1 &0 &0 &0 &0 \end{pmatrix}\hspace{0.05cm}.$$

- $$\begin{pmatrix} 0 &0 &0 &1 &0 \hspace{0.2cm}\text{... } \end{pmatrix} \cdot { \boldsymbol{\rm H}}^{\rm T} = \begin{pmatrix} 1 &1 &1 \end{pmatrix} = \underline{s}_7$$

- $$\Rightarrow\hspace{0.45cm} \underline{ \mu= 7} \hspace{0.05cm}, \hspace{0.5cm} \underline{e}_7 = \begin{pmatrix} 0 &0 &0 &1 &0 &0 &0 \end{pmatrix}\hspace{0.05cm}.$$

The adjacent graph summarizes again the results of the subtasks (4) and (5).

(6) For the $(7, \, 4, \, 3)$ Hamming code under consideration, the correct information word is decided if at most one bit within the code word is falsified during transmission. It follows:

- $${ \rm Pr(block\:errors)} \hspace{-0.15cm}\ = \ \hspace{-0.15cm} { \rm Pr(\ge 2\hspace{0.15cm}bit\hspace{0.15cm}errors\hspace{0.15cm})} =1 - { \rm Pr(0\hspace{0.15cm}bit\hspace{0.15cm}errors)} - { \rm Pr(1\hspace{0.15cm}bit\hspace{0.15cm}errors)} =1 - 0.9^7 - 7 \cdot 0.1 \cdot 0.9^7 \hspace{0.15cm} \underline{ = 0.15} \hspace{0.05cm}.$$

- Uncoded transmission of a block with $n = k = 4$ bits would result in the same BSC channel:

- $${ \rm Pr(block\:errors)}= 1 - 0.9^4 \approx 0.344 \hspace{0.05cm}.$$

- The comparison is not entirely fair, however, since with $n = 4$ a smaller falsification probability $\varepsilon$ would have to be applied than with $n = 7$ $($smaller symbol rate ⇒ smaller bandwidth ⇒ smaller noise power$)$.