
Calculation as ensemble average or time average

The probabilities and the relative frequencies provide extensive information about a discrete random variable. A more compact (reduced) description is given by the so-called moments $m_k$, where $k$ is a natural number.

$\text{Two alternative ways of calculation:}$

Assuming ergodicity, as is implied here, there are two different ways to calculate the $k$-th order moment:

- ensemble averaging, i.e. forming the expected value ⇒ averaging over all possible values $\{ z_\mu\}$ with the index $\mu = 1 , \hspace{0.1cm}\text{ ...} \hspace{0.1cm} , M$:

- $$m_k = {\rm E} \big[z^k \big] = \sum_{\mu = 1}^{M}p_\mu \cdot z_\mu^k \hspace{2cm} \rm with \hspace{0.1cm} {\rm E\big[\text{ ...} \big]\hspace{-0.1cm}:} \hspace{0.3cm} \rm expected\hspace{0.1cm}value ;$$

- the time averaging over the random sequence $\langle z_ν\rangle$ with the index $ν = 1 , \hspace{0.1cm}\text{ ...} \hspace{0.1cm} , N$:

- $$m_k=\overline{z_\nu^k}=\hspace{0.01cm}\lim_{N\to\infty}\frac{1}{N}\sum_{\nu=\rm 1}^{\it N}z_\nu^k\hspace{1.7cm}\rm with\hspace{0.1cm}horizontal\hspace{0.1cm}line\hspace{-0.1cm}:\hspace{0.1cm}time\hspace{0.1cm}average.$$

Note:

- Both types of calculation lead to the same result in the limit $N \to \infty$; for sufficiently large $N$ the time average is a good approximation of the ensemble average.

- For finite $N$, the resulting error is comparable to the error made when a probability is approximated by the corresponding relative frequency.
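The two calculation methods can be compared numerically. The following is a minimal sketch, not a reference implementation: the function names `ensemble_moment` and `time_moment`, the three-valued example variable, its probabilities and the seed are all assumptions chosen for illustration.

```python
import random

def ensemble_moment(values, probs, k):
    """k-th moment as ensemble average: sum over p_mu * z_mu**k."""
    return sum(p * z**k for z, p in zip(values, probs))

def time_moment(sequence, k):
    """k-th moment as time average over a finite sequence of length N."""
    return sum(z**k for z in sequence) / len(sequence)

# Hypothetical random variable with M = 3 possible values (assumed data)
values = [0.0, 1.0, 2.0]
probs = [0.5, 0.3, 0.2]

rng = random.Random(1)  # arbitrary fixed seed for reproducibility
for N in (10, 1000, 100000):
    # Draw a random sequence <z_nu> of length N according to the probabilities
    seq = rng.choices(values, weights=probs, k=N)
    print(N, time_moment(seq, 2), ensemble_moment(values, probs, 2))
```

For small $N$ the time average typically deviates noticeably from the ensemble average, and the deviation tends to shrink as $N$ grows.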

Linear mean - DC component

$\text{Definition:}$ With $k = 1$ we obtain from the general equation for moments the linear mean:

- $$m_1 =\sum_{\mu=1}^{M}p_\mu\cdot z_\mu =\lim_{N\to\infty}\frac{1}{N}\sum_{\nu=1}^{N}z_\nu.$$

- The left part of this equation describes the ensemble averaging (over all possible values),

- while the right part gives the determination as a time average.

- In the context of signals, this quantity is also referred to as the DC component.

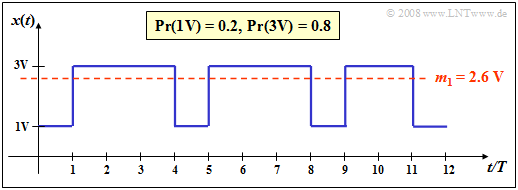

$\text{Example 1:}$ A binary signal $x(t)$ with the two possible amplitude values

- $1\hspace{0.03cm}\rm V$ $($for the symbol $\rm L)$,

- $3\hspace{0.03cm}\rm V$ $($for the symbol $\rm H)$

and the occurrence probabilities $p_{\rm L} = 0.2$ and $p_{\rm H} = 0.8$ has the linear mean (DC component)

- $$m_1 = 0.2 \cdot 1\,{\rm V}+ 0.8 \cdot 3\,{\rm V}= 2.6 \,{\rm V}. $$

This is drawn as a red line in the graph.

If we determine this parameter by time averaging over the displayed $N = 12$ signal values, we obtain a slightly smaller value:

- $$m_1\hspace{0.01cm}' = 4/12 \cdot 1\,{\rm V}+ 8/12 \cdot 3\,{\rm V}= 2.33 \,{\rm V}. $$

Here, the probabilities of occurrence $p_{\rm L} = 0.2$ and $p_{\rm H} = 0.8$ were replaced by the corresponding relative frequencies $h_{\rm L} = 4/12$ and $h_{\rm H} = 8/12$, respectively. The relative error due to the insufficient sequence length $N$ is greater than $10\%$ in this example.
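The numbers of Example 1 can be reproduced with a few lines of Python. This is only an illustrative sketch; the dictionary layout and variable names are assumptions, while the amplitudes, probabilities and counts are taken from the example.

```python
# Values from Example 1 (amplitudes in volts)
amplitudes = {"L": 1.0, "H": 3.0}
probs = {"L": 0.2, "H": 0.8}
counts = {"L": 4, "H": 8}  # occurrences among the N = 12 displayed values

N = sum(counts.values())

# Ensemble average: weight each amplitude with its probability
m1_ensemble = sum(probs[s] * amplitudes[s] for s in amplitudes)

# Time average: weight each amplitude with its relative frequency h = count / N
m1_time = sum(counts[s] / N * amplitudes[s] for s in amplitudes)

# Relative error of the time average with respect to the ensemble average
rel_error = abs(m1_time - m1_ensemble) / m1_ensemble

print(f"m1 (ensemble) = {m1_ensemble:.2f} V")
print(f"m1 (time, N={N}) = {m1_time:.2f} V")
print(f"relative error = {rel_error:.1%}")
```

The relative error comes out at roughly $10.3\%$, consistent with the statement that it exceeds $10\%$.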

Note about our (admittedly somewhat unusual) nomenclature:

We denote binary symbols here as in circuit theory with $\rm L$ (Low) and $\rm H$ (High) to avoid confusion.

- In coding theory, it is useful to map $\{ \text{L, H}\}$ to $\{0, 1\}$ to take advantage of the possibilities of modulo algebra.

- In contrast, to describe modulation with bipolar (antipodal) signals, one better chooses the mapping $\{ \text{L, H}\}$ ⇔ $ \{-1, +1\}$.

Quadratic Mean – Variance – Dispersion

$\text{Definitions:}$

- Analogous to the linear mean, $k = 2$ yields the quadratic mean (the mean square value):

- $$m_2 =\sum_{\mu=\rm 1}^{\it M}p_\mu\cdot z_\mu^2 =\lim_{N\to\infty}\frac{\rm 1}{\it N}\sum_{\nu=\rm 1}^{\it N}z_\nu^2.$$

- Together with the DC component $m_1$, the variance $σ^2$ can be determined from this as a further parameter (Steiner's Theorem):

- $$\sigma^2=m_2-m_1^2.$$

- In statistics, the dispersion $σ$ is the square root of the variance; this quantity is also called the standard deviation:

- $$\sigma=\sqrt{m_2-m_1^2}.$$
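The relation $\sigma^2=m_2-m_1^2$ can be made plausible in one line. The sketch below assumes the usual definition of the variance as the second central moment, $\sigma^2 = {\rm E}\big[(z-m_1)^2\big]$, which is not stated explicitly above, and uses the linearity of the expected value:

- $$\sigma^2 = {\rm E}\big[(z-m_1)^2\big] = {\rm E}\big[z^2\big] - 2\,m_1\cdot{\rm E}\big[z\big] + m_1^2 = m_2 - 2\,m_1^2 + m_1^2 = m_2 - m_1^2.$$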

Notes on units:

- For message signals, $m_2$ indicates the (average) power of a random signal, referenced to $1 \hspace{0.03cm} Ω$ resistance.

- If $z$ describes a voltage, $m_2$ accordingly has the unit ${\rm V}^2$.

- The variance $σ^2$ of a random signal corresponds physically to the AC power and the dispersion $σ$ to the rms (root mean square) value.

- These definitions are based on the reference resistance $1 \hspace{0.03cm} Ω$.

The (German) learning video Momentenberechnung bei diskreten Zufallsgrößen $\Rightarrow$ "Moment Calculation for Discrete Random Variables" illustrates the defined quantities using the example of a digital signal.

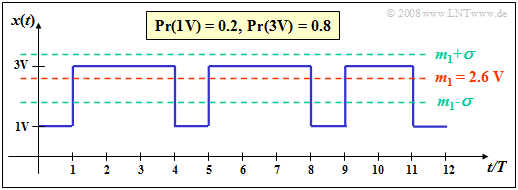

$\text{Example 2:}$ A binary signal $x(t)$ with the amplitude values

- $1\hspace{0.03cm}\rm V$ $($for the symbol $\rm L)$,

- $3\hspace{0.03cm}\rm V$ $($for the symbol $\rm H)$

and the occurrence probabilities $p_{\rm L} = 0.2$ and $p_{\rm H} = 0.8$ has the total signal power

- $$P_{\rm Gesamt} = 0.2 \cdot (1\,{\rm V})^2+ 0.8 \cdot (3\,{\rm V})^2 = 7.4 \hspace{0.05cm}{\rm V}^2,$$

if one assumes the reference resistance $R = 1 \hspace{0.05cm} Ω$.

With the DC component $m_1 = 2.6 \hspace{0.05cm}\rm V$ $($see $\text{Example 1})$ it follows for

- the AC power (variance): $P_{\rm W} = σ^2 = 7.4 \hspace{0.05cm}{\rm V}^2 - \big [2.6 \hspace{0.05cm}\rm V\big ]^2 = 0.64\hspace{0.05cm} {\rm V}^2$,

- the rms value: $s_{\rm eff} = σ = 0.8 \hspace{0.05cm} \rm V$.

- Aside: With a different reference resistance ⇒ $R \ne 1 \hspace{0.1cm} Ω$, not all of these calculations apply. For example, with $R = 50 \hspace{0.1cm} Ω$ the total power $P_{\rm Gesamt} $, the AC power $P_{\rm W}$ and the rms value $s_{\rm eff}$ take the following values:

- $$P_{\rm Gesamt} \hspace{-0.05cm}= \hspace{-0.05cm} \frac{m_2}{R} \hspace{-0.05cm}= \hspace{-0.05cm} \frac{7.4\,{\rm V}^2}{50\,{\rm \Omega} } \hspace{-0.05cm}= \hspace{-0.05cm}0.148\,{\rm W},\hspace{0.5cm} P_{\rm W} \hspace{-0.05cm} = \hspace{-0.05cm} \frac{\sigma^2}{R} \hspace{-0.05cm}= \hspace{-0.05cm}12.8\,{\rm mW} \hspace{0.05cm},\hspace{0.5cm} s_{\rm eff} \hspace{-0.05cm} = \hspace{-0.05cm}\sqrt{R \cdot P_{\rm W} } \hspace{-0.05cm}= \hspace{-0.05cm} \sigma \hspace{-0.05cm}= \hspace{-0.05cm} 0.8\,{\rm V}.$$


The same variance and the same rms value $s_{\rm eff}$ result for the amplitudes $0\hspace{0.05cm}\rm V$ $($for the symbol $\rm L)$ and $2\hspace{0.05cm}\rm V$ $($for the symbol $\rm H)$, provided that the occurrence probabilities $p_{\rm L} = 0.2$ and $p_{\rm H} = 0.8$ remain the same. Only the DC component and the total power change:

- $$m_1 = 1.6 \hspace{0.05cm}{\rm V}, \hspace{0.5cm}P_{\rm Gesamt} = P_{\rm W} + {m_1}^2 = 3.2 \hspace{0.05cm}{\rm V}^2.$$
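The values of Example 2 can be checked with a short Python sketch. The helper `moments` and all variable names are hypothetical and chosen only for this illustration; the amplitudes, probabilities and the resistance $R = 50\ Ω$ come from the example.

```python
import math

def moments(values, probs):
    """Return (m1, m2, variance, sigma) of a discrete random variable."""
    m1 = sum(p * z for z, p in zip(values, probs))
    m2 = sum(p * z**2 for z, p in zip(values, probs))
    var = m2 - m1**2  # Steiner's theorem
    return m1, m2, var, math.sqrt(var)

probs = [0.2, 0.8]  # p_L, p_H

# Amplitudes 1 V / 3 V: quantities referenced to R = 1 Ohm
m1, m2, var, sigma = moments([1.0, 3.0], probs)
print(m1, m2, var, sigma)

# Amplitudes shifted to 0 V / 2 V: variance and sigma stay the same
m1_s, m2_s, var_s, sigma_s = moments([0.0, 2.0], probs)
print(m1_s, m2_s, var_s, sigma_s)

# Different reference resistance: powers scale with 1/R, the rms value does not
R = 50.0                      # Ohm
P_total = m2 / R              # total power in W
P_ac = var / R                # AC power in W
s_eff = math.sqrt(R * P_ac)   # rms value in V
print(P_total, P_ac, s_eff)
```

The shift of both amplitudes by $1\ \rm V$ changes only $m_1$ and $m_2$, which is why the AC power and the rms value are unaffected.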

Exercises for the chapter

Exercise 2.2: Multi-Level Signals

Exercise 2.2Z: Discrete Random Variables