Difference between revisions of "Aufgaben:Exercise 1.15: Distance Spectra of HC (7, 4, 3) and HC (8, 4, 4)"

| Line 1: | Line 1: | ||

{{quiz-Header|Buchseite=Channel_Coding/Limits_for_Block_Error_Probability}} | {{quiz-Header|Buchseite=Channel_Coding/Limits_for_Block_Error_Probability}} | ||

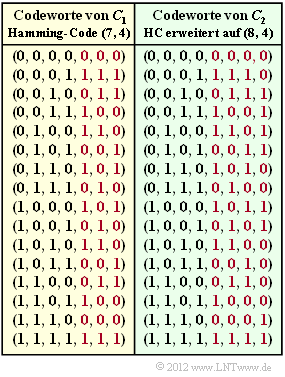

| − | [[File:P_ID2407__KC_A_1_9.png|right|frame|Code tables of the $(7, 4, 3)$ Hamming code and the $(8, 4, 4)$ extension | + | [[File:P_ID2407__KC_A_1_9.png|right|frame|Code tables of the $(7, 4, 3)$ Hamming code and the $(8, 4, 4)$ extension '''Korrektur''']] |

We consider as in the [[Aufgaben:Exercise_1.09:_Extended_Hamming_Code|"Exercise 1.9"]] | We consider as in the [[Aufgaben:Exercise_1.09:_Extended_Hamming_Code|"Exercise 1.9"]] | ||

| − | *the $(7, 4, 3)$ | + | *the $(7, 4, 3)$ Hamming code and |

| − | |||

| + | *the extended $(8, 4, 4)$ Hamming code. | ||

| − | |||

| − | *The integer $W_{i}$ specifies the number of | + | The graphic shows the corresponding code tables. In [[Aufgaben:Exercise_1.12:_Hard_Decision_vs._Soft_Decision|"Exercise 1.12"]], the syndrome decoding of these two codes has already been covered. In this exercise, the differences regarding the distance spectrum $\{W_{i}\}$ shall now be worked out. For the indexing variable $i = 0, \ \text{...} \ , n$: |

| − | *For the linear code considered here, $W_{i}$ simultaneously describes the number of | + | |

| + | *The integer $W_{i}$ specifies the number of code words $\underline{x}$ with the [[Channel_Coding/Objective_of_Channel_Coding#Important_definitions_for_block_coding|"Hamming weight"]] $\underline{w}_{\rm H}( \underline{x} ) = i$. | ||

| + | |||

| + | *For the linear code considered here, $W_{i}$ simultaneously describes the number of code words with the [[Channel_Coding/Limits_for_Block_Error_Probability#Distance_spectrum_of_a_linear_code|"Hamming distance"]] $i$ from the all-zero word. | ||

| + | |||

*Often one assigns to the number set $\{W_i\}$ a pseudo-function called [[Channel_Coding/Limits_for_Block_Error_Probability#Distance_spectrum_of_a_linear_code|"weight enumerator function"]] : | *Often one assigns to the number set $\{W_i\}$ a pseudo-function called [[Channel_Coding/Limits_for_Block_Error_Probability#Distance_spectrum_of_a_linear_code|"weight enumerator function"]] : | ||

:$$\left \{ \hspace{0.05cm} W_i \hspace{0.05cm} \right \} \hspace{0.3cm} \Leftrightarrow \hspace{0.3cm} W(X) = \sum_{i=0 }^{n} W_i \cdot X^{i} = W_0 + W_1 \cdot X + W_2 \cdot X^{2} + ... \hspace{0.05cm} + W_n \cdot X^{n}\hspace{0.05cm}.$$ | :$$\left \{ \hspace{0.05cm} W_i \hspace{0.05cm} \right \} \hspace{0.3cm} \Leftrightarrow \hspace{0.3cm} W(X) = \sum_{i=0 }^{n} W_i \cdot X^{i} = W_0 + W_1 \cdot X + W_2 \cdot X^{2} + ... \hspace{0.05cm} + W_n \cdot X^{n}\hspace{0.05cm}.$$ | ||

| − | Bhattacharyya has used the pseudo-function $W(X)$ to specify a channel-independent (upper) bound on the block error probability: | + | Bhattacharyya has used the pseudo-function $W(X)$ to specify a channel-independent (upper) bound on the block error probability: |

:$${\rm Pr(block\:error)} \le{\rm Pr(Bhattacharyya)} = W(\beta) -1 \hspace{0.05cm}.$$ | :$${\rm Pr(block\:error)} \le{\rm Pr(Bhattacharyya)} = W(\beta) -1 \hspace{0.05cm}.$$ | ||

| − | The so-called | + | The so-called "Bhattacharyya parameter" is given as follows: |

:$$\beta = \left\{ \begin{array}{c} \lambda \\ \\ 2 \cdot \sqrt{\varepsilon \cdot (1- \varepsilon)}\\ \\ {\rm e}^{- R \hspace{0.05cm}\cdot \hspace{0.05cm}E_{\rm B}/N_0} \end{array} \right.\quad \begin{array}{*{1}c} {\rm for\hspace{0.15cm} the \hspace{0.15cm}BEC\:model},\\ \\ {\rm for\hspace{0.15cm} the \hspace{0.15cm}BSC\:model}, \\ \\{\rm for\hspace{0.15cm} the \hspace{0.15cm}AWGN\:model}. \end{array}$$ | :$$\beta = \left\{ \begin{array}{c} \lambda \\ \\ 2 \cdot \sqrt{\varepsilon \cdot (1- \varepsilon)}\\ \\ {\rm e}^{- R \hspace{0.05cm}\cdot \hspace{0.05cm}E_{\rm B}/N_0} \end{array} \right.\quad \begin{array}{*{1}c} {\rm for\hspace{0.15cm} the \hspace{0.15cm}BEC\:model},\\ \\ {\rm for\hspace{0.15cm} the \hspace{0.15cm}BSC\:model}, \\ \\{\rm for\hspace{0.15cm} the \hspace{0.15cm}AWGN\:model}. \end{array}$$ | ||

| − | It should be noted that the Bhattacharyya | + | It should be noted that the "Bhattacharyya Bound" is generally very pessimistic. The actual block error probability is often significantly lower. |

| Line 33: | Line 36: | ||

Hints: | Hints: | ||

*This exercise refers to the chapter [[Channel_Coding/Limits_for_Block_Error_Probability|"Bounds for Block Error Probability"]]. | *This exercise refers to the chapter [[Channel_Coding/Limits_for_Block_Error_Probability|"Bounds for Block Error Probability"]]. | ||

| − | *A similar topic is covered in [[Aufgaben:Exercise_1.14:_Bhattacharyya_Bound_for_BEC|"Exercise 1.14"]] and in [[Aufgaben:Exercise_1.16:_Block_Error_Probability_Bounds_for_AWGN| | + | |

| + | *A similar topic is covered in [[Aufgaben:Exercise_1.14:_Bhattacharyya_Bound_for_BEC|"Exercise 1.14"]] and in [[Aufgaben:Exercise_1.16:_Block_Error_Probability_Bounds_for_AWGN|"Exercise 1.16"]]. | ||

| + | |||

* The channels to be considered are: | * The channels to be considered are: | ||

| − | ** the [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Symmetric_Channel_.E2.80.93_BSC|"BSC model"]] ( | + | ** the [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Symmetric_Channel_.E2.80.93_BSC|"BSC model"]] ("Binary Symmetric Channel"), |

| − | ** the [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Erasure_Channel_.E2.80.93_BEC|"BEC model"]] ( | + | ** the [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Erasure_Channel_.E2.80.93_BEC|"BEC model"]] ("Binary Erasure Channel"), |

** the [[Channel_Coding/Channel_Models_and_Decision_Structures#AWGN_channel_at_Binary_Input|"AWGN channel model"]]. | ** the [[Channel_Coding/Channel_Models_and_Decision_Structures#AWGN_channel_at_Binary_Input|"AWGN channel model"]]. | ||

| Line 53: | Line 58: | ||

$W_{7} \ = \ ${ 1 } | $W_{7} \ = \ ${ 1 } | ||

| − | {What is the Bhattacharyya | + | {What is the Bhattacharyya Bound for the $(7, 4, 3)$ Hamming code and the BSC model with $\varepsilon = 0.01$? |

|type="{}"} | |type="{}"} | ||

${\rm Pr(Bhattacharyya)} \ = \ $ { 6.6 3% } $\ \%$ | ${\rm Pr(Bhattacharyya)} \ = \ $ { 6.6 3% } $\ \%$ | ||

| − | {Given the same channel, what is the bound of the extended $(8, 4, 4)$ Hamming code? | + | {Given the same channel, what is the bound of the extended $(8, 4, 4)$ Hamming code? |

|type="{}"} | |type="{}"} | ||

${\rm Pr(Bhattacharyya)} \ = \ ${ 2.2 3% } $\ \%$ | ${\rm Pr(Bhattacharyya)} \ = \ ${ 2.2 3% } $\ \%$ | ||

| − | {With which BEC parameter $\lambda$ do you get the exact same barriers? | + | {With which BEC parameter $\lambda$ do you get the exact same barriers? |

|type="{}"} | |type="{}"} | ||

$\lambda \ = \ $ { 0.199 3% } | $\lambda \ = \ $ { 0.199 3% } | ||

| − | {We continue to consider the extended $(8, 4, 4)$ Hamming code, but now the AWGN model. | + | {We continue to consider the extended $(8, 4, 4)$ Hamming code, but now the AWGN model. |

| − | <br>Determine $E_{\rm B} / N_{0}$ (in dB) such that the same Bhattacharyya | + | <br>Determine $E_{\rm B} / N_{0}$ (in dB) such that the same Bhattacharyya Bound results. |

|type="{}"} | |type="{}"} | ||

$10 · \lg {E_{\rm B}/N_0} \ = \ $ { 5 3% }$ \ \rm dB$ | $10 · \lg {E_{\rm B}/N_0} \ = \ $ { 5 3% }$ \ \rm dB$ | ||

| − | {Now determine the AWGN parameter $(10 · \lg {E_{\rm B}/N_0})$ for the $(7, 4, 3)$ Hamming code. | + | {Now determine the AWGN parameter $(10 · \lg {E_{\rm B}/N_0})$ for the $(7, 4, 3)$ Hamming code. |

|type="{}"} | |type="{}"} | ||

$10 · \lg {E_{\rm B}/N_0} \ = \ $ { 4.417 3% }$ \ \rm dB$ | $10 · \lg {E_{\rm B}/N_0} \ = \ $ { 4.417 3% }$ \ \rm dB$ | ||

Revision as of 17:45, 4 August 2022

We consider as in the "Exercise 1.9"

- the $(7, 4, 3)$ Hamming code and

- the extended $(8, 4, 4)$ Hamming code.

The graphic shows the corresponding code tables. In "Exercise 1.12", the syndrome decoding of these two codes has already been covered. In this exercise, the differences regarding the distance spectrum $\{W_{i}\}$ shall now be worked out. For the indexing variable $i = 0, \ \text{...} \ , n$:

- The integer $W_{i}$ specifies the number of code words $\underline{x}$ with the "Hamming weight" $\underline{w}_{\rm H}( \underline{x} ) = i$.

- For the linear code considered here, $W_{i}$ simultaneously describes the number of code words with the "Hamming distance" $i$ from the all-zero word.

- Often one assigns to the number set $\{W_i\}$ a pseudo-function called "weight enumerator function" :

- $$\left \{ \hspace{0.05cm} W_i \hspace{0.05cm} \right \} \hspace{0.3cm} \Leftrightarrow \hspace{0.3cm} W(X) = \sum_{i=0 }^{n} W_i \cdot X^{i} = W_0 + W_1 \cdot X + W_2 \cdot X^{2} + ... \hspace{0.05cm} + W_n \cdot X^{n}\hspace{0.05cm}.$$

Bhattacharyya has used the pseudo-function $W(X)$ to specify a channel-independent (upper) bound on the block error probability:

- $${\rm Pr(block\:error)} \le{\rm Pr(Bhattacharyya)} = W(\beta) -1 \hspace{0.05cm}.$$

The so-called "Bhattacharyya parameter" is given as follows:

- $$\beta = \left\{ \begin{array}{c} \lambda \\ \\ 2 \cdot \sqrt{\varepsilon \cdot (1- \varepsilon)}\\ \\ {\rm e}^{- R \hspace{0.05cm}\cdot \hspace{0.05cm}E_{\rm B}/N_0} \end{array} \right.\quad \begin{array}{*{1}c} {\rm for\hspace{0.15cm} the \hspace{0.15cm}BEC\:model},\\ \\ {\rm for\hspace{0.15cm} the \hspace{0.15cm}BSC\:model}, \\ \\{\rm for\hspace{0.15cm} the \hspace{0.15cm}AWGN\:model}. \end{array}$$

It should be noted that the "Bhattacharyya Bound" is generally very pessimistic. The actual block error probability is often significantly lower.

Hints:

- This exercise refers to the chapter "Bounds for Block Error Probability".

- A similar topic is covered in "Exercise 1.14" and in "Exercise 1.16".

- The channels to be considered are:

- the "BSC model" ("Binary Symmetric Channel"),

- the "BEC model" ("Binary Erasure Channel"),

- the "AWGN channel model".

Questions

Solution

(1) The code table of the $(7, 4, 3)$ Hamming code shows:

- $W_{0} \ \underline{ = \ 1}$ codeword does not contain a one (the zero word),

- $W_{3} \ \underline{ = \ 7}$ codewords contain three ones,

- $W_{4} \ \underline{ = \ 7}$ codewords contain four ones,

- $W_{7} \ \underline{ = \ 1}$ codeword consists of only ones.

Because the code is linear, $W_{i}$ also specifies the number of codewords that differ from the zero word in $i$ bits.
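The counts in subtask (1) can be checked by brute force. The sketch below assumes one particular systematic parity rule for the $(7, 4, 3)$ Hamming code. The code table in the graphic may use a different but equivalent assignment, and every $(7, 4, 3)$ Hamming code has the same distance spectrum. The $(8, 4, 4)$ code is formed by appending an overall parity bit. The function names are illustrative only.

```python
from itertools import product

def hamming_743_codewords():
    # Assumed parity rule (illustrative): p1 = u1+u2+u3, p2 = u2+u3+u4, p3 = u1+u2+u4 (mod 2).
    # The columns of the resulting parity-check matrix are the 7 distinct nonzero 3-bit vectors,
    # so this is a valid (7, 4, 3) Hamming code.
    words = []
    for u1, u2, u3, u4 in product((0, 1), repeat=4):
        p1 = (u1 + u2 + u3) % 2
        p2 = (u2 + u3 + u4) % 2
        p3 = (u1 + u2 + u4) % 2
        words.append((u1, u2, u3, u4, p1, p2, p3))
    return words

def extend(word):
    # Extended code: append an overall parity bit so that every codeword has even weight.
    return word + (sum(word) % 2,)

def distance_spectrum(codewords, n):
    # W[i] = number of codewords with Hamming weight i (= Hamming distance i from the all-zero word).
    W = [0] * (n + 1)
    for x in codewords:
        W[sum(x)] += 1
    return W

c7 = hamming_743_codewords()
c8 = [extend(x) for x in c7]
print(distance_spectrum(c7, 7))
print(distance_spectrum(c8, 8))
```

Extending the code turns each weight-3 codeword into a weight-4 codeword and the all-ones word into weight 8. This is why the extended code ends up with $14$ codewords of weight $4$.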

(2) The Bhattacharyya bound reads:

- $${\rm Pr(block\:error)} \le{\rm Pr(Bhattacharyya)} = W(\beta) -1 \hspace{0.05cm}.$$

- The weight enumerator function follows from subtask (1):

- $$W(X) = 1+ 7 \cdot X^{3} + 7 \cdot X^{4} + X^{7}\hspace{0.3cm} \Rightarrow \hspace{0.3cm} {\rm Pr(Bhattacharyya)} = 7 \cdot \beta^{3} + 7 \cdot \beta^{4} + \beta^{7} \hspace{0.05cm}.$$

- For the BSC model with $\varepsilon = 0.01$, the Bhattacharyya parameter is:

- $$\beta = 2 \cdot \sqrt{\varepsilon \cdot (1- \varepsilon)} = 2 \cdot \sqrt{0.01 \cdot 0.99} = 0.199\hspace{0.3cm} \Rightarrow \hspace{0.3cm} {\rm Pr(Bhattacharyya)} = 7 \cdot 0.199^{3} + 7 \cdot 0.199^{4} + 0.199^{7} \hspace{0.15cm} \underline{ \approx 6.6\%} \hspace{0.05cm}.$$

- A comparison with the actual block error probability as calculated in "Exercise 1.12",

- $${\rm Pr(block\:error)} \approx 21 \cdot \varepsilon^2 = 2.1 \cdot 10^{-3} \hspace{0.05cm},$$

- shows that Bhattacharyya provides only a rough bound. In the present case, this bound is more than a factor of $30$ higher than the actual value.
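Subtask (2) can be checked numerically with a minimal sketch. It evaluates $W(\beta) - 1$ for the spectrum from subtask (1). For comparison, it also computes the block error probability of syndrome decoding, assuming the perfect $(7, 4, 3)$ code corrects exactly all single errors. Under that assumption, a block error occurs whenever at least two of the seven bits are flipped, and the leading term of the expression is $\binom{7}{2}\varepsilon^2 = 21\,\varepsilon^2$. The helper names are hypothetical.

```python
import math

def weight_enumerator(W, X):
    # W(X) = sum_i W_i * X**i, with the spectrum W given as a list indexed by the weight i
    return sum(w * X**i for i, w in enumerate(W))

def bhattacharyya_bound(W, beta):
    # Pr(block error) <= W(beta) - 1; the "-1" removes the W_0 = 1 term of the all-zero word
    return weight_enumerator(W, beta) - 1

W_743 = [1, 0, 0, 7, 7, 0, 0, 1]      # spectrum from subtask (1)
eps = 0.01                            # BSC crossover probability
beta_bsc = 2 * math.sqrt(eps * (1 - eps))

bound = bhattacharyya_bound(W_743, beta_bsc)
# Assumed decoder behaviour: all single errors corrected, every pattern with >= 2 errors fails
exact = 1 - (1 - eps)**7 - 7 * eps * (1 - eps)**6
approx = 21 * eps**2

print(f"beta = {beta_bsc:.4f}, bound = {bound:.4f}")
print(f"exact = {exact:.3e}, 21*eps^2 = {approx:.1e}, ratio = {bound / exact:.1f}")
```

With $\beta^2 = 4 \cdot 0.01 \cdot 0.99 = 0.0396$, the bound comes out at about $0.066$ and the exact value at about $2.03 \cdot 10^{-3}$. That is a ratio of a little over $30$.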

(3) From the code table of the $(8, 4, 4)$ code, the following results are obtained:

- $$W(X) = 1+ 14 \cdot X^{4} + X^{8}\hspace{0.3cm} \Rightarrow \hspace{0.3cm} {\rm Pr(Bhattacharyya)} = 14 \cdot \beta^{4} + \beta^{8} = 14 \cdot 0.199^{4} + 0.199^{8} \hspace{0.15cm} \underline{ \approx 2.2\%} \hspace{0.05cm}.$$
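For the extended code, the same evaluation only needs the spectrum $W(X) = 1 + 14 \cdot X^{4} + X^{8}$ in place of the one from subtask (1). The short sketch below writes both spectra as Python lists indexed by the weight $i$. This representation is just an illustrative choice.

```python
import math

def bhattacharyya_bound(W, beta):
    # W(beta) - 1 for a spectrum W given as a list indexed by the weight i
    return sum(w * beta**i for i, w in enumerate(W)) - 1

eps = 0.01
beta = 2 * math.sqrt(eps * (1 - eps))   # BSC Bhattacharyya parameter

W_743 = [1, 0, 0, 7, 7, 0, 0, 1]        # (7, 4, 3) Hamming code
W_844 = [1, 0, 0, 0, 14, 0, 0, 0, 1]    # extended (8, 4, 4) Hamming code

b7 = bhattacharyya_bound(W_743, beta)
b8 = bhattacharyya_bound(W_844, beta)
print(f"(7, 4, 3): {100 * b7:.1f} %   (8, 4, 4): {100 * b8:.1f} %")
```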

(4) The equation for the Bhattacharyya parameter is:

- $$\beta = \left\{ \begin{array}{c} \lambda \\ \\ 2 \cdot \sqrt{ \varepsilon \cdot (1- \varepsilon)}\\ \\ {\rm e}^{- R \cdot E_{\rm B}/N_0} \end{array} \right.\quad \begin{array}{*{1}c} {\rm for\hspace{0.15cm} the \hspace{0.15cm}BEC\:model},\\ \\ {\rm for\hspace{0.15cm} the \hspace{0.15cm}BSC\:model}, \\ \\{\rm for\hspace{0.15cm} the \hspace{0.15cm}AWGN\:model}. \end{array}$$

With the BEC model, exactly the same bound is obtained when the erasure probability is $\lambda = \beta \ \underline{= 0.199}$.
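The bound depends on the channel only through $\beta$. Matching the BEC to the BSC therefore just means setting $\lambda$ equal to the BSC value of $\beta$. The sketch below encodes the BEC and BSC parameter formulas from the exercise as small helper functions, whose names are hypothetical. It then checks that both channels give the same bound for the $(8, 4, 4)$ code.

```python
import math

def beta_bsc(eps):
    # Bhattacharyya parameter of the BSC with crossover probability eps
    return 2 * math.sqrt(eps * (1 - eps))

def beta_bec(lam):
    # Bhattacharyya parameter of the BEC with erasure probability lam
    return lam

def bhattacharyya_bound(W, beta):
    # W(beta) - 1 for a spectrum W given as a list indexed by the weight i
    return sum(w * beta**i for i, w in enumerate(W)) - 1

W_844 = [1, 0, 0, 0, 14, 0, 0, 0, 1]

lam = beta_bsc(0.01)   # erasure probability chosen equal to the BSC's beta
bound_bsc = bhattacharyya_bound(W_844, beta_bsc(0.01))
bound_bec = bhattacharyya_bound(W_844, beta_bec(lam))
print(f"lambda = {lam:.3f}, BSC bound = {bound_bsc:.4f}, BEC bound = {bound_bec:.4f}")
```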

(5) According to the above equation, the following must hold:

- $$\beta = {\rm e}^{- R \hspace{0.05cm}\cdot \hspace{0.05cm} E_{\rm B}/N_0} = 0.199 \hspace{0.3cm} \Rightarrow \hspace{0.3cm} R \cdot E_{\rm B}/N_0 = {\rm ln} \hspace{0.1cm} (1/0.199) \approx 1.614 \hspace{0.05cm}.$$

- The code rate of the extended $(8, 4, 4)$ Hamming code is $R = 0.5$:

- $$E_{\rm B}/N_0 = 1.614/0.5 \approx 3.23 \hspace{0.3cm} \Rightarrow \hspace{0.3cm} 10 \cdot {\rm lg} \hspace{0.1cm} E_{\rm B}/N_0 \hspace{0.15cm} \underline{\approx 5.1\,{\rm dB}} \hspace{0.05cm}.$$

(6) Using the code rate $R = 4/7$ of the $(7, 4, 3)$ Hamming code, we obtain:

- $$E_{\rm B}/N_0 = 7/4 \cdot 1.614 \approx 2.825 \hspace{0.3cm} \Rightarrow \hspace{0.3cm} 10 \cdot {\rm lg} \hspace{0.1cm} E_{\rm B}/N_0 \hspace{0.15cm} \underline{\approx 4.51\,{\rm dB}} \hspace{0.05cm}.$$
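For the AWGN model, the parameter equation can be inverted as $E_{\rm B}/N_0 = -{\rm ln}(\beta)/R$. The logarithm is the natural one, because $\beta$ is defined via ${\rm e}^{x}$. The result is then converted to dB. A minimal sketch, with a hypothetical helper `ebn0_db_for_beta`:

```python
import math

def ebn0_db_for_beta(beta, R):
    # AWGN: beta = exp(-R * Eb/N0)  ->  Eb/N0 = -ln(beta) / R  (linear ratio)
    ebn0_linear = -math.log(beta) / R
    # convert the linear ratio to decibels
    return 10 * math.log10(ebn0_linear)

beta = 2 * math.sqrt(0.01 * 0.99)        # target beta: the BSC value for eps = 0.01

db_844 = ebn0_db_for_beta(beta, 1 / 2)   # extended (8, 4, 4) code, R = 1/2
db_743 = ebn0_db_for_beta(beta, 4 / 7)   # (7, 4, 3) code, R = 4/7
print(f"(8, 4, 4): {db_844:.2f} dB")
print(f"(7, 4, 3): {db_743:.2f} dB")
```

This gives roughly $5.09$ dB and $4.51$ dB. The gap of about $0.58$ dB equals $10 \cdot {\rm lg}(8/7)$, the ratio of the two code rates $(4/7)/(1/2)$.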