Difference between revisions of "Aufgaben:Exercise 1.5: SPC (5, 4) and BEC Model"

| (4 intermediate revisions by 2 users not shown) | |||

| Line 7: | Line 7: | ||

For this exercise it is required: | For this exercise it is required: | ||

| − | *The [[Channel_Coding/Examples_of_Binary_Block_Codes#Single_Parity_Check_Codes| | + | *The [[Channel_Coding/Examples_of_Binary_Block_Codes#Single_Parity_Check_Codes|single parity-check code]] with parameters $k = 4$ and $n = 5$ ⇒ $\rm SPC \ (5, 4)$ adds to the information bits $u_{1}$, ... , $u_{4}$ a check bit $p$ so that an even number of ones occurs in each code word $\underline{x}$ : |

:$$x_1 \oplus x_2 \oplus x_3 \oplus x_4 \oplus x_5 = 0 \hspace{0.05cm},$$ | :$$x_1 \oplus x_2 \oplus x_3 \oplus x_4 \oplus x_5 = 0 \hspace{0.05cm},$$ | ||

:$$ u_1 \oplus u_2 \oplus u_3 \oplus u_4 \oplus p = 0 \hspace{0.05cm}.$$ | :$$ u_1 \oplus u_2 \oplus u_3 \oplus u_4 \oplus p = 0 \hspace{0.05cm}.$$ | ||

| − | *The [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Erasure_Channel_.E2.80.93_BEC|Binary Erasure Channel]] (BEC) - with binary input values $x_{i} \in \{0, \ 1\}$ and ternary output $y_{i} \in \{0, 1, \rm E\}$ leads with probability $\lambda = 0.1$ to an | + | *The [[Channel_Coding/Channel_Models_and_Decision_Structures#Binary_Erasure_Channel_.E2.80.93_BEC|Binary Erasure Channel]] $\rm (BEC)$ - with binary input values $x_{i} \in \{0, \ 1\}$ and ternary output $y_{i} \in \{0,\ 1,\ \rm E\}$ leads with probability $\lambda = 0.1$ to an erasure $\rm E$. |

| − | *Furthermore, ${\rm Pr}(y_{i} = x_{i}) = 1 - \lambda = 0.9$ holds. A real transmission error is excluded: | + | |

| + | *Furthermore, ${\rm Pr}(y_{i} = x_{i}) = 1 - \lambda = 0.9$ holds. A real transmission error is excluded: | ||

:$$ {\rm Pr} \big[(x_i = 0)\cap (y_i = 1)\big] = {\rm Pr} \big[(x_i = 1)\cap (y_i = 0)\big] = 0\hspace{0.05cm}.$$ | :$$ {\rm Pr} \big[(x_i = 0)\cap (y_i = 1)\big] = {\rm Pr} \big[(x_i = 1)\cap (y_i = 0)\big] = 0\hspace{0.05cm}.$$ | ||

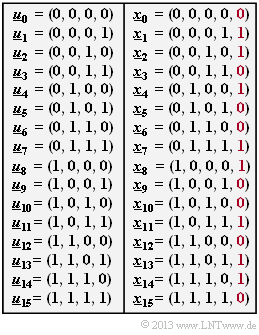

| − | The relationship between the information word $\underline{u}$ and the | + | The relationship between the information word $\underline{u}$ and the code word $\underline{x}$ is given by the table. From the receive word $\underline{y}$ the vector $\underline{v}$ of information bits at the sink is formed by maximum likelihood decision, which should match the information word $\underline{u}$ as much as possible. |

The following nomenclature applies: | The following nomenclature applies: | ||

| − | :$$\underline{u} \ \in \ \{\underline{u}_0, \underline{u}_1,\hspace{0.15cm} \text{...} \hspace{0.2cm}, \underline{u}_{15}\} \hspace{0.05cm},$$ | + | :$$\underline{u} \ \in \ \{\underline{u}_0,\ \underline{u}_1,\hspace{0.15cm} \text{...} \hspace{0.2cm},\ \underline{u}_{15}\} \hspace{0.05cm},$$ |

| − | :$$ \underline{v} \ \in \ \{\underline{v}_0, \underline{v}_1, \hspace{0.15cm}\text{...} \hspace{0.2cm}, \underline{v}_{15}, \underline{\rm E}\} \hspace{0.05cm}.$$ | + | :$$ \underline{v} \ \in \ \{\underline{v}_0,\ \underline{v}_1, \hspace{0.15cm}\text{...} \hspace{0.2cm},\ \underline{v}_{15},\ \underline{\rm E}\} \hspace{0.05cm}.$$ |

| − | The result $\underline{v} =\underline{\rm E} = {\rm (E, E, E, E)}$ indicates that decoding of the code word is not possible due to too many erasures. | + | The result $\underline{v} =\underline{\rm E} = {\rm (E,\ E,\ E,\ E)}$ indicates that decoding of the code word is not possible due to too many erasures. |

| Line 29: | Line 30: | ||

Hints: | Hints: | ||

| − | *This exercise belongs to the chapter [[Channel_Coding/Examples_of_Binary_Block_Codes|Examples of binary block codes]]. | + | *This exercise belongs to the chapter [[Channel_Coding/Examples_of_Binary_Block_Codes|"Examples of binary block codes"]]. |

| − | *Reference is also made to the chapter [[Channel_Coding/Channel_Models_and_Decision_Structures|Channel Models and Decision Structures]]. | + | |

| − | *The check bits of $u_{0}$, $u_{4}$ and $u_{13}$ are to be determined in the subtask '''(1)''''. | + | *Reference is also made to the chapter [[Channel_Coding/Channel_Models_and_Decision_Structures|"Channel Models and Decision Structures"]]. |

| + | |||

| + | *The check bits of $u_{0}$, $u_{4}$ and $u_{13}$ are to be determined in the subtask '''(1)''''. | ||

| Line 39: | Line 42: | ||

<quiz display=simple> | <quiz display=simple> | ||

| − | {What is the check bit $p$ for each of the following information words $\underline{u}$ ? | + | {What is the check bit $p$ for each of the following information words $\underline{u}$ ? |

|type="{}"} | |type="{}"} | ||

$\underline{u} = \underline{u_{0}}\text{:}\hspace{0.4cm}p \ = \ $ { 0. } | $\underline{u} = \underline{u_{0}}\text{:}\hspace{0.4cm}p \ = \ $ { 0. } | ||

| Line 45: | Line 48: | ||

$\underline{u} = \underline{u_{13}}\text{:}\hspace{0.25cm}p \ = \ $ { 1 } | $\underline{u} = \underline{u_{13}}\text{:}\hspace{0.25cm}p \ = \ $ { 1 } | ||

| − | { Let $ \underline{y} = (0, 0, 0, {\rm E})$. What information word was sent? | + | { Let $ \underline{y} = (0,\ 0,\ 0,\ 0,\ {\rm E})$. What information word was sent? |

|type="()"} | |type="()"} | ||

+ $ \underline{u}_{0}$, | + $ \underline{u}_{0}$, | ||

| Line 52: | Line 55: | ||

| − | { | + | {Let $ \underline{y} = (0,\ {\rm E},\ 0,\ 0,\ 1)$. What information word was sent? |

|type="()"} | |type="()"} | ||

- $\underline{u}_{0}$, | - $\underline{u}_{0}$, | ||

| Line 58: | Line 61: | ||

- $\underline{u}_{13}$. | - $\underline{u}_{13}$. | ||

| − | {With what probability does $\underline{y}$ match the | + | {With what probability does $\underline{y}$ match the code word $\underline{x}$ ? |

|type="{}"} | |type="{}"} | ||

$\ {\rm Pr} (\underline{y} = \underline{x}) \ = \ ${ 59.1 3% } $\ \%$ | $\ {\rm Pr} (\underline{y} = \underline{x}) \ = \ ${ 59.1 3% } $\ \%$ | ||

| Line 68: | Line 71: | ||

{What is the probability of a detected error? | {What is the probability of a detected error? | ||

|type="{}"} | |type="{}"} | ||

| − | $\ {\rm Pr} (\underline{\upsilon} = {\rm {\underline{ E}}) \ = \ $ { 8.1 3% } $\ \%$ | + | $\ {\rm Pr} (\underline{\upsilon} = {\rm {\underline{ E}}}) \ = \ $ { 8.1 3% } $\ \%$ |

| Line 76: | Line 79: | ||

{{ML-Kopf}} | {{ML-Kopf}} | ||

| − | '''(1)''' | + | '''(1)''' The check bit $p$ is determined in the "single parity-check code" in such a way, that the sum of all ones in the code word $\underline{x} = (u_{1},\ u_{2}, ... ,\ u_{4},\ p)$ is even. <br>For example, one gets: |

:$$\underline{u}_0 \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (0, 0, 0, 0) \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \underline{x}_0 = (0, 0, 0, 0, 0)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 0} \hspace{0.05cm},$$ | :$$\underline{u}_0 \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (0, 0, 0, 0) \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \underline{x}_0 = (0, 0, 0, 0, 0)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 0} \hspace{0.05cm},$$ | ||

:$$ \underline{u}_4 \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (0, 1, 0, 0) \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \underline{x}_4 = (0, 1, 0, 0, 1)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 1} \hspace{0.05cm},$$ | :$$ \underline{u}_4 \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (0, 1, 0, 0) \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \underline{x}_4 = (0, 1, 0, 0, 1)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 1} \hspace{0.05cm},$$ | ||

| Line 82: | Line 85: | ||

| − | '''(2)''' | + | '''(2)''' Correct is <u>answer 1</u>: |

| − | *Because of the fact that the number of ones must be even, the erased check bit $p = 0$. Thus, $\underline{u}_{0}$ was sent. | + | *Because of the fact that the number of ones must be even, the erased check bit $p = 0$. Thus, $\underline{u}_{0}$ was sent. |

| − | '''(3)''' Correct is <u>answer 2</u>: | + | '''(3)''' Correct is <u>answer 2</u>: |

| − | *According to the same considerations as in the last subtask, we arrive | + | *According to the same considerations as in the last subtask, we arrive with $\underline{y} = (0,\ |

| − | {\rm E}, 0, 0, 1)$ to the result | + | {\rm E},\ 0,\ 0,\ 1)$ to the result |

:$$\underline{x} = \underline{x}_{4} = (0, 1, 0, 0, 1) ⇒ \underline{u}_{4} = (0, 1, 0, 0).$$ | :$$\underline{x} = \underline{x}_{4} = (0, 1, 0, 0, 1) ⇒ \underline{u}_{4} = (0, 1, 0, 0).$$ | ||

| − | '''(4)''' The event $\underline{y} = \underline{x}$ occurs only if none of the $n = 5$ code bits is | + | '''(4)''' The event $\underline{y} = \underline{x}$ occurs only if none of the $n = 5$ code bits is erased by the BEC channel: |

:$${\rm Pr}(\underline{y} = \underline{x}) = (1 - \lambda)^5 = 0.9^5 \hspace{0.15cm} \underline{= 59.1\%} \hspace{0.05cm}.$$ | :$${\rm Pr}(\underline{y} = \underline{x}) = (1 - \lambda)^5 = 0.9^5 \hspace{0.15cm} \underline{= 59.1\%} \hspace{0.05cm}.$$ | ||

| − | '''(5)''' The event $v = u$ then occurs, | + | '''(5)''' The event $v = u$ then occurs, |

*if all code bits are transmitted correctly ⇒ ${\rm Pr}(\underline{y} = \underline{x})$, | *if all code bits are transmitted correctly ⇒ ${\rm Pr}(\underline{y} = \underline{x})$, | ||

| − | *but also when only one | + | *but also when only one code bit is erased. According to the binomial distribution, there are five possibilities for this: |

| − | :$${\rm Pr}(\underline{v} = \underline{u}) \hspace{-0.1cm}\ = \ \hspace{-0. 1cm} {\rm Pr}(\underline{y} = \underline{x}) + 5 \cdot (1 - \lambda)^4 \cdot \lambda = 0.591 + 5 \cdot 0.656^4 \cdot 0.1 \hspace{0.15cm} \underline{= 91.9 \%} \hspace{0.05cm}.$$ | + | :$${\rm Pr}(\underline{v} = \underline{u}) \hspace{-0.1cm}\ = \ \hspace{-0.1cm} {\rm Pr}(\underline{y} = \underline{x}) + 5 \cdot (1 - \lambda)^4 \cdot \lambda = 0.591 + 5 \cdot 0.656^4 \cdot 0.1 \hspace{0.15cm} \underline{= 91.9 \%} \hspace{0.05cm}.$$ |

| − | '''(6)''' Due to the BEC model, the | + | '''(6)''' Due to the BEC model, the falsification of a code word $\underline{x}$ is per se impossible, since none of the bits can be falsified from $0 → 1$ or from $1 → 0$. Rather: |

:$${\rm Pr}(\underline{v} = \underline{u}) + {\rm Pr}(\underline{v} = {\rm\underline{ E}}) = 1 \hspace{0.3cm} \Rightarrow \hspace{0.3cm} {\rm Pr}(\underline{v} = {\rm\underline{ E}}) = 1 - {\rm Pr}(\underline{v} = \underline{u}) \hspace{0.15cm} \underline{= 8.1\%} \hspace{0.05cm}.$$ | :$${\rm Pr}(\underline{v} = \underline{u}) + {\rm Pr}(\underline{v} = {\rm\underline{ E}}) = 1 \hspace{0.3cm} \Rightarrow \hspace{0.3cm} {\rm Pr}(\underline{v} = {\rm\underline{ E}}) = 1 - {\rm Pr}(\underline{v} = \underline{u}) \hspace{0.15cm} \underline{= 8.1\%} \hspace{0.05cm}.$$ | ||

Latest revision as of 17:26, 23 January 2023

For this exercise it is required:

- The single parity-check code with parameters $k = 4$ and $n = 5$ ⇒ $\rm SPC \ (5, 4)$ adds to the information bits $u_{1}$, ... , $u_{4}$ a check bit $p$ so that an even number of ones occurs in each code word $\underline{x}$ :

- $$x_1 \oplus x_2 \oplus x_3 \oplus x_4 \oplus x_5 = 0 \hspace{0.05cm},$$

- $$ u_1 \oplus u_2 \oplus u_3 \oplus u_4 \oplus p = 0 \hspace{0.05cm}.$$

- The Binary Erasure Channel $\rm (BEC)$ - with binary input values $x_{i} \in \{0, \ 1\}$ and ternary output $y_{i} \in \{0,\ 1,\ \rm E\}$ leads with probability $\lambda = 0.1$ to an erasure $\rm E$.

- Furthermore, ${\rm Pr}(y_{i} = x_{i}) = 1 - \lambda = 0.9$ holds. A real transmission error is excluded:

- $$ {\rm Pr} \big[(x_i = 0)\cap (y_i = 1)\big] = {\rm Pr} \big[(x_i = 1)\cap (y_i = 0)\big] = 0\hspace{0.05cm}.$$

The relationship between the information word $\underline{u}$ and the code word $\underline{x}$ is given by the table. From the receive word $\underline{y}$ the vector $\underline{v}$ of information bits at the sink is formed by maximum likelihood decision, which should match the information word $\underline{u}$ as much as possible.

The following nomenclature applies:

- $$\underline{u} \ \in \ \{\underline{u}_0,\ \underline{u}_1,\hspace{0.15cm} \text{...} \hspace{0.2cm},\ \underline{u}_{15}\} \hspace{0.05cm},$$

- $$ \underline{v} \ \in \ \{\underline{v}_0,\ \underline{v}_1, \hspace{0.15cm}\text{...} \hspace{0.2cm},\ \underline{v}_{15},\ \underline{\rm E}\} \hspace{0.05cm}.$$

The result $\underline{v} =\underline{\rm E} = {\rm (E,\ E,\ E,\ E)}$ indicates that decoding of the code word is not possible due to too many erasures.

Hints:

- This exercise belongs to the chapter "Examples of binary block codes".

- Reference is also made to the chapter "Channel Models and Decision Structures".

- The check bits of $u_{0}$, $u_{4}$ and $u_{13}$ are to be determined in the subtask (1)'.

Questions

Solution

(1) The check bit $p$ is determined in the "single parity-check code" in such a way, that the sum of all ones in the code word $\underline{x} = (u_{1},\ u_{2}, ... ,\ u_{4},\ p)$ is even.

For example, one gets:

- $$\underline{u}_0 \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (0, 0, 0, 0) \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \underline{x}_0 = (0, 0, 0, 0, 0)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 0} \hspace{0.05cm},$$

- $$ \underline{u}_4 \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (0, 1, 0, 0) \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \underline{x}_4 = (0, 1, 0, 0, 1)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 1} \hspace{0.05cm},$$

- $$\underline{u}_{13} \hspace{-0.1cm}\ = \ \hspace{-0.1cm} (1, 1, 0, 1) \hspace{0.15cm} \Rightarrow \hspace{0.15cm} \underline{x}_{13} = (1, 1, 0, 1, 1)\hspace{0.3cm} \Rightarrow \hspace{0.3cm} p \hspace{0.15cm} \underline{= 1} \hspace{0.05cm}.$$

(2) Correct is answer 1:

- Because of the fact that the number of ones must be even, the erased check bit $p = 0$. Thus, $\underline{u}_{0}$ was sent.

(3) Correct is answer 2:

- According to the same considerations as in the last subtask, we arrive with $\underline{y} = (0,\ {\rm E},\ 0,\ 0,\ 1)$ to the result

- $$\underline{x} = \underline{x}_{4} = (0, 1, 0, 0, 1) ⇒ \underline{u}_{4} = (0, 1, 0, 0).$$

(4) The event $\underline{y} = \underline{x}$ occurs only if none of the $n = 5$ code bits is erased by the BEC channel:

- $${\rm Pr}(\underline{y} = \underline{x}) = (1 - \lambda)^5 = 0.9^5 \hspace{0.15cm} \underline{= 59.1\%} \hspace{0.05cm}.$$

(5) The event $v = u$ then occurs,

- if all code bits are transmitted correctly ⇒ ${\rm Pr}(\underline{y} = \underline{x})$,

- but also when only one code bit is erased. According to the binomial distribution, there are five possibilities for this:

- $${\rm Pr}(\underline{v} = \underline{u}) \hspace{-0.1cm}\ = \ \hspace{-0.1cm} {\rm Pr}(\underline{y} = \underline{x}) + 5 \cdot (1 - \lambda)^4 \cdot \lambda = 0.591 + 5 \cdot 0.656^4 \cdot 0.1 \hspace{0.15cm} \underline{= 91.9 \%} \hspace{0.05cm}.$$

(6) Due to the BEC model, the falsification of a code word $\underline{x}$ is per se impossible, since none of the bits can be falsified from $0 → 1$ or from $1 → 0$. Rather:

- $${\rm Pr}(\underline{v} = \underline{u}) + {\rm Pr}(\underline{v} = {\rm\underline{ E}}) = 1 \hspace{0.3cm} \Rightarrow \hspace{0.3cm} {\rm Pr}(\underline{v} = {\rm\underline{ E}}) = 1 - {\rm Pr}(\underline{v} = \underline{u}) \hspace{0.15cm} \underline{= 8.1\%} \hspace{0.05cm}.$$