Difference between revisions of "Aufgaben:Exercise 2.6Z: Again on the Huffman Code"

m (Nabil verschob die Seite Zusatzaufgaben:2.06 Nochmals zum Huffman–Code nach 2.06Z Nochmals zum Huffman–Code) |

|||

| (32 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{quiz-Header|Buchseite= | + | {{quiz-Header|Buchseite=Information_Theory/Entropy_Coding_According_to_Huffman |

}} | }} | ||

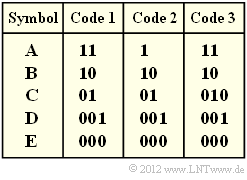

| − | [[File:P_ID2453__Inf_Z_2_6.png|right|]] | + | [[File:P_ID2453__Inf_Z_2_6.png|right|frame|Three codes to choose from ]] |

| − | + | [https://en.wikipedia.org/wiki/David_A._Huffman David Albert Huffman's] algorithm implements entropy coding with the following properties: | |

| − | + | * The resulting binary code is prefix-free and thus easily (and immediately) decodable. | |

| + | * With a memoryless source, the code leads to the smallest possible average code word length $L_{\rm M}$. | ||

| + | * However, $L_{\rm M}$ is never smaller than the source entropy $H$. | ||

| + | * These two quantities can be calculated from the $M$ symbol probabilities alone. | ||

| − | |||

| − | : | + | For this exercise, we assume a memoryless source with the symbol set size $M = 5$ and the alphabet |

| + | :$$\{ {\rm A},\ {\rm B},\ {\rm C},\ {\rm D},\ {\rm E} \}.$$ | ||

| − | + | In the above diagram, three binary codes are given. You are to decide which of these codes were (or could be) created by applying the Huffman algorithm. | |

| − | |||

| − | === | + | |

| + | |||

| + | |||

| + | |||

| + | <u>Hints:</u> | ||

| + | *The exercise belongs to the chapter [[Information_Theory/Entropiecodierung_nach_Huffman|Entropy Coding according to Huffman]]. | ||

| + | *Further information on the Huffman algorithm can also be found in the information sheet for [[Aufgaben:Exercise_2.6:_About_the_Huffman_Coding|Exercise 2.6]]. | ||

| + | *To check your results, please refer to the (German language) SWF module [[Applets:Huffman_Shannon_Fano|Coding according to Huffman and Shannon/Fano]]. | ||

| + | |||

| + | |||

| + | |||

| + | ===Questions=== | ||

<quiz display=simple> | <quiz display=simple> | ||

| − | { | + | {Which codes could have arisen according to Huffman for $p_{\rm A} = p_{\rm B} = p_{\rm C} = 0.3$ and $p_{\rm D} = p_{\rm E} = 0.05$? |

|type="[]"} | |type="[]"} | ||

| − | + Code 1, | + | + $\text{Code 1}$, |

| − | - Code 2, | + | - $\text{Code 2}$, |

| − | - Code 3. | + | - $\text{Code 3}$. |

| − | { | + | {How are the average code word length $L_{\rm M}$ and the entropy $H$ related for the given probabilities? |

| − | |type=" | + | |type="()"} |

| − | - | + | - $L_{\rm M} < H$, |

| − | - | + | - $L_{\rm M} \ge H$, |

| − | + | + | + $L_{\rm M} > H$. |

| − | { | + | {Consider $\text{Code 1}$. With what symbol probabilities would $L_{\rm M} = H$ hold? |

|type="{}"} | |type="{}"} | ||

| − | $ | + | $\ p_{\rm A} \ = \ $ { 0.25 3% } |

| − | $ | + | $\ p_{\rm B} \ = \ $ { 0.25 3% } |

| − | $ | + | $\ p_{\rm C} \ = \ $ { 0.25 3% } |

| − | $ | + | $\ p_{\rm D} \ = \ $ { 0.125 3% } |

| − | $ | + | $\ p_{\rm E} \ = \ $ { 0.125 3% } |

| − | { | + | {The probabilities calculated in subtask '''(3)''' still apply. <br>However, the average code word length is now determined for a sequence of length $N = 40$ ⇒ $L_{\rm M}\hspace{0.03cm}'$. What is possible? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + $L_{\rm M}\hspace{0.01cm}' < L_{\rm M}$, |

| − | + | + | + $L_{\rm M}\hspace{0.01cm}' = L_{\rm M}$, |

| − | + | + | + $L_{\rm M}\hspace{0.01cm}' > L_{\rm M}$. |

| − | { | + | {Which code could possibly be a Huffman code? |

|type="[]"} | |type="[]"} | ||

| − | + Code 1, | + | + $\text{Code 1}$, |

| − | - Code 2, | + | - $\text{Code 2}$, |

| − | - Code 3. | + | - $\text{Code 3}$. |

| Line 60: | Line 73: | ||

</quiz> | </quiz> | ||

| − | === | + | ===Solution=== |

{{ML-Kopf}} | {{ML-Kopf}} | ||

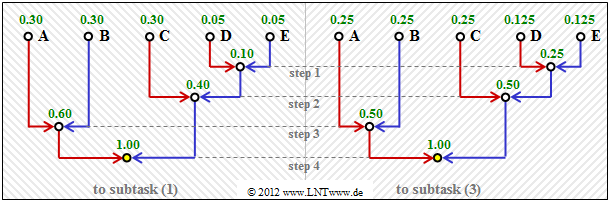

| − | + | [[File:EN_Inf_Z_2_6a_v2.png|right|frame|Huffman tree diagrams for subtasks '''(1)''' and '''(3)''']] | |

| − | + | '''(1)''' <u>Solution suggestion 1</u> is correct. | |

| − | + | *The diagram shows the construction of the Huffman code by means of a tree diagram. | |

| + | *With the assignment red → <b>1</b> and blue → <b>0</b> one obtains: <br>$\rm A$ → <b>11</b>, $\rm B$ → <b>10</b>, $\rm C$ → <b>01</b>, $\rm D$ → <b>001</b>, $\rm E$ → <b>000</b>. | ||

| + | *The left diagram applies to the probabilities according to subtask '''(1)'''. | ||

| + | *The diagram on the right belongs to subtask '''(3)''' with slightly different probabilities. | ||

| + | *However, it provides exactly the same code. | ||

| + | <br clear=all> | ||

| + | '''(2)''' <u>Proposed solution 3</u> is correct, as the following calculation also shows: | ||

| + | :$$L_{\rm M} \hspace{0.2cm} = \hspace{0.2cm} (0.3 + 0.3 + 0.3) \cdot 2 + (0.05 + 0.05) \cdot 3 = 2.1\,{\rm bit/source \:symbol}\hspace{0.05cm},$$ | ||

| + | :$$H \hspace{0.2cm} = \hspace{0.2cm} 3 \cdot 0.3 \cdot {\rm log_2}\hspace{0.15cm}(1/0.3) + 2 \cdot 0.05 \cdot {\rm log_2}\hspace{0.15cm}(1/0.05) | ||

| + | \approx 2.0\,{\rm bit/source \:symbol}\hspace{0.05cm}.$$ | ||

| − | + | *According to the source coding theorem, $L_{\rm M} \ge H$ always holds. | |

| − | + | *However, a prerequisite for $L_{\rm M} = H$ is that all symbol probabilities can be represented in the form $2^{-k} \ (k = 1, \ 2, \ 3,\ \text{ ...})$ . | |

| − | + | *This does not apply here. | |

| − | |||

| − | |||

| − | + | ||

| + | '''(3)''' $\rm A$, $\rm B$ and $\rm C$ are represented by two bits in $\text{Code 1}$ , $\rm E$ and $\rm F$ by three bits. Thus one obtains for | ||

| + | |||

| + | * the average code word length | ||

:$$L_{\rm M} = p_{\rm A}\cdot 2 + p_{\rm B}\cdot 2 + p_{\rm C}\cdot 2 + p_{\rm D}\cdot 3 + p_{\rm E}\cdot 3 | :$$L_{\rm M} = p_{\rm A}\cdot 2 + p_{\rm B}\cdot 2 + p_{\rm C}\cdot 2 + p_{\rm D}\cdot 3 + p_{\rm E}\cdot 3 | ||

\hspace{0.05cm},$$ | \hspace{0.05cm},$$ | ||

| − | + | * for the source entropy: | |

| − | :$$H = p_{\rm A}\cdot {\rm | + | :$$H = p_{\rm A}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm A}} + p_{\rm B}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm B}} + p_{\rm C}\cdot |

| − | {\rm | + | {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm C}} + p_{\rm D}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm D}} + p_{\rm E}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm E}} |

\hspace{0.05cm}.$$ | \hspace{0.05cm}.$$ | ||

| − | + | By comparing all the terms, we arrive at the result: | |

| − | :$$p_{\rm A}= p_{\rm B}= p_{\rm C}\hspace{0.15cm}\underline{= 0.25} \hspace{0.05cm}, \hspace{0.2cm}p_{\rm D}= p_{\rm E}\hspace{0.15cm}\underline{= 0.125} | + | :$$p_{\rm A}= p_{\rm B}= p_{\rm C}\hspace{0.15cm}\underline{= 0.25} \hspace{0.05cm}, \hspace{0.2cm}p_{\rm D}= p_{\rm E}\hspace{0.15cm}\underline{= 0.125}\hspace{0.3cm} |

| − | + | \Rightarrow\hspace{0.3cm} L_{\rm M} = H = 2.25\,{\rm bit/source \:symbol} \hspace{0.05cm}.$$ | |

| − | + | It can be seen: | |

| + | *With these "more favourable" probabilities, there is even a larger average code word length than with the "less favourable" ones. | ||

| + | *The equality $(L_{\rm M} = H)$ is therefore solely due to the now larger source entropy. | ||

| + | |||

| + | |||

| + | |||

| + | '''(4)''' For example, one (of many) simulations with the probabilities according to subtask '''(3)''' yields the sequence with $N = 40$ character: | ||

| + | :$$\rm EBDCCBDABEBABCCCCCBCAABECAACCBAABBBCDCAB.$$ | ||

| + | |||

| + | *This results in $L_{\rm M}\hspace{0.01cm}' = ( 34 \cdot 2 + 6 \cdot 3)/50 = 2.15$ bit/source symbol, i.e. a smaller value than for the unlimited sequence $(L_{\rm M} = 2.25$ bit/source symbol$)$. | ||

| + | *However, with a different starting value of the random generator, $(L_{\rm M}\hspace{0.03cm}' \ge L_{\rm M})$ is also possible. | ||

| + | *This means: <u>All statements</u> are correct. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | :* Code 1 | + | '''(5)''' Only <u>solution suggestion 1</u>: |

| + | * $\text{Code 1}$ is a Huffman code, as has already been shown in the previous subtasks. <br>This is not true for all symbol probabilities, but at least for the parameter sets according to subtasks '''(1)''' and '''(3)'''. | ||

| − | + | * $\text{Code 2}$ is not a Huffman code, since such a code would always have to be prefix-free. <br>However, the prefix freedom is not given here, since <b>1</b> is the beginning of the code word <b>10</b> . | |

| − | + | * $\text{Code 3}$ is also not a Huffman code, since it has an average code word length that is $p_{\rm C}$ longer than required $($see $\text{Code 1})$. It is not optimal <br>There are no symbol probabilities $p_{\rm A}$, ... , $p_{\rm E}$, that would justify coding the symbol $\rm C$ with <b>010</b> instead of <b>01</b> . | |

{{ML-Fuß}} | {{ML-Fuß}} | ||

| − | [[Category: | + | [[Category:Information Theory: Exercises|^2.3 Entropy Coding according to Huffman^]] |

Latest revision as of 16:55, 1 November 2022

David Albert Huffman's algorithm implements entropy coding with the following properties:

- The resulting binary code is prefix-free and thus easily (and immediately) decodable.

- With a memoryless source, the code leads to the smallest possible average code word length $L_{\rm M}$.

- However, $L_{\rm M}$ is never smaller than the source entropy $H$.

- These two quantities can be calculated from the $M$ symbol probabilities alone.

For this exercise, we assume a memoryless source with the symbol set size $M = 5$ and the alphabet

- $$\{ {\rm A},\ {\rm B},\ {\rm C},\ {\rm D},\ {\rm E} \}.$$

In the above diagram, three binary codes are given. You are to decide which of these codes were (or could be) created by applying the Huffman algorithm.

Hints:

- The exercise belongs to the chapter Entropy Coding according to Huffman.

- Further information on the Huffman algorithm can also be found in the information sheet for Exercise 2.6.

- To check your results, please refer to the (German language) SWF module Coding according to Huffman and Shannon/Fano.

Questions

Solution

(1) Solution suggestion 1 is correct.

- The diagram shows the construction of the Huffman code by means of a tree diagram.

- With the assignment red → 1 and blue → 0 one obtains: $\rm A$ → 11, $\rm B$ → 10, $\rm C$ → 01, $\rm D$ → 001, $\rm E$ → 000.

- The left diagram applies to the probabilities according to subtask (1).

- The diagram on the right belongs to subtask (3) with slightly different probabilities.

- However, it provides exactly the same code.
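The tree construction can also be checked numerically. The following is a minimal sketch, not the method used to draw the diagrams: the function name `huffman_lengths` and the heap-based merging are illustrative assumptions. It computes only the code word lengths, since the actual bit assignment (red → 1, blue → 0) and the choice among equally probable nodes are free.

```python
import heapq
from itertools import count


def huffman_lengths(probs):
    """Return the code word length of each symbol of a binary Huffman code.

    Hypothetical helper for illustration: it repeatedly merges the two
    least probable nodes, as in the tree diagrams.
    """
    tie = count()  # tie-breaker so the heap never has to compare the lists
    heap = [(p, next(tie), [s]) for s, p in probs.items()]
    heapq.heapify(heap)
    lengths = dict.fromkeys(probs, 0)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for s in s1 + s2:
            lengths[s] += 1  # every merge adds one bit to all symbols below it
        heapq.heappush(heap, (p1 + p2, next(tie), s1 + s2))
    return lengths


probs_1 = {"A": 0.3, "B": 0.3, "C": 0.3, "D": 0.05, "E": 0.05}       # subtask (1)
probs_3 = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.125, "E": 0.125}  # subtask (3)

print(huffman_lengths(probs_1))
print(huffman_lengths(probs_3))
```

Both parameter sets lead to two bits for $\rm A$, $\rm B$, $\rm C$ and three bits for $\rm D$, $\rm E$, which matches the code word lengths of $\text{Code 1}$.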

(2) Proposed solution 3 is correct, as the following calculation also shows:

- $$L_{\rm M} \hspace{0.2cm} = \hspace{0.2cm} (0.3 + 0.3 + 0.3) \cdot 2 + (0.05 + 0.05) \cdot 3 = 2.1\,{\rm bit/source \:symbol}\hspace{0.05cm},$$

- $$H \hspace{0.2cm} = \hspace{0.2cm} 3 \cdot 0.3 \cdot {\rm log_2}\hspace{0.15cm}(1/0.3) + 2 \cdot 0.05 \cdot {\rm log_2}\hspace{0.15cm}(1/0.05) \approx 2.0\,{\rm bit/source \:symbol}\hspace{0.05cm}.$$

- According to the source coding theorem, $L_{\rm M} \ge H$ always holds.

- However, a prerequisite for $L_{\rm M} = H$ is that all symbol probabilities can be represented in the form $2^{-k} \ (k = 1, \ 2, \ 3,\ \text{...})$.

- This does not apply here.
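The two values can be reproduced with a few lines of Python. This is only a sketch; the dictionaries `probs` and `lengths` are names chosen here, with the code word lengths taken from $\text{Code 1}$.

```python
from math import log2

probs = {"A": 0.3, "B": 0.3, "C": 0.3, "D": 0.05, "E": 0.05}
lengths = {"A": 2, "B": 2, "C": 2, "D": 3, "E": 3}  # code word lengths of Code 1

# Average code word length and source entropy, both in bit/source symbol
L_M = sum(p * lengths[s] for s, p in probs.items())
H = sum(p * log2(1 / p) for p in probs.values())

print(f"L_M = {L_M:.2f} bit/source symbol")
print(f"H   = {H:.2f} bit/source symbol")
```

The entropy comes out just below $2.0$ bit/source symbol, so $L_{\rm M} > H$ holds for these probabilities.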

(3) $\rm A$, $\rm B$ and $\rm C$ are represented by two bits in $\text{Code 1}$, $\rm D$ and $\rm E$ by three bits. Thus one obtains

- for the average code word length:

- $$L_{\rm M} = p_{\rm A}\cdot 2 + p_{\rm B}\cdot 2 + p_{\rm C}\cdot 2 + p_{\rm D}\cdot 3 + p_{\rm E}\cdot 3 \hspace{0.05cm},$$

- for the source entropy:

- $$H = p_{\rm A}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm A}} + p_{\rm B}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm B}} + p_{\rm C}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm C}} + p_{\rm D}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm D}} + p_{\rm E}\cdot {\rm log_2}\hspace{0.15cm}\frac{1}{p_{\rm E}} \hspace{0.05cm}.$$

By comparing all the terms, we arrive at the result:

- $$p_{\rm A}= p_{\rm B}= p_{\rm C}\hspace{0.15cm}\underline{= 0.25} \hspace{0.05cm}, \hspace{0.2cm}p_{\rm D}= p_{\rm E}\hspace{0.15cm}\underline{= 0.125}\hspace{0.3cm} \Rightarrow\hspace{0.3cm} L_{\rm M} = H = 2.25\,{\rm bit/source \:symbol} \hspace{0.05cm}.$$

It can be seen:

- With these "more favourable" probabilities, the average code word length is even larger than with the "less favourable" ones ($2.25$ instead of $2.1$ bit/source symbol).

- The equality $(L_{\rm M} = H)$ is therefore solely due to the now larger source entropy ($2.25$ instead of approximately $2.0$ bit/source symbol).
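The term-by-term comparison amounts to requiring $p_i \cdot l_i = p_i \cdot {\rm log_2}\,(1/p_i)$ for every symbol, i.e. $p_i = 2^{-l_i}$, where $l_i$ denotes the code word length (a symbol introduced here for illustration). A short sketch of this check:

```python
from math import log2

lengths = {"A": 2, "B": 2, "C": 2, "D": 3, "E": 3}  # code word lengths of Code 1

# L_M = H requires p_i = 2^(-l_i) for every symbol
probs = {s: 2.0 ** -l for s, l in lengths.items()}

total = sum(probs.values())  # must be 1 for a valid probability set
L_M = sum(p * lengths[s] for s, p in probs.items())
H = sum(p * log2(1 / p) for p in probs.values())

print(probs)
print(total, L_M, H)
```

The probabilities add up to one, so they form a valid parameter set, and both $L_{\rm M}$ and $H$ evaluate to $2.25$ bit/source symbol.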

(4) For example, one (of many) simulations with the probabilities according to subtask (3) yields the following sequence of $N = 40$ characters:

- $$\rm EBDCCBDABEBABCCCCCBCAABECAACCBAABBBCDCAB.$$

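- The sequence contains $34$ two-bit symbols $(\rm A$, $\rm B$, $\rm C)$ and $6$ three-bit symbols $(\rm D$, $\rm E)$. This results in $L_{\rm M}\hspace{0.01cm}' = ( 34 \cdot 2 + 6 \cdot 3)/40 = 2.15$ bit/source symbol, i.e. a smaller value than for the unlimited sequence $(L_{\rm M} = 2.25$ bit/source symbol$)$.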

- However, with a different starting value of the random generator, $(L_{\rm M}\hspace{0.03cm}' \ge L_{\rm M})$ is also possible.

- This means: All statements are correct.
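The value for the given sequence, and the spread for other random sequences, can be examined with the following sketch. The helper `mean_length` and the use of Python's `random` module are assumptions made for illustration; the random generator behind the original simulation is not known, so the simulated values will differ from it.

```python
import random

lengths = {"A": 2, "B": 2, "C": 2, "D": 3, "E": 3}  # code word lengths of Code 1


def mean_length(sequence):
    """Average number of code bits per source symbol for a finite sequence."""
    return sum(lengths[s] for s in sequence) / len(sequence)


# Sequence quoted in the sample solution
sequence = "EBDCCBDABEBABCCCCCBCAABECAACCBAABBBCDCAB"
print(len(sequence), mean_length(sequence))  # 40 2.15

# Further sequences of length N = 40 with the probabilities of subtask (3)
symbols = list(lengths)
weights = [0.25, 0.25, 0.25, 0.125, 0.125]
for seed in range(5):
    rng = random.Random(seed)
    sample = rng.choices(symbols, weights=weights, k=40)
    print(seed, mean_length(sample))
```

For a finite sequence the result always lies between $2$ and $3$ bit/source symbol and scatters around $L_{\rm M} = 2.25$ bit/source symbol, depending on how many of the symbols $\rm D$ and $\rm E$ happen to occur.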

(5) Only solution suggestion 1:

- $\text{Code 1}$ is a Huffman code, as has already been shown in the previous subtasks.

This is not true for all symbol probabilities, but at least for the parameter sets according to subtasks (1) and (3).

- $\text{Code 2}$ is not a Huffman code, since such a code would always have to be prefix-free.

However, the prefix-free property is violated here, since 1 is the beginning of the code word 10.

- $\text{Code 3}$ is also not a Huffman code, since it has an average code word length that is $p_{\rm C}$ longer than required $($see $\text{Code 1})$. It is therefore not optimal.

There are no symbol probabilities $p_{\rm A}$, ... , $p_{\rm E}$ that would justify coding the symbol $\rm C$ with 010 instead of 01.
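Both arguments can be checked mechanically. The sketch below makes two assumptions that are not stated in the text: `code_3_assumed` is taken to be $\text{Code 1}$ with $\rm C$ → 010, and `code_2_partial` contains only the two code words 1 and 10 mentioned above, because the complete tables of $\text{Code 2}$ and $\text{Code 3}$ appear only in the diagram. The Kraft sum is used as an additional test: a binary Huffman code always fills its tree completely, so the sum must equal 1.

```python
from fractions import Fraction


def is_prefix_free(code_words):
    """True if no code word is the beginning of another one."""
    words = sorted(code_words)
    # After sorting, a prefix is always directly followed by a word it starts
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))


def kraft_sum(code_words):
    """Sum of 2^(-length) over all code words, as an exact fraction."""
    return sum(Fraction(1, 2 ** len(w)) for w in code_words)


code_1 = ["11", "10", "01", "001", "000"]           # from subtask (1)
code_2_partial = ["1", "10"]                        # only the two words named in the text
code_3_assumed = ["11", "10", "010", "001", "000"]  # assumption: Code 1 with C -> 010

print(is_prefix_free(code_1), kraft_sum(code_1))
print(is_prefix_free(code_2_partial))
print(is_prefix_free(code_3_assumed), kraft_sum(code_3_assumed))
```

Under these assumptions, $\text{Code 1}$ is prefix-free with Kraft sum 1, the two words of $\text{Code 2}$ violate the prefix condition, and the assumed $\text{Code 3}$ is prefix-free but has a Kraft sum of 7/8, which shows that one code word could be shortened without losing decodability.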