Exercise 4.7: Weighted Sum and Difference

From LNTwww

| Line 77: | Line 77: | ||

===Solution=== | ===Solution=== | ||

{{ML-Kopf}} | {{ML-Kopf}} | ||

| − | '''(1)''' Since the random variables $u$ and $v$ are zero mean $(m = 0)$, the random variable $x$ is also zero mean: | + | '''(1)''' Since the random variables $u$ and $v$ are zero mean $(m = 0)$, the random variable $x$ is also zero mean: |

:$$m_x = (A +B) \cdot m \hspace{0.15cm}\underline{ =0}.$$ | :$$m_x = (A +B) \cdot m \hspace{0.15cm}\underline{ =0}.$$ | ||

*For the variance and standard deviation: | *For the variance and standard deviation: | ||

| Line 84: | Line 84: | ||

| − | '''(2)''' Since $u$ and $v$ have the same standard deviation, so does $\sigma_y =\sigma_x \hspace{0.15cm}\underline{ \approx 2.236}$. | + | '''(2)''' Since $u$ and $v$ have the same standard deviation, so does $\sigma_y =\sigma_x \hspace{0.15cm}\underline{ \approx 2.236}$. |

| − | *Because $m=0$ also $m_y = m_x \hspace{0.15cm}\underline{ =0} | + | *Because $m=0$ also $m_y = m_x \hspace{0.15cm}\underline{ =0}$. |

| − | *For mean-valued random variable $u$ and $v$ on the other hand, for $m_y = (A -B) \cdot m$ adds up to a different value than for $m_x = (A +B) \cdot m$. | + | *For mean-valued random variable $u$ and $v$ on the other hand, for $m_y = (A -B) \cdot m$ adds up to a different value than for $m_x = (A +B) \cdot m$. |

| − | '''(3)''' We assume here in the sample solution the more general case $m \ne 0$ Then for the common moment holds: | + | '''(3)''' We assume here in the sample solution the more general case $m \ne 0$. Then, for the common moment holds: |

:$$m_{xy} = {\rm E} \big[x \cdot y \big] = {\rm E} \big[(A \cdot u + B \cdot v) (A \cdot u - B \cdot v)\big] . $$ | :$$m_{xy} = {\rm E} \big[x \cdot y \big] = {\rm E} \big[(A \cdot u + B \cdot v) (A \cdot u - B \cdot v)\big] . $$ | ||

| − | *According to the general calculation rules for expected values, it follows: | + | *According to the general calculation rules for expected values, it follows: |

:$$m_{xy} = A^2 \cdot {\rm E} \big[u^2 \big] - B^2 \cdot {\rm E} \big[v^2 \big] = (A^2 - B^2)(m^2 + \sigma^2).$$ | :$$m_{xy} = A^2 \cdot {\rm E} \big[u^2 \big] - B^2 \cdot {\rm E} \big[v^2 \big] = (A^2 - B^2)(m^2 + \sigma^2).$$ | ||

| − | *This gives the covariance to | + | *This gives the covariance to |

:$$\mu_{xy} = m_{xy} - m_{x} \cdot m_{y}= (A^2 - B^2)(m^2 + \sigma^2) - (A + B)(A-B) \cdot m^2 = (A^2 - B^2) \cdot \sigma^2.$$ | :$$\mu_{xy} = m_{xy} - m_{x} \cdot m_{y}= (A^2 - B^2)(m^2 + \sigma^2) - (A + B)(A-B) \cdot m^2 = (A^2 - B^2) \cdot \sigma^2.$$ | ||

| − | *With $\sigma = 1$, $A = 1$ and $B = 2$ we get $\mu_{xy} \hspace{0.15cm}\underline{ =-3}$ | + | *With $\sigma = 1$, $A = 1$ and $B = 2$ we get $\mu_{xy} \hspace{0.15cm}\underline{ =-3}$. Tthis is independent of the mean $m$ of the variables $u$ and $v$. |

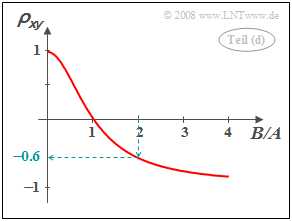

| − | [[File:P_ID403__Sto_A_4_7_d_neu.png|right|frame|correlation coefficient as a function of quotient $B/A$]] | + | [[File:P_ID403__Sto_A_4_7_d_neu.png|right|frame|correlation coefficient as a function of the quotient $B/A$]] |

| − | '''(4)''' The correlation coefficient is obtained as | + | '''(4)''' The correlation coefficient is obtained as |

:$$\rho_{xy} =\frac{\mu_{xy}}{\sigma_x \cdot \sigma_y} = \frac{(A^2 - B^2) \cdot \sigma^2}{(A^2 +B^2) \cdot \sigma^2} | :$$\rho_{xy} =\frac{\mu_{xy}}{\sigma_x \cdot \sigma_y} = \frac{(A^2 - B^2) \cdot \sigma^2}{(A^2 +B^2) \cdot \sigma^2} | ||

| − | \hspace{0.5 cm}\ | + | \hspace{0.5 cm}\Rightarrow \hspace{0.5 cm}\rho_{xy} =\frac{1 - (B/A)^2} {1 +(B/A)^2}.$$ |

*With $B/A = 2$ it follows $\rho_{xy} \hspace{0.15cm}\underline{ =-0.6}$. | *With $B/A = 2$ it follows $\rho_{xy} \hspace{0.15cm}\underline{ =-0.6}$. | ||

| Line 113: | Line 113: | ||

'''(5)''' Correct are <u>statements 1, 3, and 4</u>: | '''(5)''' Correct are <u>statements 1, 3, and 4</u>: | ||

| − | *From $B= 0$ follows $\rho_{xy} = 1$ (strict correlation). It can be further seen that in this case $x = u$ and $y = u$ are identical random variables. | + | *From $B= 0$ follows $\rho_{xy} = 1$ ("strict correlation"). It can be further seen that in this case $x = u$ and $y = u$ are identical random variables. |

*The second statement is not true: For $A = 1$ and $B= -2$ also results $\rho_{xy} = -0.6$. | *The second statement is not true: For $A = 1$ and $B= -2$ also results $\rho_{xy} = -0.6$. | ||

*So the sign of the quotient does not matter because in the equation calculated in subtask '''(4)'''' the quotient $B/A$ occurs only quadratically. | *So the sign of the quotient does not matter because in the equation calculated in subtask '''(4)'''' the quotient $B/A$ occurs only quadratically. | ||

| − | *If $B \gg A$, both $x$ and $y$ are determined almost exclusively by the random variable | + | *If $B \gg A$, both $x$ and $y$ are determined almost exclusively by the random variable $v$ and it is $ y \approx -x$. This corresponds to the correlation coefficient $\rho_{xy} = -1$. |

*In contrast, $B/A = 1$ always yields the correlation coefficient $\rho_{xy} = 0$ and thus the uncorrelatedness between $x$ and $y$. | *In contrast, $B/A = 1$ always yields the correlation coefficient $\rho_{xy} = 0$ and thus the uncorrelatedness between $x$ and $y$. | ||

| − | '''(6)''' <u>Both statements | + | '''(6)''' <u>Both statements</u> are true: |

| − | *When $A=B$ | + | *When $A=B$ ⇒ $x$ and $y$ are always uncorrelated $($for any PDF of the variables $u$ and $v)$. |

*The new random variables $x$ and $y$ are therefore also distributed randomly. | *The new random variables $x$ and $y$ are therefore also distributed randomly. | ||

| − | *For Gaussian randomness, however, statistical independence follows from uncorrelatedness, and vice versa. | + | *For Gaussian randomness, however, statistical independence follows from uncorrelatedness, and vice versa. |

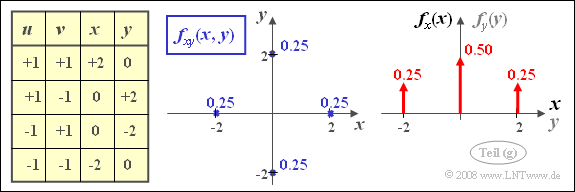

| − | [[File:P_ID404__Sto_A_4_7_g.png|right|frame| | + | [[File:P_ID404__Sto_A_4_7_g.png|right|frame|Joint PDF and edge PDFs]] |

| − | '''(7)''' Here, only <u>statement 1</u> is true: | + | '''(7)''' Here, only <u>statement 1</u> is true: |

| − | *The correlation coefficient results with $A=B= 1$ | + | *The correlation coefficient results with $A=B= 1$ to $\rho_{xy} = 0$. That is: $x$ and $y$ are uncorrelated. |

| − | * | + | *But it can be seen from the sketched two-dimensional PDF that the condition of statistical independence no longer applies in the present case: |

| − | $$f_{xy}(x, y) \ne f_{x}(x) \cdot f_{y}(y).$$ | + | :$$f_{xy}(x, y) \ne f_{x}(x) \cdot f_{y}(y).$$ |

Revision as of 15:42, 25 February 2022

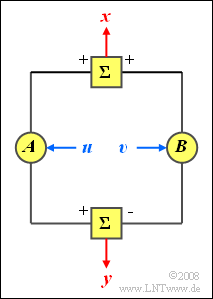

Let the random variables $u$ and $v$ be statistically independent of each other, each with mean $m$ and variance $\sigma^2$.

- Both variables have the same probability density function $\rm (PDF)$ and the same cumulative distribution function $\rm (CDF)$.

- Nothing is known about the shape of these functions for now.

Now two new random variables $x$ and $y$ are formed according to the following equations:

- $$x = A \cdot u + B \cdot v,$$

- $$y= A \cdot u - B \cdot v.$$

Here, $A$ and $B$ denote arbitrary constants.

- For subtasks (1) to (4), let $m = 0$, $\sigma = 1$, $A = 1$, and $B = 2$.

- In subtask (6), $u$ and $v$ are each Gaussian distributed with $m = 1$ and $\sigma = 0.5$. For the constants, $A = B = 1$.

- For subtask (7), $A = B = 1$ still holds. Here, the random variables $u$ and $v$ each follow a symmetric two-point distribution on $\pm 1$:

- $${\rm Pr}(u=+1) = {\rm Pr}(u=-1) = {\rm Pr}(v=+1) = {\rm Pr}(v=-1) =0.5.$$

Note:

- The exercise belongs to the chapter Linear Combinations of Random Variables.

Questions

Solution

(1) Since the random variables $u$ and $v$ are zero mean $(m = 0)$, the random variable $x$ is also zero mean:

- $$m_x = (A +B) \cdot m \hspace{0.15cm}\underline{ =0}.$$

- Since $u$ and $v$ are statistically independent, the weighted variances add. For the variance and the standard deviation, this gives:

- $$\sigma_x^2 = (A^2 +B^2) \cdot \sigma^2 = 5; \hspace{0.5cm} \sigma_x = \sqrt{5}\hspace{0.15cm}\underline{ \approx 2.236}.$$
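The moment formulas of subtask (1) hold for any PDF of $u$ and $v$. The following Python sketch is a rough numerical sanity check. It assumes, for illustration only and not as part of the exercise, that $u$ and $v$ are uniform with mean $0$ and standard deviation $1$. It then estimates $m_x$ and $\sigma_x$ from samples, and the estimates should come out close to $0$ and $\sqrt{5} \approx 2.236$. The sample size and seed are arbitrary choices.

```python
import math
import random
import statistics

# Illustrative assumption (not part of the exercise): u and v are uniform on
# [-sqrt(3), +sqrt(3)], which gives mean 0 and standard deviation 1.
A, B = 1, 2
N = 200_000
half_width = math.sqrt(3)
rng = random.Random(42)  # fixed seed for reproducibility

# Sample x = A*u + B*v with independent draws of u and v.
xs = [A * rng.uniform(-half_width, half_width) + B * rng.uniform(-half_width, half_width)
      for _ in range(N)]

m_x_est = statistics.fmean(xs)
sigma_x_est = math.sqrt(statistics.fmean((x - m_x_est) ** 2 for x in xs))

print(f"m_x ~ {m_x_est:.3f}, sigma_x ~ {sigma_x_est:.3f}, sqrt(5) = {math.sqrt(5):.3f}")
```

Swapping the uniform draws for any other zero-mean, unit-variance distribution should give similar estimates, because only the first two moments enter the result.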

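(2) Since $u$ and $v$ are statistically independent and $B$ enters the variance only as $B^2$, the random variable $y$ has the same variance as $x$, namely $(A^2 + B^2) \cdot \sigma^2$. Hence $\sigma_y = \sigma_x \hspace{0.15cm}\underline{ \approx 2.236}$.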

- Because $m = 0$, we also have $m_y = m_x \hspace{0.15cm}\underline{ =0}$.

- For random variables $u$ and $v$ with nonzero mean $(m \ne 0)$, on the other hand, $m_y = (A - B) \cdot m$ differs from $m_x = (A + B) \cdot m$ (as long as $B \ne 0$).
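The closed-form moments of subtasks (1) and (2) can be sketched as a small Python helper. The function name `moments` is hypothetical. It uses only the relations $m_x = (A + B) \cdot m$ and $\sigma_x^2 = (A^2 + B^2) \cdot \sigma^2$, and it obtains the moments of $y$ by passing $-B$ instead of $B$. The sketch shows that $\sigma_y = \sigma_x$ for every $m$, while $m_y \ne m_x$ once $m \ne 0$.

```python
import math

def moments(A, B, m, sigma):
    """Mean and standard deviation of A*u + B*v for statistically independent
    u, v that share mean m and standard deviation sigma."""
    mean = (A + B) * m
    std = math.sqrt(A**2 + B**2) * sigma  # variances of independent terms add
    return mean, std

A, B, sigma = 1, 2, 1
for m in (0, 1):
    m_x, sigma_x = moments(A, B, m, sigma)   # x = A*u + B*v
    m_y, sigma_y = moments(A, -B, m, sigma)  # y = A*u - B*v = A*u + (-B)*v
    print(m, m_x, m_y, sigma_x == sigma_y)
```

For $m = 0$ this prints `0 0 0 True`, and for $m = 1$ it prints `1 3 -1 True`.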

(3) In this sample solution, we consider the more general case $m \ne 0$. The joint moment is then:

- $$m_{xy} = {\rm E} \big[x \cdot y \big] = {\rm E} \big[(A \cdot u + B \cdot v) (A \cdot u - B \cdot v)\big] . $$

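- When the product is expanded, the cross terms $-A B \cdot {\rm E}[u \cdot v]$ and $+A B \cdot {\rm E}[v \cdot u]$ cancel. With the linearity of the expected value and ${\rm E}[u^2] = {\rm E}[v^2] = m^2 + \sigma^2$, it follows: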

- $$m_{xy} = A^2 \cdot {\rm E} \big[u^2 \big] - B^2 \cdot {\rm E} \big[v^2 \big] = (A^2 - B^2)(m^2 + \sigma^2).$$

- This gives the covariance:

- $$\mu_{xy} = m_{xy} - m_{x} \cdot m_{y}= (A^2 - B^2)(m^2 + \sigma^2) - (A + B)(A-B) \cdot m^2 = (A^2 - B^2) \cdot \sigma^2.$$

- With $\sigma = 1$, $A = 1$ and $B = 2$, we get $\mu_{xy} \hspace{0.15cm}\underline{ =-3}$. This result is independent of the mean $m$ of the variables $u$ and $v$.
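The following Python sketch checks numerically that $\mu_{xy} = (A^2 - B^2) \cdot \sigma^2$ does not depend on $m$. It evaluates the expected values exactly with `fractions.Fraction`. Because the exercise leaves the PDF open, the sketch assumes, purely for illustration, that $u$ and $v$ each take the values $m - \sigma$ and $m + \sigma$ with probability $1/2$. This choice gives mean $m$ and standard deviation $\sigma$. The function name `covariance_xy` is hypothetical.

```python
from fractions import Fraction
from itertools import product

def covariance_xy(A, B, m, sigma):
    # Hypothetical test distribution: u and v independently take m - sigma or
    # m + sigma with probability 1/2 each (mean m, standard deviation sigma).
    values = (m - sigma, m + sigma)
    p = Fraction(1, 4)                      # probability of each (u, v) pair
    E_x = E_y = E_xy = Fraction(0)
    for u, v in product(values, values):
        x = A * u + B * v
        y = A * u - B * v
        E_x += p * x
        E_y += p * y
        E_xy += p * x * y                   # joint moment m_xy
    return E_xy - E_x * E_y                 # covariance mu_xy

for m in (0, 1, Fraction(5, 2)):
    print(m, covariance_xy(1, 2, m, 1))
```

For each of the three means, the printed covariance is $-3$.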

(4) The correlation coefficient is obtained as

- $$\rho_{xy} =\frac{\mu_{xy}}{\sigma_x \cdot \sigma_y} = \frac{(A^2 - B^2) \cdot \sigma^2}{(A^2 +B^2) \cdot \sigma^2} \hspace{0.5 cm}\Rightarrow \hspace{0.5 cm}\rho_{xy} =\frac{1 - (B/A)^2} {1 +(B/A)^2}.$$

- With $B/A = 2$ it follows $\rho_{xy} \hspace{0.15cm}\underline{ =-0.6}$.
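The correlation coefficient can be evaluated directly from the covariance and the standard deviations derived above. In this Python sketch, the function name `rho_xy` is hypothetical. Because $\sigma$ cancels, only the ratio $B/A$ affects the result. The rounding only hides the floating-point noise in $\sqrt{5} \cdot \sqrt{5}$.

```python
import math

def rho_xy(A, B, sigma):
    """Correlation coefficient of x = A*u + B*v and y = A*u - B*v."""
    mu_xy = (A**2 - B**2) * sigma**2                     # covariance, subtask (3)
    sigma_x = sigma_y = math.sqrt(A**2 + B**2) * sigma   # subtasks (1) and (2)
    return mu_xy / (sigma_x * sigma_y)

print(round(rho_xy(1, 2, 1), 12))    # -0.6
print(round(rho_xy(3, 6, 0.7), 12))  # -0.6: same B/A, different scale
```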

(5) Statements 1, 3, and 4 are correct:

- $B = 0$ yields $\rho_{xy} = 1$ ("strict correlation"). In this case, $x = y = A \cdot u$, i.e., $x$ and $y$ are identical random variables.

- The second statement is false: $A = 1$ and $B = -2$ also yield $\rho_{xy} = -0.6$.

- The sign of the quotient therefore does not matter, because $B/A$ occurs only squared in the equation derived in subtask (4).

- If $B \gg A$, both $x$ and $y$ are determined almost exclusively by the random variable $v$, so that $y \approx -x$. Accordingly, the correlation coefficient approaches $\rho_{xy} = -1$.

- In contrast, $B/A = 1$ always yields the correlation coefficient $\rho_{xy} = 0$, i.e., $x$ and $y$ are uncorrelated.
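The statements of subtask (5) can be checked by evaluating the formula of subtask (4) for a few ratios. The Python sketch below uses the hypothetical function name `rho_of_ratio` and takes $B/A = 100$ as a stand-in for $B \gg A$.

```python
def rho_of_ratio(q):
    """Correlation coefficient as a function of q = B/A, from subtask (4)."""
    return (1 - q**2) / (1 + q**2)

# Cases discussed above: B = 0, B/A = +2 and -2, B/A = 1, and B >> A.
for q in (0, 2, -2, 1, 100):
    print(f"B/A = {q:>4}: rho_xy = {rho_of_ratio(q):+.4f}")
```

The loop prints $+1$, $-0.6$, $-0.6$, $0$ and about $-0.9998$. The two signs of $B/A$ give the same value, and large ratios approach $-1$.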

(6) Both statements are true:

- For $A = B$, $x$ and $y$ are always uncorrelated $($for any PDF of the variables $u$ and $v)$.

- Since $u$ and $v$ are statistically independent and Gaussian, the linear combinations $x$ and $y$ are jointly Gaussian as well.

- For jointly Gaussian random variables, however, uncorrelatedness implies statistical independence (and independence always implies uncorrelatedness). Hence $x$ and $y$ are also statistically independent.
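Uncorrelatedness alone does not imply independence, so the Gaussian assumption matters. The following Python sketch is a Monte Carlo illustration that is not part of the original solution. For $A = B = 1$, it estimates the correlation coefficient and the ratio ${\rm E}[X^2 Y^2] / ({\rm E}[X^2] \cdot {\rm E}[Y^2])$ of the centred variables $X = x - m_x$ and $Y = y - m_y$. For independent $x$ and $y$ this ratio must equal $1$, which is a necessary but not a sufficient condition. With Gaussian $u$ and $v$ ($m = 1$, $\sigma = 0.5$), as assumed in the solution of subtask (6), the ratio comes out near $1$. As a contrast, the sketch also assumes uniform $u$ and $v$ with the same mean and standard deviation. In that case the ratio comes out near $0.4$, so those $x$ and $y$ are uncorrelated but dependent. The function and helper names are hypothetical.

```python
import math
import random
import statistics

def uncorrelated_vs_independent(draw, n=200_000, seed=1):
    """Monte Carlo estimates for x = u + v, y = u - v (A = B = 1).

    Returns (rho_xy, ratio) with ratio = E[X^2 Y^2] / (E[X^2] E[Y^2]) for the
    centred variables X = x - m_x, Y = y - m_y. Independence requires ratio = 1.
    """
    rng = random.Random(seed)
    pairs = [(draw(rng), draw(rng)) for _ in range(n)]
    xs = [u + v for u, v in pairs]
    ys = [u - v for u, v in pairs]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    X = [x - mx for x in xs]
    Y = [y - my for y in ys]
    e_x2 = statistics.fmean(a * a for a in X)
    e_y2 = statistics.fmean(b * b for b in Y)
    e_xy = statistics.fmean(a * b for a, b in zip(X, Y))
    e_x2y2 = statistics.fmean((a * b) ** 2 for a, b in zip(X, Y))
    return e_xy / math.sqrt(e_x2 * e_y2), e_x2y2 / (e_x2 * e_y2)

M, S = 1.0, 0.5
HALF_WIDTH = S * math.sqrt(3)  # uniform on [M - HALF_WIDTH, M + HALF_WIDTH] has std S

def gauss(rng):
    return rng.gauss(M, S)  # Gaussian with m = 1, sigma = 0.5

def uniform(rng):
    return rng.uniform(M - HALF_WIDTH, M + HALF_WIDTH)  # contrast case (assumption)

rho_g, ratio_g = uncorrelated_vs_independent(gauss)
rho_u, ratio_u = uncorrelated_vs_independent(uniform)
print(f"Gaussian: rho ~ {rho_g:+.3f}, ratio ~ {ratio_g:.3f}")
print(f"uniform:  rho ~ {rho_u:+.3f}, ratio ~ {ratio_u:.3f}")
```

The expected ratios follow from ${\rm E}[X^2 Y^2] = 2\mu_4 - 2\sigma^4$ and ${\rm E}[X^2] \cdot {\rm E}[Y^2] = 4\sigma^4$. Here $\mu_4$ is the fourth central moment of $u$, which equals $3\sigma^4$ for a Gaussian PDF and $1.8\sigma^4$ for a uniform PDF. This gives ratios of $1$ and $0.4$, respectively.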

(7) Here, only statement 1 is true:

- With $A = B = 1$, the correlation coefficient is $\rho_{xy} = 0$, i.e., $x$ and $y$ are uncorrelated.

- However, the sketched two-dimensional PDF shows that the condition for statistical independence is not satisfied in this case:

- $$f_{xy}(x, y) \ne f_{x}(x) \cdot f_{y}(y).$$
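The counterexample of subtask (7) is small enough to enumerate exactly. The Python sketch below builds the joint probability mass function of $(x, y)$ from the four equally likely pairs $(u, v)$ and computes the covariance. It then compares each joint probability with the product of the marginal probabilities. For instance, $x = 2$ requires $u = v = +1$ and therefore $y = 0$. This gives ${\rm Pr}(x = 2, y = 0) = 1/4$, whereas ${\rm Pr}(x = 2) \cdot {\rm Pr}(y = 0) = 1/4 \cdot 1/2 = 1/8$. Because the variables are discrete here, the sketch works with probability masses instead of a PDF.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Two-point distribution: u, v in {-1, +1}, each value with probability 1/2, independent.
P = Fraction(1, 2)

joint = defaultdict(Fraction)            # Pr(x, y)
for u, v in product((-1, +1), repeat=2):
    joint[(u + v, u - v)] += P * P       # A = B = 1

f_x = defaultdict(Fraction)              # marginal Pr(x)
f_y = defaultdict(Fraction)              # marginal Pr(y)
for (x, y), prob in joint.items():
    f_x[x] += prob
    f_y[y] += prob

m_x = sum(prob * x for x, prob in f_x.items())
m_y = sum(prob * y for y, prob in f_y.items())
cov = sum(prob * x * y for (x, y), prob in joint.items()) - m_x * m_y

# Independence would require Pr(x, y) = Pr(x) * Pr(y) for every combination.
independent = all(joint.get((x, y), 0) == f_x[x] * f_y[y] for x in f_x for y in f_y)

print(cov)                              # 0 -> uncorrelated
print(independent)                      # False -> not statistically independent
print(joint[(2, 0)], f_x[2] * f_y[0])   # 1/4 1/8
```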