Channel Coding/Reed-Solomon Decoding for the Erasure Channel

Latest revision as of 12:36, 24 November 2022


Block diagram and requirements for Reed-Solomon error detection

In the "Decoding at the Binary Erasure Channel" chapter we showed, for binary block codes, which calculations the decoder has to perform in order to recover the transmitted code word $\underline{x}$ as well as possible from an incomplete received word $\underline{y}$. In the Reed–Solomon chapters, $\underline{x}$ is renamed $\underline{c}$.

- This chapter is based on the $\text{BEC model}$ ("Binary Erasure Channel"), which marks an uncertain bit as "erasure" $\rm E$.

- In contrast to the $\text{BSC model}$ ("Binary Symmetric Channel") and $\text{AWGN model}$ ("Additive White Gaussian Noise"), bit errors $(y_i ≠ c_i)$ are excluded here.

Each bit of a received word

- thus matches the corresponding bit of the code word $(y_i = c_i)$, or

- is already marked as an erasure $(y_i = \rm E)$.
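The two cases above can be illustrated with a small simulation. This is only a sketch: the erasure probability `lam`, the marker string `"E"` and the function name `bec` are assumptions chosen for illustration and do not come from the chapter.

```python
import random

ERASURE = "E"  # marker for an erased bit (assumed representation)

def bec(bits, lam, rng):
    """Pass bits through a binary erasure channel with erasure probability lam."""
    return [ERASURE if rng.random() < lam else b for b in bits]

rng = random.Random(1)                      # fixed seed, only for reproducibility
c = [rng.randint(0, 1) for _ in range(20)]  # code bits
y = bec(c, 0.2, rng)                        # received bits

# Every received bit is either correct or erased; a flipped bit never occurs.
assert all(yi == ci or yi == ERASURE for yi, ci in zip(y, c))
print(y)
```

The assertion expresses the defining property of the model: the channel may withhold a bit, but it never falsifies one.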

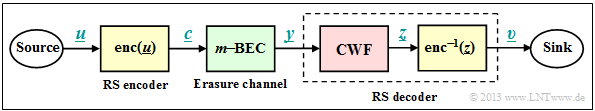

The graphic shows the block diagram, which differs slightly from the model in the chapter $\text{Decoding of linear block codes}$:

- Since Reed–Solomon codes are linear block codes, the information word $\underline{u}$ and the code word $\underline{c}$ are again related via the generator matrix $\boldsymbol{\rm G}$ according to the following equation:

- \[\underline {c} = {\rm enc}(\underline {u}) = \underline {u} \cdot { \boldsymbol{\rm G}} \hspace{0.3cm} {\rm with} \hspace{0.3cm}\underline {u} = (u_0, u_1,\hspace{0.05cm}\text{ ... }\hspace{0.1cm}, u_i, \hspace{0.05cm}\text{ ... }\hspace{0.1cm}, u_{k-1})\hspace{0.05cm}, \hspace{0.2cm} \underline {c} = (c_0, c_1, \hspace{0.05cm}\text{ ... }\hspace{0.1cm}, c_i, \hspace{0.05cm}\text{ ... }\hspace{0.1cm}, c_{n-1}) \hspace{0.05cm}.\]

- For the individual symbols of the information block and the code word, the following applies in Reed–Solomon coding:

- \[u_i \in {\rm GF}(q)\hspace{0.05cm},\hspace{0.2cm}c_i \in {\rm GF}(q)\hspace{0.3cm}{\rm with}\hspace{0.3cm} q = n+1 = 2^m \hspace{0.3cm} \Rightarrow \hspace{0.3cm} n = 2^m - 1\hspace{0.05cm}. \]

- Each code symbol $c_i$ is thus represented by $m ≥ 2$ binary symbols. For comparison: for binary block codes, $q=2$ and $m=1$ hold, and the code word length $n$ is freely selectable.

- When encoding at symbol level, the BEC model must be extended to the "m-BEC model":

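- With probability $\lambda_m ≈ m \cdot\lambda$, where $\lambda$ is the erasure probability of a single bit and the approximation holds for small $\lambda$, a code symbol $c_i$ is erased $(y_i = \rm E)$; otherwise it is received correctly: ${\rm Pr}(y_i = c_i) = 1 - \lambda_m$.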

- For more details on the conversion of the two models, see "Exercise 2.11Z".

- In the following, we deal only with the block "code word finder" $\rm (CWF)$, which extracts from the received vector $\underline{y}$ the vector $\underline{z} ∈ \mathcal{C}_{\rm RS}$:

- If the number $e$ of erasures in the vector $\underline{y}$ is sufficiently small $($for a Reed–Solomon code: $e \le n-k)$, the entire code word can be found with certainty: $(\underline{z}=\underline{c})$.

- If too many symbols of the received word $\underline{y}$ are erased, the decoder reports that this word cannot be decoded. It may then be necessary to send this sequence again.

- In the case of the "m-BEC" model, a wrong decision $(\underline{z} \ne\underline{c})$ is excluded ⇒ »block error probability« ${\rm Pr}(\underline{z}\ne\underline{c}) = 0$ ⇒ ${\rm Pr}(\underline{v}\ne\underline{u}) = 0$.

- According to the block diagram $($yellow background$)$, the reconstructed information word is $\underline{v} = {\rm enc}^{-1}(\underline{z})$.

- Using the generator matrix $\boldsymbol{\rm G}$, this can also be written as:

- \[\underline {c} = \underline {u} \cdot { \boldsymbol{\rm G}} \hspace{0.3cm} \Rightarrow \hspace{0.3cm}\underline {z} = \underline {\upsilon} \cdot { \boldsymbol{\rm G}} \hspace{0.3cm} \Rightarrow \hspace{0.3cm}\underline {\upsilon} = \underline {z} \cdot { \boldsymbol{\rm G}}^{\rm T} \hspace{0.05cm}. \]
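The approximation $\lambda_m ≈ m \cdot\lambda$ used for the "m-BEC model" can be checked numerically. The sketch below assumes that the $m$ bits of a symbol are erased independently with probability $\lambda$ and that a symbol counts as erased as soon as at least one of its bits is erased. Both this assumption and the function name `symbol_erasure_prob` are illustrative and not taken from this chapter; the exact conversion is the subject of Exercise 2.11Z.

```python
def symbol_erasure_prob(lam, m):
    """Probability that at least one of m independently erased bits is erased."""
    return 1.0 - (1.0 - lam) ** m

m = 3  # bits per symbol, as for GF(2^3)
for lam in (0.001, 0.01, 0.1):
    exact = symbol_erasure_prob(lam, m)
    print(f"lambda={lam}: exact={exact:.6f}, approx m*lambda={m * lam:.6f}")
```

Under these assumptions the product $m \cdot\lambda$ is an upper bound that becomes tight for small $\lambda$ and noticeably loose once $\lambda$ approaches $0.1$.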

Decoding procedure using the RSC (7, 3, 5)8 as an example

In order to present Reed–Solomon decoding for the erasure channel as simply as possible, we start from a concrete example:

- A Reed–Solomon code with parameters $n= 7$, $k= 3$ and $q= 2^3 = 8$ is used.

- Thus, the following holds for the information word $\underline{u}$ and the code word $\underline{c}$:

- \[\underline {u} = (u_0, u_1, u_2) \hspace{0.05cm},\hspace{0.15cm} \underline {c} = (c_0, c_1, c_2,c_3,c_4,c_5,c_6)\hspace{0.05cm},\hspace{0.15cm} u_i, c_i \in {\rm GF}(2^3) = \{0, 1, \alpha, \alpha^2, \text{...}\hspace{0.05cm} , \alpha^6\} \hspace{0.05cm}.\]

- The parity-check matrix $\boldsymbol{\rm H}$ is:

- \[{ \boldsymbol{\rm H}} = \begin{pmatrix} 1 & \alpha^1 & \alpha^2 & \alpha^3 & \alpha^4 & \alpha^5 & \alpha^6\\ 1 & \alpha^2 & \alpha^4 & \alpha^6 & \alpha^1 & \alpha^{3} & \alpha^{5}\\ 1 & \alpha^3 & \alpha^6 & \alpha^2 & \alpha^{5} & \alpha^{1} & \alpha^{4}\\ 1 & \alpha^4 & \alpha^1 & \alpha^{5} & \alpha^{2} & \alpha^{6} & \alpha^{3} \end{pmatrix}\hspace{0.05cm}. \]
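The structure of this parity-check matrix can be reproduced with a few lines of code. The following sketch builds ${\rm GF}(2^3)$ from the polynomial $p(x) = x^3 + x + 1$ that this chapter uses for the field. Representing field elements as 3-bit integers (bit $i$ stands for the coefficient of $\alpha^i$) and the names `EXP`, `LOG`, `gf_mul` and `H_exponents` are assumptions made for illustration.

```python
# GF(2^3) with p(x) = x^3 + x + 1; elements are 3-bit integers.
EXP = [1]                       # EXP[i] will hold alpha^i
for _ in range(6):
    v = EXP[-1] << 1            # multiply by alpha
    if v & 0b1000:              # reduce using alpha^3 = alpha + 1
        v ^= 0b1011
    EXP.append(v)
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    """Multiply two field elements via exponent addition modulo 7."""
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 7]

# Row r (r = 1..4), column j (j = 0..6) of H holds alpha^(r*j).
H_exponents = [[(r * j) % 7 for j in range(7)] for r in range(1, 5)]
for row in H_exponents:
    print(row)
```

The printed exponent rows agree with the matrix above, for example `[0, 3, 6, 2, 5, 1, 4]` for the third row.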

⇒ As an example, we assume the received vector

- $$\underline {y} = (\alpha, \hspace{0.03cm} 1, \hspace{0.03cm}{\rm E}, \hspace{0.03cm}{\rm E}, \hspace{0.03cm}\alpha^2,{\rm E}, \hspace{0.03cm}\alpha^5)$$

Then the following holds:

- Since the erasure channel produces no errors, four of the code symbols are known to the decoder:

- \[c_0 = \alpha^1 \hspace{0.05cm},\hspace{0.2cm} c_1 = 1 \hspace{0.05cm},\hspace{0.2cm} c_4 = \alpha^2 \hspace{0.05cm},\hspace{0.2cm} c_6 = \alpha^5 \hspace{0.05cm}.\]

- It is obvious that the block "code word finder" $\rm (CWF)$ is to provide a vector of the form $\underline {z} = (c_0, \hspace{0.03cm}c_1, \hspace{0.03cm}z_2, \hspace{0.03cm}z_3,\hspace{0.03cm}c_4,\hspace{0.03cm}z_5,\hspace{0.03cm}c_6)$ with $z_2,\hspace{0.03cm}z_3,\hspace{0.03cm}z_5 \in \rm GF(2^3)$.

- Since the vector $\underline {z}$ found by the decoder must also be a valid Reed–Solomon code word ⇒ $\underline {z} ∈ \mathcal{C}_{\rm RS}$, the following must hold as well:

- \[{ \boldsymbol{\rm H}} \cdot \underline {z}^{\rm T} = \underline {0}^{\rm T} \hspace{0.3cm} \Rightarrow \hspace{0.3cm} \begin{pmatrix} 1 & \alpha^1 & \alpha^2 & \alpha^3 & \alpha^4 & \alpha^5 & \alpha^6\\ 1 & \alpha^2 & \alpha^4 & \alpha^6 & \alpha^1 & \alpha^{3} & \alpha^{5}\\ 1 & \alpha^3 & \alpha^6 & \alpha^2 & \alpha^{5} & \alpha^{1} & \alpha^{4}\\ 1 & \alpha^4 & \alpha^1 & \alpha^{5} & \alpha^{2} & \alpha^{6} & \alpha^{3} \end{pmatrix} \cdot \begin{pmatrix} c_0\\ c_1\\ z_2\\ z_3\\ c_4\\ z_5\\ c_6 \end{pmatrix} = \begin{pmatrix} 0\\ 0\\ 0\\ 0 \end{pmatrix} \hspace{0.05cm}. \]

- This gives four equations for the three unknowns $z_2$, $z_3$ and $z_5$. Decoding is successful if, and only if, the solution is unique; one can then say with certainty that $\underline {c} = \underline {z}$ was indeed sent.
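Because the field has only eight elements, the uniqueness of the solution can be checked by exhaustive search over all $8^3 = 512$ candidates for $(z_2, z_3, z_5)$. The sketch below is meant as a plausibility check, not as a practical decoder. It assumes the integer representation of ${\rm GF}(2^3)$ induced by $p(x) = x^3 + x + 1$ (bit $i$ stands for the coefficient of $\alpha^i$); the helper names are illustrative.

```python
from itertools import product

# GF(2^3), p(x) = x^3 + x + 1; EXP[i] = alpha^i as a 3-bit integer.
EXP = [1, 2, 4, 3, 6, 7, 5]
LOG = {v: i for i, v in enumerate(EXP)}

def gf_mul(a, b):
    """Multiply two field elements via exponent addition modulo 7."""
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 7]

def syndrome(z):
    """Return H * z^T for H[r][j] = alpha^(r*j), r = 1..4."""
    out = []
    for r in range(1, 5):
        s = 0
        for j, zj in enumerate(z):
            s ^= gf_mul(EXP[(r * j) % 7], zj)  # addition in GF(2^m) is XOR
        out.append(s)
    return out

known = {0: EXP[1], 1: EXP[0], 4: EXP[2], 6: EXP[5]}  # c_0, c_1, c_4, c_6
erased = [2, 3, 5]                                    # positions marked E

solutions = []
for cand in product(range(8), repeat=len(erased)):
    z = [0] * 7
    for pos, val in known.items():
        z[pos] = val
    for pos, val in zip(erased, cand):
        z[pos] = val
    if syndrome(z) == [0, 0, 0, 0]:
        solutions.append(cand)

print(solutions)  # → [(4, 2, 7)]
```

Exactly one candidate satisfies all four equations. In this integer representation, `(4, 2, 7)` corresponds to $(\alpha^2, \alpha^1, \alpha^5)$.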

Solution of the matrix equations using the example of the RSC (7, 3, 5)8

Thus, we need to find the admissible code word $\underline {z}$ that satisfies the defining equation

- $$\boldsymbol{\rm H} \cdot \underline {z}^{\rm T} = \underline {0}^{\rm T}. $$

- For convenience, we split the vector $\underline {z}$ into two partial vectors, viz.

- the vector $\underline {z}_{\rm E} = (z_2, z_3, z_5)$ of the erased symbols $($subscript "$\rm E$" for "erasures"$)$,

- the vector $\underline {z}_{\rm K} = (c_0, c_1,c_4, c_6)$ of known symbols $($subscript "$\rm K$" for German "korrekt" ⇒ "correct" $)$.

- With the associated partial matrices $($each with $n-k = 4$ rows$)$

- \[{ \boldsymbol{\rm H}}_{\rm E} = \begin{pmatrix} \alpha^2 & \alpha^3 & \alpha^5 \\ \alpha^4 & \alpha^6 & \alpha^{3} \\ \alpha^6 & \alpha^2 & \alpha^{1} \\ \alpha^1 & \alpha^{5} & \alpha^{6} \end{pmatrix} \hspace{0.05cm},\hspace{0.4cm} { \boldsymbol{\rm H}}_{\rm K} = \begin{pmatrix} 1 & \alpha^1 & \alpha^4 & \alpha^6\\ 1 & \alpha^2 & \alpha^1 & \alpha^{5}\\ 1 & \alpha^3 & \alpha^{5} & \alpha^{4}\\ 1 & \alpha^4 & \alpha^{2} & \alpha^{3} \end{pmatrix}\]

- the defining equation thus reads:

- \[{ \boldsymbol{\rm H}}_{\rm E} \cdot \underline {z}_{\rm E}^{\rm T} + { \boldsymbol{\rm H}}_{\rm K} \cdot \underline {z}_{\rm K}^{\rm T} = \underline {0}^{\rm T} \hspace{0.5cm} \Rightarrow \hspace{0.5cm} { \boldsymbol{\rm H}}_{\rm E} \cdot \underline {z}_{\rm E}^{\rm T} = - { \boldsymbol{\rm H}}_{\rm K} \cdot \underline {z}_{\rm K}^{\rm T}\hspace{0.05cm}. \]

- Since the $\text{additive inverse}$ of every element $z_i ∈ {\rm GF}(2^m)$ is the element itself, ${\rm Inv_A}(z_i)= (- z_i) = z_i$, the minus sign can be dropped and the following holds as well:

- \[{ \boldsymbol{\rm H}}_{\rm E} \cdot \underline {z}_{\rm E}^{\rm T} = { \boldsymbol{\rm H}}_{\rm K} \cdot \underline {z}_{\rm K}^{\rm T} = \begin{pmatrix} 1 & \alpha^1 & \alpha^4 & \alpha^6\\ 1 & \alpha^2 & \alpha^1 & \alpha^{5}\\ 1 & \alpha^3 & \alpha^{5} & \alpha^{4}\\ 1 & \alpha^4 & \alpha^{2} & \alpha^{3} \end{pmatrix} \cdot \begin{pmatrix} \alpha^1\\ 1\\ \alpha^{2}\\ \alpha^{5} \end{pmatrix} = \hspace{0.45cm}... \hspace{0.45cm}= \begin{pmatrix} \alpha^3\\ \alpha^{4}\\ \alpha^{2}\\ 0 \end{pmatrix} \hspace{0.05cm}.\]

- The right–hand side of the equation results, for the considered example, from $\underline {z}_{\rm K} = (c_0, c_1,c_4, c_6)$. It is based on the polynomial $p(x) = x^3 + x +1$, which leads to the following powers of $\alpha$:

- \[\alpha^3 =\alpha + 1\hspace{0.05cm}, \hspace{0.3cm} \alpha^4 = \alpha^2 + \alpha\hspace{0.05cm}, \hspace{0.3cm} \alpha^5 = \alpha^2 + \alpha + 1\hspace{0.05cm}, \hspace{0.3cm} \alpha^6 = \alpha^2 + 1\hspace{0.05cm}, \hspace{0.3cm} \alpha^7 \hspace{-0.15cm} = \hspace{-0.15cm} 1\hspace{0.05cm}, \hspace{0.3cm} \alpha^8 = \alpha^1 \hspace{0.05cm}, \hspace{0.3cm} \alpha^9 = \alpha^2 \hspace{0.05cm}, \hspace{0.3cm} \alpha^{10} = \alpha^3 = \alpha + 1\hspace{0.05cm},\hspace{0.1cm} \text{...}\]

- Thus, the matrix equation for determining the desired vector $\underline {z}_{\rm E}$ is:

- \[\begin{pmatrix} \alpha^2 & \alpha^3 & \alpha^5 \\ \alpha^4 & \alpha^6 & \alpha^{3} \\ \alpha^6 & \alpha^2 & \alpha^{1} \\ \alpha^1 & \alpha^{5} & \alpha^{6} \end{pmatrix} \cdot \begin{pmatrix} z_2\\ z_3\\ z_5 \end{pmatrix} \stackrel{!}{=} \begin{pmatrix} \alpha^3\\ \alpha^{4}\\ \alpha^{2}\\ 0 \end{pmatrix} \hspace{0.05cm}. \]

- Solving this matrix equation $($most easily with a computer program$)$, we get

- \[z_2 = \alpha^2\hspace{0.05cm},\hspace{0.25cm}z_3 = \alpha^1\hspace{0.05cm},\hspace{0.25cm}z_5 = \alpha^5 \hspace{0.5cm} \Rightarrow \hspace{0.5cm}\underline {z} = \left ( \hspace{0.05cm} \alpha^1, \hspace{0.05cm}1, \hspace{0.05cm}\alpha^2, \hspace{0.05cm}\alpha^1, \hspace{0.05cm}\alpha^2, \hspace{0.05cm}\alpha^5, \hspace{0.05cm}\alpha^5 \hspace{0.05cm}\right ) \hspace{0.05cm}.\]

- The result is correct, as the following check calculations show:

- \[\alpha^2 \cdot \alpha^2 + \alpha^3 \cdot \alpha^1 + \alpha^5 \cdot \alpha^5 = \alpha^4 + \alpha^4 + \alpha^{10} = \alpha^{10} = \alpha^3\hspace{0.05cm},\]

- \[\alpha^4 \cdot \alpha^2 + \alpha^6 \cdot \alpha^1 + \alpha^3 \cdot \alpha^5 = (\alpha^2 + 1) + (1) + (\alpha) = \alpha^{2} + \alpha = \alpha^4\hspace{0.05cm},\]

- \[\alpha^6 \cdot \alpha^2 + \alpha^2 \cdot \alpha^1 + \alpha^1 \cdot \alpha^5 = (\alpha) + (\alpha + 1) + (\alpha^2 + 1) = \alpha^{2} \hspace{0.05cm},\]

- \[\alpha^1 \cdot \alpha^2 + \alpha^5 \cdot \alpha^1 + \alpha^6 \cdot \alpha^5 = (\alpha + 1) + (\alpha^2 + 1) + (\alpha^2 + \alpha) = 0\hspace{0.05cm}.\]

- The corresponding information word is obtained with the $\text{generator matrix}$ $\boldsymbol{\rm G}$:

- $$\underline {v} = \underline {z} \cdot \boldsymbol{\rm G}^{\rm T} = (\alpha^1,\hspace{0.05cm}1,\hspace{0.05cm}\alpha^3).$$
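The step "solving the matrix equation by program" can be sketched as Gauss-Jordan elimination over ${\rm GF}(2^3)$. The code below covers only the determination of $\underline {z}_{\rm E}$, not the subsequent mapping to the information word. Field elements are represented as 3-bit integers according to $p(x) = x^3 + x + 1$; this representation and all function names are assumptions made for illustration.

```python
# GF(2^3), p(x) = x^3 + x + 1; EXP[i] = alpha^i as a 3-bit integer.
EXP = [1, 2, 4, 3, 6, 7, 5]
LOG = {v: i for i, v in enumerate(EXP)}

def mul(a, b):
    return 0 if 0 in (a, b) else EXP[(LOG[a] + LOG[b]) % 7]

def inv(a):
    """Multiplicative inverse of a nonzero element."""
    return EXP[(-LOG[a]) % 7]

def solve(A, b):
    """Solve A x = b over GF(8); return None unless the solution is unique."""
    rows, cols = len(A), len(A[0])
    M = [A[i][:] + [b[i]] for i in range(rows)]   # augmented matrix
    piv = 0
    for c in range(cols):
        p = next((r for r in range(piv, rows) if M[r][c]), None)
        if p is None:
            return None                           # free variable
        M[piv], M[p] = M[p], M[piv]
        f = inv(M[piv][c])
        M[piv] = [mul(f, v) for v in M[piv]]      # normalize pivot row
        for r in range(rows):
            if r != piv and M[r][c]:
                g = M[r][c]                       # subtraction equals XOR here
                M[r] = [v ^ mul(g, w) for v, w in zip(M[r], M[piv])]
        piv += 1
    if any(M[r][cols] for r in range(piv, rows)):
        return None                               # inconsistent equations
    return [M[i][cols] for i in range(cols)]

H = [[EXP[(r * j) % 7] for j in range(7)] for r in range(1, 5)]
erased, known = [2, 3, 5], [0, 1, 4, 6]
z_K = [EXP[1], EXP[0], EXP[2], EXP[5]]            # c_0, c_1, c_4, c_6

H_E = [[row[j] for j in erased] for row in H]
H_K = [[row[j] for j in known] for row in H]

rhs = []
for row in H_K:                                   # right-hand side H_K * z_K^T
    s = 0
    for h, c in zip(row, z_K):
        s ^= mul(h, c)
    rhs.append(s)

z_E = solve(H_E, rhs)
print([LOG[v] for v in z_E])  # exponents of alpha → [2, 1, 5]
```

The printed exponents correspond to $z_2 = \alpha^2$, $z_3 = \alpha^1$, $z_5 = \alpha^5$. Returning `None` for a non-unique or inconsistent system mirrors the case in which the decoder reports that the word cannot be decoded.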

Exercises for the chapter

Exercise 2.11: Reed-Solomon Decoding according to "Erasures"

Exercise 2.11Z: Erasure Channel for Symbols