Difference between revisions of "Aufgaben:Exercise 1.8: Synthetically Generated Texts"


| Line 1: | Line 1: | ||

| − | {{quiz-Header|Buchseite= | + | {{quiz-Header|Buchseite=Information_Theory/Natural_Discrete_Sources |

}} | }} | ||

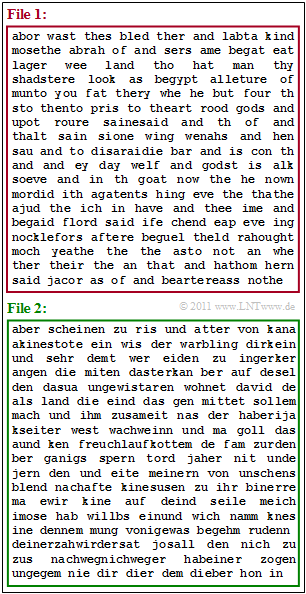

| − | [[File: | + | [[File:EN_Inf_A_1_8.png|right|frame|Two synthetically generated text files]] |

| − | + | With the Windows programme "Discrete-Value Information Theory" from the Chair of Communications Engineering at the TU Munich | |

| − | * | + | *one can determine the frequencies of character triplets such as "aaa", "aab", ... , "xyz", ... from a given text file "TEMPLATE" and save them in an auxiliary file, |

| − | * | + | * then create a file "SYNTHESIS" whereby the new character is generated from the last two generated characters and the stored triple frequencies. |

| − | + | Starting with the German and English Bible translations, we have thus synthesised two files, which are indicated in the graphic: | |

| − | * | + | * $\text{File 1}$ (red border), |

| − | * | + | * $\text{File 2}$ (green border). |

| − | + | It is not indicated which file comes from which template. Determining this is your first task. | |

| − | + | The two templates are based on the natural alphabet $(26$ letters$)$ and the "Blank Space" ("BS") ⇒ $M = 27$. In the German Bible, the umlauts have been replaced, for example "ä" ⇒ "ae". | |

| − | + | $\text{File 1}$ has the following characteristics: | |

| − | * | + | * The most frequent characters are "BS" with $19.8\%$, followed by "e" with $10.2\%$ and "a" with $8.5\%$. |

| − | * | + | * After "BS", "t" occurs most frequently with $17.8\%$. |

| − | * | + | * Before "BS", "d" is most likely. |

| − | * | + | * The entropy approximations in each case with the unit "bit/character" were determined as follows: |

:$$H_0 = 4.76\hspace{0.05cm},\hspace{0.2cm} | :$$H_0 = 4.76\hspace{0.05cm},\hspace{0.2cm} | ||

H_1 = 4.00\hspace{0.05cm},\hspace{0.2cm} | H_1 = 4.00\hspace{0.05cm},\hspace{0.2cm} | ||

| Line 32: | Line 32: | ||

H_4 = 2.81\hspace{0.05cm}. $$ | H_4 = 2.81\hspace{0.05cm}. $$ | ||

| − | + | In contrast, the analysis of $\text{File 2}$ yields: |

| − | * | + | * The most frequent characters are "BS" with $17.6\%$ followed by "e" with $14.4\%$ and "n" with $8.9\%$. |

| − | * | + | * After "BS", "d" is most likely $(15.1\%)$ followed by "s" with $10.8\%$. |

| − | * | + | * After "BS" and "d", the vowels "e" $(48.3\%)$, "i" $(23\%)$ and "a" $(20.2\%)$ are dominant. |

| − | * | + | * The entropy approximations differ only slightly from those of $\text{File 1}$. |

| − | * | + | * For larger $k$–values, these are slightly larger, for example $H_3 = 3.17$ instead of $H_3 = 3.11$. |


| + | ''Hints:'' | ||

| + | *The exercise belongs to the chapter [[Information_Theory/Natural_Discrete_Sources|Natural Discrete Sources]]. | ||

| + | *Reference is also made to the page [[Information_Theory/Natural_Discrete_Sources#Synthetically_generated_texts|Synthetically Generated Texts]]. | ||

| − | === | + | |

| + | ===Questions=== | ||

<quiz display=simple> | <quiz display=simple> | ||

| − | { | + | {Which templates were used for the text synthesis shown here? |

| − | |type=" | + | |type="()"} |

| − | + | + | + $\text{File 1}$ (red) is based on an English template. |

| − | - | + | - $\text{File 1}$ (red) is based on a German template. |

| − | |||

| − | { | + | {Compare the mean word lengths of $\text{File 1}$ and $\text{File 2}$. |

|type="[]"} | |type="[]"} | ||

| − | - | + | - The words of the "English" file are longer on average. |

| − | + | + | + The words of the "German" file are longer on average. |

| − | { | + | {Which statements apply to the entropy approximations? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + "TEMPLATE" and "SYNTHESIS" provide a nearly equal $H_1$. |

| − | + | + | + "TEMPLATE" and "SYNTHESIS" provide a nearly equal $H_2$. |

| − | + | + | + "TEMPLATE" and "SYNTHESIS" provide a nearly equal $H_3$. |

| − | - | + | - "TEMPLATE" and "SYNTHESIS" provide a nearly equal $H_4$. |

| − | { | + | {Which statements are true for the "English" text? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + Most words begin with "t". |

| − | - | + | - Most words end with "t". |

| − | { | + | {Which statements could be true for the "German" text? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + After "de", "r" is most likely. |

| − | + | + | + After "da", "s" is most likely. |

| − | + | + | + After "di", "e" is most likely. |

| Line 88: | Line 88: | ||

</quiz> | </quiz> | ||

| − | === | + | ===Solution=== |

{{ML-Kopf}} | {{ML-Kopf}} | ||

| − | '''(1)''' | + | '''(1)''' The correct solution is <u>suggestion 1</u>. |

| + | *In $\text{File 1}$ you can recognise many English words, in $\text{File 2}$ many German words. | ||

| + | *Neither text makes sense. | ||

| + | |||

| + | |||

| + | '''(2)''' <u>Suggestion 2</u> is correct. The estimates of Shannon and Küpfmüller confirm our result: | ||

| + | *The probability of a blank space "BS" in $\text{File 1}$ (English) is $19.8\%$. | ||

| + | *So on average every $1/0.198 = 5.05$–th character is "BS". | ||

| + | *The average word length is therefore | ||

| + | :$$L_{\rm M} = \frac{1}{0.198}-1 \approx 4.05\,{\rm characters}\hspace{0.05cm}.$$ | ||

| + | *Correspondingly, for $\text{File 2}$ (German): | ||

| + | :$$L_{\rm M} = \frac{1}{0.176}-1 \approx 4.68\,{\rm characters}\hspace{0.05cm}.$$ | ||

| + | |||


| + | '''(3)''' The <u>first three statements</u> are correct, but not statement '''(4)''': | ||

| + | *To determine the entropy approximation $H_k$ , $k$–tuples must be evaluated, for example, for $k = 3$ the triples "aaa", "aab", .... | ||

| + | *According to the generation rule "New character depends on the two predecessors", $H_1$, $H_2$ and $H_3$ of "TEMPLATE" and "SYNTHESIS" will match, <br>but only approximately due to the finite file length. | ||

| + | *In contrast, the $H_4$ approximations differ more strongly because the third predecessor is not taken into account during generation. | ||

| + | *It is only known that $H_4 < H_3$ must also apply with regard to "SYNTHESIS". | ||


| − | '''(4)''' | + | '''(4)''' Only <u>statement 1</u> is correct here: |

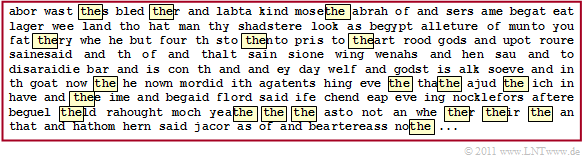

| − | * | + | [[File:Inf_A_1_8d_vers2.png|right|frame|Occurrence of "...the..." in the English text]] |

| − | * | + | *After a "BS" (beginning of a word), "t" follows with $17.8\%$, while at the end of a word (before a space), "t" occurs only with the frequency $8.3\%$. |

| − | * | + | *Overall, the probability of "t" averaged over all positions in the word is $7.4\%$. |

| + | *After "BS" and "t", "h" follows with almost $82\%$, and after "th", "e" is most likely with $62\%$. | ||

| + | *This suggests that "the" occurs more often than average in an English text and thus also in the synthetic $\text{File 1}$, as the following graph shows. | ||

| + | *However, not every marked "the" occurs in isolation, that is, immediately preceded and followed by a space. | ||

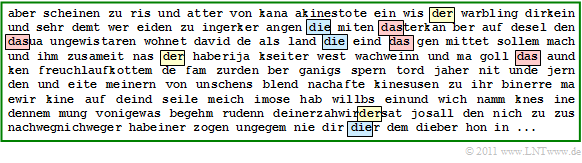

| + | [[File:Inf_A_1_8e_vers2.png|right|frame|Occurrence of "der", "die", "das" in the German text]] | ||

| − | |||

| − | '''(5)''' <u> | + | '''(5)''' <u>All statements</u> are true: |

| − | * | + | *After "de", "r" is indeed most likely $(32.8\%)$, followed by "n" $(28.5\%)$, "m" $(9.7\%)$ and "s" $(9.3\%)$. |

| − | * | + | *The words "der", "den", "dem" and "des" could be responsible for this. |

| − | * | + | * "da" is most likely followed by "s" $(48.2\%)$. |

| − | * | + | * After "di" follows "e" with the highest probability $(78.7\%)$. |

| − | |||

| − | |||

| + | The graph shows $\text{File 2}$ with all occurrences of "der", "die", "das". | ||

| + | |||

{{ML-Fuß}} | {{ML-Fuß}} | ||

| − | [[Category: | + | [[Category:Information Theory: Exercises|^1.3 Natural Discrete Sources^]] |

Latest revision as of 13:07, 10 August 2021

With the Windows programme "Discrete-Value Information Theory" from the Chair of Communications Engineering at the TU Munich

- one can determine the frequencies of character triplets such as "aaa", "aab", ... , "xyz", ... from a given text file "TEMPLATE" and save them in an auxiliary file,

- then create a file "SYNTHESIS" whereby the new character is generated from the last two generated characters and the stored triple frequencies.
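The two steps above can be sketched in Python. This is only a minimal illustration of the idea, not the Windows programme itself: the function names `count_triples` and `synthesize`, the random start pair and the restart on a dead end are all assumptions made for this sketch.

```python
import random
from collections import Counter, defaultdict


def count_triples(template: str) -> dict:
    """Map each character pair to a Counter of the characters that follow it."""
    followers = defaultdict(Counter)
    for i in range(len(template) - 2):
        pair, nxt = template[i:i + 2], template[i + 2]
        followers[pair][nxt] += 1
    return followers


def synthesize(template: str, length: int, seed: int = 0) -> str:
    """Generate text in which each new character depends on the last two."""
    rng = random.Random(seed)
    followers = count_triples(template)
    pairs = list(followers)
    out = list(rng.choice(pairs))  # assumed start: a random pair from the template
    while len(out) < length:
        counter = followers.get("".join(out[-2:]))
        if not counter:  # dead end: this pair was only seen at the template's end
            out.extend(rng.choice(pairs))
            continue
        chars, weights = zip(*counter.items())
        # Draw the next character in proportion to the stored triple frequencies.
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out[:length])
```

Calling `synthesize(template, 1000)` on a long template text produces output that looks locally like the template language, because every generated triple (apart from restarts) also occurs in the template.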

Starting with the German and English Bible translations, we have thus synthesised two files, which are indicated in the graphic:

- $\text{File 1}$ (red border),

- $\text{File 2}$ (green border).

It is not indicated which file comes from which template. Determining this is your first task.

The two templates are based on the natural alphabet $(26$ letters$)$ and the "Blank Space" ("BS") ⇒ $M = 27$. In the German Bible, the umlauts have been replaced, for example "ä" ⇒ "ae".

$\text{File 1}$ has the following characteristics:

- The most frequent characters are "BS" with $19.8\%$, followed by "e" with $10.2\%$ and "a" with $8.5\%$.

- After "BS", "t" occurs most frequently with $17.8\%$.

- Before "BS", "d" is most likely.

- The entropy approximations in each case with the unit "bit/character" were determined as follows:

- $$H_0 = 4.76\hspace{0.05cm},\hspace{0.2cm} H_1 = 4.00\hspace{0.05cm},\hspace{0.2cm} H_2 = 3.54\hspace{0.05cm},\hspace{0.2cm} H_3 = 3.11\hspace{0.05cm},\hspace{0.2cm} H_4 = 2.81\hspace{0.05cm}. $$

In contrast, the analysis of $\text{File 2}$ yields:

- The most frequent characters are "BS" with $17.6\%$ followed by "e" with $14.4\%$ and "n" with $8.9\%$.

- After "BS", "d" is most likely $(15.1\%)$ followed by "s" with $10.8\%$.

- After "BS" and "d", the vowels "e" $(48.3\%)$, "i" $(23\%)$ and "a" $(20.2\%)$ are dominant.

- The entropy approximations differ only slightly from those of $\text{File 1}$.

- For larger $k$–values, these are slightly larger, for example $H_3 = 3.17$ instead of $H_3 = 3.11$.

Hints:

- The exercise belongs to the chapter Natural Discrete Sources.

- Reference is also made to the page Synthetically Generated Texts.

Questions

Solution

(1) The correct solution is suggestion 1:

- In $\text{File 1}$ you can recognise many English words, in $\text{File 2}$ many German words.

- Neither text makes sense.

(2) Suggestion 2 is correct. The estimates of Shannon and Küpfmüller confirm our result:

- The probability of a blank space "BS" in $\text{File 1}$ (English) is $19.8\%$.

- So on average every $1/0.198 = 5.05$–th character is "BS".

- The average word length is therefore

- $$L_{\rm M} = \frac{1}{0.198}-1 \approx 4.05\,{\rm characters}\hspace{0.05cm}.$$

- Correspondingly, for $\text{File 2}$ (German):

- $$L_{\rm M} = \frac{1}{0.176}-1 \approx 4.68\,{\rm characters}\hspace{0.05cm}.$$
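The same arithmetic can be checked with a few lines of Python. The helper name `mean_word_length` is chosen for this sketch only; it simply evaluates $1/p_{\rm BS} - 1$ for the two blank-space probabilities given in the exercise.

```python
def mean_word_length(p_blank: float) -> float:
    """Average word length in characters, given the blank-space probability."""
    if not 0.0 < p_blank <= 1.0:
        raise ValueError("p_blank must lie in (0, 1]")
    # On average every (1/p_blank)-th character is a blank; the rest form the word.
    return 1.0 / p_blank - 1.0


print(round(mean_word_length(0.198), 2))  # File 1 (English): 4.05
print(round(mean_word_length(0.176), 2))  # File 2 (German): 4.68
```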

(3) The first three statements are correct, but not statement (4):

- To determine the entropy approximation $H_k$ , $k$–tuples must be evaluated, for example, for $k = 3$ the triples "aaa", "aab", ....

- According to the generation rule "New character depends on the two predecessors", $H_1$, $H_2$ and $H_3$ of "TEMPLATE" and "SYNTHESIS" will match, but only approximately due to the finite file length.

- In contrast, the $H_4$ approximations differ more strongly because the third predecessor is not taken into account during generation.

- It is only known that $H_4 < H_3$ must also apply with regard to "SYNTHESIS".
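How such entropy approximations might be estimated from a text file can be sketched as follows. The sketch assumes the usual definition, namely $H_0 = \log_2 M$ and, for $k \ge 1$, the entropy of the relative frequencies of overlapping $k$–tuples divided by $k$; the function name `entropy_approximation` is hypothetical and not taken from the programme.

```python
import math
from collections import Counter


def entropy_approximation(text: str, k: int, alphabet_size: int = 27) -> float:
    """Estimate the k-th entropy approximation H_k in bit/character.

    H_0 is the decision content log2(M). For k >= 1, H_k is the entropy of
    the relative frequencies of overlapping k-tuples, divided by k.
    """
    if k == 0:
        return math.log2(alphabet_size)
    tuples = Counter(text[i:i + k] for i in range(len(text) - k + 1))
    total = sum(tuples.values())
    h = -sum(n / total * math.log2(n / total) for n in tuples.values())
    return h / k
```

Applying this function with $k = 1, 2, 3$ to "TEMPLATE" and "SYNTHESIS" should give similar values, whereas for $k = 4$ a larger gap is to be expected, since the synthesis uses only triple statistics.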

(4) Only statement 1 is correct here:

- After a "BS" (beginning of a word), "t" follows with $17.8\%$, while at the end of a word (before a space), "t" occurs only with the frequency $8.3\%$.

- Overall, the probability of "t" averaged over all positions in the word is $7.4\%$.

- After "BS" and "t", "h" follows with almost $82\%$, and after "th", "e" is most likely with $62\%$.

- This suggests that "the" occurs more often than average in an English text and thus also in the synthetic $\text{File 1}$, as the following graph shows.

- However, not every marked "the" occurs in isolation, that is, immediately preceded and followed by a space.

(5) All statements are true:

- After "de", "r" is indeed most likely $(32.8\%)$, followed by "n" $(28.5\%)$, "m" $(9.7\%)$ and "s" $(9.3\%)$.

- The words "der", "den", "dem" and "des" could be responsible for this.

- "da" is most likely followed by "s" $(48.2\%)$.

- After "di" follows "e" with the highest probability $(78.7\%)$.
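Conditional frequencies of this kind can be read off a text with a short helper. The following is a sketch under the assumption that the text is available as a plain string; `next_char_distribution` is a name introduced here for illustration.

```python
from collections import Counter


def next_char_distribution(text: str, prefix: str) -> dict:
    """Relative frequencies of the character following each occurrence of prefix."""
    n = len(prefix)
    counts = Counter(
        text[i + n] for i in range(len(text) - n) if text[i:i + n] == prefix
    )
    total = sum(counts.values())
    # most_common() orders the result from the most to the least frequent follower.
    return {ch: c / total for ch, c in counts.most_common()} if total else {}
```

For example, `next_char_distribution(text, "de")` lists the followers of "de" in descending order of frequency, and a prefix of `" d"` covers the case "after BS and d" from the problem statement.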

The graph shows $\text{File 2}$ with all occurrences of "der", "die", "das".