Difference between revisions of "Aufgaben:Exercise 1.1: Entropy of the Weather"

| (24 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{quiz-Header|Buchseite= | + | {{quiz-Header|Buchseite=Information_Theory/Discrete_Memoryless_Sources |

}} | }} | ||

| − | [[File: | + | [[File:EN_Inf_A_1_1_v2.png|right|frame|Five different binary sources]] |

| − | + | A weather station queries different regions every day and receives a message $x$ back as a response in each case, namely | |

| − | * $x = \rm B$: | + | * $x = \rm B$: The weather is rather bad. |

| − | * $x = \rm G$: | + | * $x = \rm G$: The weather is rather good. |

| − | + | The data were stored in files over many years for different regions, so that the entropies of the $\rm B/G$–sequences can be determined: | |

:$$H = p_{\rm B} \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{p_{\rm B}} + p_{\rm G} \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{p_{\rm G}}$$ | :$$H = p_{\rm B} \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{p_{\rm B}} + p_{\rm G} \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{p_{\rm G}}$$ | ||

| − | + | with the base-2 logarithm | |

| − | :$${\rm log}_2\hspace{0.1cm}p=\frac{{\rm lg}\hspace{0.1cm}p}{{\rm lg}\hspace{0.1cm}2 | + | :$${\rm log}_2\hspace{0.1cm}p=\frac{{\rm lg}\hspace{0.1cm}p}{{\rm lg}\hspace{0.1cm}2}.$$ |

| − | & | + | Here, "lg" denotes the logarithm to the base $10$. It should also be mentioned that the pseudo-unit $\text{bit/enquiry}$ must be added in each case. |

| − | + | The graph shows these binary sequences for $60$ days and the following regions: | |

| − | * Region & | + | * Region "Mixed": $p_{\rm B} = p_{\rm G} =0.5$, |

| − | * Region & | + | * Region "Rainy": $p_{\rm B} = 0.8, \; p_{\rm G} =0.2$, |

| − | * Region & | + | * Region "Enjoyable": $p_{\rm B} = 0.2, \; p_{\rm G} =0.8$, |

| − | * Region & | + | * Region "Paradise": $p_{\rm B} = 1/30, \; p_{\rm G} =29/30$. |

| − | + | Finally, the file "Unknown" is also given, whose statistical properties are to be estimated. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | + | |

| + | |||

| + | |||

| + | |||

| + | ''Hints:'' | ||

| + | *This task belongs to the chapter [[Information_Theory/Gedächtnislose_Nachrichtenquellen|Discrete Memoryless Sources]]. | ||

| + | |||

| + | *For the first four files it is assumed that successive symbols $\rm B$ and $\rm G$ are statistically independent of each other, a rather unrealistic assumption for real weather. | ||

| + | |||

| + | *The task was designed at a time when [https://en.wikipedia.org/wiki/Greta_Thunberg Greta] was just starting school. We leave it to you to rename "Paradise" to "Hell". | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | ===Questions=== | ||

<quiz display=simple> | <quiz display=simple> | ||

| − | { | + | {What is the entropy $H_{\rm M}$ of the file "Mixed"? |

|type="{}"} | |type="{}"} | ||

| − | $H_{\rm | + | $H_{\rm M}\ = \ $ { 1 3% } $\ \rm bit/enquiry$ |

| − | { | + | {What is the entropy $H_{\rm R}$ of the file "Rainy"? |

|type="{}"} | |type="{}"} | ||

| − | $H_{\rm R}\ = $ { 0.722 3% } $\ \rm bit/ | + | $H_{\rm R}\ = \ $ { 0.722 3% } $\ \rm bit/enquiry$ |

| − | { | + | {What is the entropy $H_{\rm E}$ of the file "Enjoyable"? |

|type="{}"} | |type="{}"} | ||

| − | $H_{\rm | + | $H_{\rm E}\ = \ $ { 0.722 3% } $\ \rm bit/enquiry$ |

| − | { | + | {How large are the information contents of events $\rm B$ and $\rm G$ in relation to the file "Paradise"? |

|type="{}"} | |type="{}"} | ||

| − | $I_{\rm B}\ = $ { 4.907 3% } $\ \rm bit/ | + | $I_{\rm B}\ = \ $ { 4.907 3% } $\ \rm bit/enquiry$ |

| − | $I_{\rm G}\ = $ { 0.049 3% } $\ \rm bit/ | + | $I_{\rm G}\ = \ $ { 0.049 3% } $\ \rm bit/enquiry$ |

| − | { | + | {What is the entropy (that is: the average information content) $H_{\rm P}$ of the file "Paradise"? Interpret the result. |

|type="{}"} | |type="{}"} | ||

| − | $H_{\rm P}\ = $ { 0.211 3% } $\ \rm bit/ | + | $H_{\rm P}\ = \ $ { 0.211 3% } $\ \rm bit/enquiry$ |

| − | { | + | {Which statements could be true for the file "Unknown"? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + Events $\rm B$ and $\rm G$ are approximately equally probable. |

| − | - | + | - The sequence elements are statistically independent of each other. |

| − | + | + | + The entropy of this file is $H_\text{U} \approx 0.7 \; \rm bit/enquiry$. |

| − | - | + | - The entropy of this file is $H_\text{U} = 1.5 \; \rm bit/enquiry$. |

| Line 74: | Line 84: | ||

</quiz> | </quiz> | ||

| − | === | + | ===Solution=== |

{{ML-Kopf}} | {{ML-Kopf}} | ||

| − | '''(1)''' | + | '''(1)''' For the file "Mixed" the two probabilities are the same: $p_{\rm B} = p_{\rm G} =0.5$. This gives us for the entropy: |

| − | :$$H_{\rm | + | :$$H_{\rm M} = 0.5 \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{0.5} + 0.5 \cdot |

| − | {\rm log}_2\hspace{0.1cm}\frac{1}{0.5} \hspace{0.15cm}\underline {= 1\,{\rm bit/ | + | {\rm log}_2\hspace{0.1cm}\frac{1}{0.5} \hspace{0.15cm}\underline {= 1\,{\rm bit/enquiry}}\hspace{0.05cm}.$$ |

| + | |||

| + | |||

| + | '''(2)''' With $p_{\rm B} = 0.8$ and $p_{\rm G} =0.2$, a smaller entropy value is obtained: | ||

| + | :$$H_{\rm R} \hspace{-0.05cm}= \hspace{-0.05cm}0.8 \cdot {\rm log}_2\hspace{0.05cm}\frac{5}{4} \hspace{-0.05cm}+ \hspace{-0.05cm}0.2 \cdot {\rm log}_2\hspace{0.05cm}\frac{5}{1}\hspace{-0.05cm}=\hspace{-0.05cm} | ||

| + | 0.8 \cdot{\rm log}_2\hspace{0.05cm}5\hspace{-0.05cm} - \hspace{-0.05cm}0.8 \cdot {\rm log}_2\hspace{0.05cm}4 \hspace{-0.05cm}+ \hspace{-0.05cm}0.2 \cdot {\rm log}_2 \hspace{0.05cm} 5 \hspace{-0.05cm}=\hspace{-0.05cm} | ||

| + | {\rm log}_2\hspace{0.05cm}5\hspace{-0.05cm} -\hspace{-0.05cm} 0.8 \cdot | ||

| + | {\rm log}_2\hspace{0.1cm}4\hspace{-0.05cm} = \hspace{-0.05cm} \frac{{\rm lg} \hspace{0.1cm}5}{{\rm lg}\hspace{0.1cm}2} \hspace{-0.05cm}-\hspace{-0.05cm} 1.6 \hspace{0.15cm} | ||

| + | \underline {= 0.722\,{\rm bit/enquiry}}\hspace{0.05cm}.$$ | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | '''(3)''' In | + | '''(3)''' In the file "Enjoyable" the probabilities are exactly swapped compared to the file "Rainy". However, this swap does not change the entropy: |

| − | :$$H_{\rm | + | :$$H_{\rm E} = H_{\rm R} \hspace{0.15cm} \underline {= 0.722\,{\rm bit/enquiry}}\hspace{0.05cm}.$$ |

| − | '''(4)''' | + | |

| + | '''(4)''' With $p_{\rm B} = 1/30$ and $p_{\rm G} =29/30$, the information contents are as follows: | ||

:$$I_{\rm B} \hspace{0.1cm} = \hspace{0.1cm} {\rm log}_2\hspace{0.1cm}30 = | :$$I_{\rm B} \hspace{0.1cm} = \hspace{0.1cm} {\rm log}_2\hspace{0.1cm}30 = | ||

\frac{{\rm lg}\hspace{0.1cm}30}{{\rm lg}\hspace{0.1cm}2} = \frac{1.477}{0.301} \hspace{0.15cm} | \frac{{\rm lg}\hspace{0.1cm}30}{{\rm lg}\hspace{0.1cm}2} = \frac{1.477}{0.301} \hspace{0.15cm} | ||

| − | \underline {= 4.907\,{\rm bit/ | + | \underline {= 4.907\,{\rm bit/enquiry}}\hspace{0.05cm},$$ |

:$$I_{\rm G} \hspace{0.1cm} = \hspace{0.1cm} {\rm log}_2\hspace{0.1cm}\frac{30}{29} = | :$$I_{\rm G} \hspace{0.1cm} = \hspace{0.1cm} {\rm log}_2\hspace{0.1cm}\frac{30}{29} = | ||

\frac{{\rm lg}\hspace{0.1cm}1.0345}{{\rm lg}\hspace{0.1cm}2} = \frac{0.0147}{0.301} \hspace{0.15cm} | \frac{{\rm lg}\hspace{0.1cm}1.0345}{{\rm lg}\hspace{0.1cm}2} = \frac{0.0147}{0.301} \hspace{0.15cm} | ||

| − | \underline {= 0.049\,{\rm bit/ | + | \underline {= 0.049\,{\rm bit/enquiry}}\hspace{0.05cm}.$$ |

| + | |||

| − | '''(5)''' | + | '''(5)''' The entropy $H_{\rm P}$ is the average information content of the two events $\rm B$ and $\rm G$: |

:$$H_{\rm P} = \frac{1}{30} \cdot 4.907 + \frac{29}{30} \cdot 0.049 = 0.164 + 0.047 | :$$H_{\rm P} = \frac{1}{30} \cdot 4.907 + \frac{29}{30} \cdot 0.049 = 0.164 + 0.047 | ||

\hspace{0.15cm} | \hspace{0.15cm} | ||

| − | \underline {= 0.211\,{\rm bit/ | + | \underline {= 0.211\,{\rm bit/enquiry}}\hspace{0.05cm}.$$ |

| − | + | *Although (more precisely: because) event $\rm B$ occurs less frequently than $\rm G$, its contribution to entropy is much greater. | |

| − | '''(6)''' | + | '''(6)''' Statements <u>1 and 3</u> are correct: |

| − | * | + | *$\rm B$ and $\rm G$ are indeed equally probable in the "Unknown" file: The $60$ symbols shown comprise $30$ times $\rm B$ and $30$ times $\rm G$. |

| − | * | + | *However, there are now strong statistical dependencies within the temporal sequence: long periods of good weather are usually followed by many bad days in a row. |

| − | * | + | *Because of this statistical dependence within the $\rm B/G$ sequence, $H_\text{U} = 0.722 \; \rm bit/enquiry$ is smaller than $H_\text{M} = 1 \; \rm bit/enquiry$. |

| − | *$H_\text{ | + | *$H_\text{M}$ is at the same time the maximum for $M = 2$ ⇒ the last statement is certainly wrong. |

{{ML-Fuß}} | {{ML-Fuß}} | ||

| − | [[Category: | + | [[Category:Information Theory: Exercises|^1.1 Memoryless Sources^]] |

Latest revision as of 12:59, 10 August 2021

A weather station queries different regions every day and receives a message $x$ back as a response in each case, namely

- $x = \rm B$: The weather is rather bad.

- $x = \rm G$: The weather is rather good.

The data were stored in files over many years for different regions, so that the entropies of the $\rm B/G$–sequences can be determined:

- $$H = p_{\rm B} \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{p_{\rm B}} + p_{\rm G} \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{p_{\rm G}}$$

with the base-2 logarithm

- $${\rm log}_2\hspace{0.1cm}p=\frac{{\rm lg}\hspace{0.1cm}p}{{\rm lg}\hspace{0.1cm}2}.$$

Here, "lg" denotes the logarithm to the base $10$. It should also be mentioned that the pseudo-unit $\text{bit/enquiry}$ must be added in each case.
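The entropy formula and the change of base can be sketched in a few lines of Python. This is a minimal illustration, not part of the original exercise; the function name `binary_entropy` and the example value $p_{\rm B} = 0.25$ are chosen here purely for demonstration.

```python
import math

def binary_entropy(p_b: float) -> float:
    """Entropy in bit/enquiry of a memoryless binary source with P(B) = p_b."""
    p_g = 1.0 - p_b
    h = 0.0
    for p in (p_b, p_g):
        if p > 0.0:  # a term with p = 0 contributes nothing (p * log(1/p) -> 0)
            # log2(1/p) computed via the base-10 logarithm: lg(1/p) / lg(2)
            h += p * math.log10(1.0 / p) / math.log10(2.0)
    return h

# Hypothetical example value, not one of the regions in this exercise
print(f"{binary_entropy(0.25):.3f} bit/enquiry")
```

In practice `math.log2` could be used directly; the detour via `math.log10` only mirrors the conversion formula given above.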

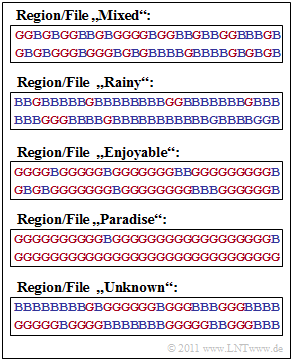

The graph shows these binary sequences for $60$ days and the following regions:

- Region "Mixed": $p_{\rm B} = p_{\rm G} =0.5$,

- Region "Rainy": $p_{\rm B} = 0.8, \; p_{\rm G} =0.2$,

- Region "Enjoyable": $p_{\rm B} = 0.2, \; p_{\rm G} =0.8$,

- Region "Paradise": $p_{\rm B} = 1/30, \; p_{\rm G} =29/30$.

Finally, the file "Unknown" is also given, whose statistical properties are to be estimated.

Hints:

- This task belongs to the chapter Discrete Memoryless Sources.

- For the first four files it is assumed that successive symbols $\rm B$ and $\rm G$ are statistically independent of each other, a rather unrealistic assumption for real weather.

- The task was designed at a time when Greta was just starting school. We leave it to you to rename "Paradise" to "Hell".

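Questions

(1) What is the entropy $H_{\rm M}$ of the file "Mixed"?

(2) What is the entropy $H_{\rm R}$ of the file "Rainy"?

(3) What is the entropy $H_{\rm E}$ of the file "Enjoyable"?

(4) How large are the information contents $I_{\rm B}$ and $I_{\rm G}$ of the events $\rm B$ and $\rm G$ in relation to the file "Paradise"?

(5) What is the entropy (that is: the average information content) $H_{\rm P}$ of the file "Paradise"? Interpret the result.

(6) Which statements could be true for the file "Unknown"?

- Events $\rm B$ and $\rm G$ are approximately equally probable.

- The sequence elements are statistically independent of each other.

- The entropy of this file is $H_\text{U} \approx 0.7 \; \rm bit/enquiry$.

- The entropy of this file is $H_\text{U} = 1.5 \; \rm bit/enquiry$.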

Solution

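(1) For the file "Mixed" the two probabilities are the same: $p_{\rm B} = p_{\rm G} =0.5$. This gives the entropy:

- $$H_{\rm M} = 0.5 \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{0.5} + 0.5 \cdot {\rm log}_2\hspace{0.1cm}\frac{1}{0.5} \hspace{0.15cm}\underline {= 1\,{\rm bit/enquiry}}\hspace{0.05cm}.$$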

(2) With $p_{\rm B} = 0.8$ and $p_{\rm G} =0.2$, a smaller entropy value is obtained:

- $$H_{\rm R} \hspace{-0.05cm}= \hspace{-0.05cm}0.8 \cdot {\rm log}_2\hspace{0.05cm}\frac{5}{4} \hspace{-0.05cm}+ \hspace{-0.05cm}0.2 \cdot {\rm log}_2\hspace{0.05cm}\frac{5}{1}\hspace{-0.05cm}=\hspace{-0.05cm} 0.8 \cdot{\rm log}_2\hspace{0.05cm}5\hspace{-0.05cm} - \hspace{-0.05cm}0.8 \cdot {\rm log}_2\hspace{0.05cm}4 \hspace{-0.05cm}+ \hspace{-0.05cm}0.2 \cdot {\rm log}_2 \hspace{0.05cm} 5 \hspace{-0.05cm}=\hspace{-0.05cm} {\rm log}_2\hspace{0.05cm}5\hspace{-0.05cm} -\hspace{-0.05cm} 0.8 \cdot {\rm log}_2\hspace{0.1cm}4\hspace{-0.05cm} = \hspace{-0.05cm} \frac{{\rm lg} \hspace{0.1cm}5}{{\rm lg}\hspace{0.1cm}2} \hspace{-0.05cm}-\hspace{-0.05cm} 1.6 \hspace{0.15cm} \underline {= 0.722\,{\rm bit/enquiry}}\hspace{0.05cm}.$$

(3) In the file "Enjoyable" the probabilities are exactly swapped compared to the file "Rainy". However, this swap does not change the entropy:

- $$H_{\rm E} = H_{\rm R} \hspace{0.15cm} \underline {= 0.722\,{\rm bit/enquiry}}\hspace{0.05cm}.$$
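The results of (2) and (3) can be checked numerically. The following sketch assumes a small helper `entropy` (a name introduced here, not taken from the exercise) and compares both files with the closed form ${\rm log}_2\hspace{0.05cm}5 - 1.6$ from the derivation in (2).

```python
import math

def entropy(probs):
    """Entropy in bit/enquiry of a memoryless source with the given probabilities."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0.0)

h_rainy = entropy([0.8, 0.2])      # file "Rainy":     p_B = 0.8, p_G = 0.2
h_enjoyable = entropy([0.2, 0.8])  # file "Enjoyable": probabilities swapped
closed_form = math.log2(5) - 1.6   # simplified expression from solution (2)

print(f"H_R = {h_rainy:.3f}, H_E = {h_enjoyable:.3f}, log2(5) - 1.6 = {closed_form:.3f}")
```

Swapping the two probabilities only reorders the summands, which is why both files yield the same value.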

(4) With $p_{\rm B} = 1/30$ and $p_{\rm G} =29/30$, the information contents are as follows:

- $$I_{\rm B} \hspace{0.1cm} = \hspace{0.1cm} {\rm log}_2\hspace{0.1cm}30 = \frac{{\rm lg}\hspace{0.1cm}30}{{\rm lg}\hspace{0.1cm}2} = \frac{1.477}{0.301} \hspace{0.15cm} \underline {= 4.907\,{\rm bit/enquiry}}\hspace{0.05cm},$$

- $$I_{\rm G} \hspace{0.1cm} = \hspace{0.1cm} {\rm log}_2\hspace{0.1cm}\frac{30}{29} = \frac{{\rm lg}\hspace{0.1cm}1.0345}{{\rm lg}\hspace{0.1cm}2} = \frac{0.0147}{0.301} \hspace{0.15cm} \underline {= 0.049\,{\rm bit/enquiry}}\hspace{0.05cm}.$$

(5) The entropy $H_{\rm P}$ is the average information content of the two events $\rm B$ and $\rm G$:

- $$H_{\rm P} = \frac{1}{30} \cdot 4.907 + \frac{29}{30} \cdot 0.049 = 0.164 + 0.047 \hspace{0.15cm} \underline {= 0.211\,{\rm bit/enquiry}}\hspace{0.05cm}.$$

- Although (more precisely: because) event $\rm B$ occurs less frequently than $\rm G$, its contribution to entropy is much greater.
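To make the interpretation in (5) concrete, the sketch below splits $H_{\rm P}$ into the contributions of the two events. The variable names (`info`, `contribution`) are illustrative choices, not notation from the exercise.

```python
import math

# File "Paradise": probabilities of the two events
p = {"B": 1 / 30, "G": 29 / 30}

# Information content of each event: I_x = log2(1 / p_x)
info = {x: math.log2(1.0 / p_x) for x, p_x in p.items()}

# Contribution of each event to the entropy: p_x * I_x
contribution = {x: p[x] * info[x] for x in p}

h_p = sum(contribution.values())

for x in p:
    print(f"{x}: I = {info[x]:.3f}, p * I = {contribution[x]:.3f}")
print(f"H_P = {h_p:.3f} bit/enquiry")
```

The rare event $\rm B$ carries about a hundred times more information per occurrence than $\rm G$, which outweighs its low probability in the weighted sum.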

(6) Statements 1 and 3 are correct:

- $\rm B$ and $\rm G$ are indeed equally probable in the "Unknown" file: The $60$ symbols shown comprise $30$ times $\rm B$ and $30$ times $\rm G$.

- However, there are now strong statistical dependencies within the temporal sequence: long periods of good weather are usually followed by many bad days in a row.

- Because of this statistical dependence within the $\rm B/G$ sequence, $H_\text{U} = 0.722 \; \rm bit/enquiry$ is smaller than $H_\text{M} = 1 \; \rm bit/enquiry$.

- $H_\text{M}$ is at the same time the maximum for $M = 2$ ⇒ the last statement is certainly wrong.
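The effect of statistical dependence can be illustrated with a rough sketch. The sequence below is a made-up example with $30$ times $\rm B$ and $30$ times $\rm G$ arranged in long runs; it is not the actual "Unknown" file. The function `conditional_entropy` is a simple plug-in estimate of $H(X_n \,|\, X_{n-1})$ from relative frequencies of adjacent pairs, which is one possible way to capture first-order dependence and not necessarily how $H_\text{U}$ was obtained in the exercise.

```python
import math
from collections import Counter

def symbol_entropy(seq: str) -> float:
    """Entropy estimate that ignores dependencies (relative symbol frequencies only)."""
    counts = Counter(seq)
    n = len(seq)
    return sum(c / n * math.log2(n / c) for c in counts.values())

def conditional_entropy(seq: str) -> float:
    """Plug-in estimate of H(X_n | X_{n-1}) from adjacent symbol pairs."""
    pairs = Counter(zip(seq, seq[1:]))   # counts of (previous, current) pairs
    prev = Counter(seq[:-1])             # counts of the conditioning symbol
    total = len(seq) - 1
    h = 0.0
    for (a, _b), n_ab in pairs.items():
        p_ab = n_ab / total              # joint relative frequency of the pair
        p_b_given_a = n_ab / prev[a]     # conditional relative frequency
        h += p_ab * math.log2(1.0 / p_b_given_a)
    return h

# Hypothetical 60-day sequence: runs of five bad days, then five good days
sequence = "BBBBBGGGGG" * 6

h0 = symbol_entropy(sequence)        # equally probable symbols give the maximum of 1 bit
h1 = conditional_entropy(sequence)   # dependence between neighbours lowers the estimate

print(f"without dependencies: {h0:.3f}, with first-order dependence: {h1:.3f}")
```

For this artificial sequence the symbol frequencies alone suggest the maximum of $1 \; \rm bit/enquiry$, while the estimate that accounts for neighbouring symbols is clearly smaller.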