Difference between revisions of "Aufgaben:Exercise 1.7: Entropy of Natural Texts"

Revision as of 15:15, 22 June 2021

In the early 1950s, Claude E. Shannon estimated the entropy $H$ of the English language at one bit per character. A short time later, Karl Küpfmüller arrived at an entropy value of $H =1.3\ \rm bit/character$, i.e. a somewhat larger value, in an empirical study of the German language. Interestingly, the results of Shannon and Küpfmüller are based on two completely different methods.

The differing results cannot be explained by the small difference in the symbol set size $M$:

- Shannon assumed $26$ letters and the space ⇒ $M = 27$.

- Küpfmüller assumed only $M = 26$ letters (i.e. without the "Blank Space").

Both made no distinction between upper and lower case.
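To see why the symbol set size cannot account for the gap, the decision content $H_0 = \log_2 M$ can be evaluated for both alphabets. The following is a minimal sketch; the function name `decision_content` is chosen here for illustration and does not appear in the exercise.

```python
import math


def decision_content(M: int) -> float:
    """Decision content H0 = log2(M) in bit/character for M equally likely symbols."""
    if M < 1:
        raise ValueError("M must be a positive integer")
    return math.log2(M)


# Shannon: 26 letters plus the space
H0_shannon = decision_content(27)
# Küpfmüller: 26 letters only
H0_kuepfmueller = decision_content(26)

# Difference in decision content caused by the extra space symbol
delta_H0 = H0_shannon - H0_kuepfmueller

print(f"H0 (M=27): {H0_shannon:.3f} bit/character")
print(f"H0 (M=26): {H0_kuepfmueller:.3f} bit/character")
print(f"difference: {delta_H0:.3f} bit/character")
```

The two decision contents differ by only about $0.05$ bit/character, which is small compared with the $0.3$ bit/character between the two entropy estimates.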

This task is intended to show how

- "Erasures" ⇒ the reader knows where an error is located, and

- "Errors" ⇒ the reader cannot tell which characters are right and which are wrong,

have an effect on the comprehensibility of a text. Our text also contains the typical German letters "ä", "ö", "ü" and "ß" as well as numbers and punctuation. In addition, a distinction is made between upper and lower case.

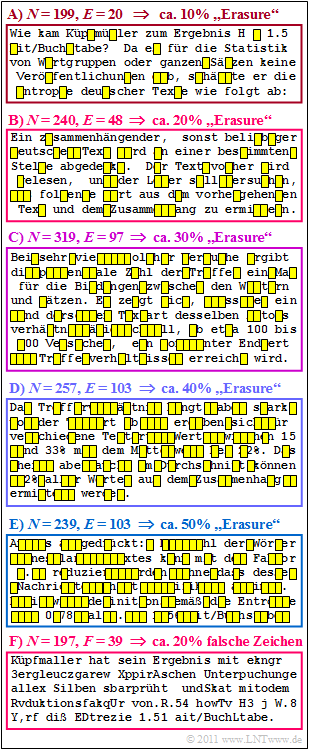

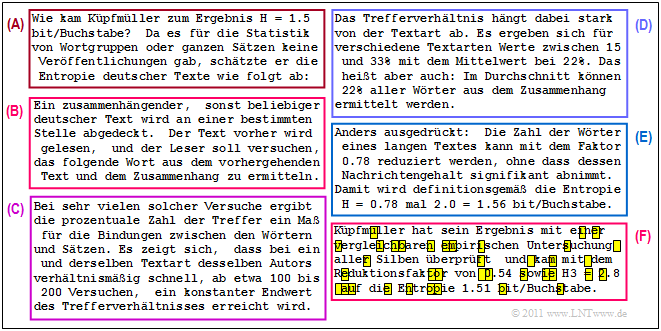

In the figure, a text dealing with Küpfmüller's approach is divided into six blocks of length between $N = 197$ and $N = 319$. The text describes how he checked his first analysis using a completely different method, which led to the result $H =1.51\ \rm bit/character$.

- In the upper five blocks one recognises "Erasures" with different erasure probabilities between $10\%$ and $50\%$.

- In the last block, "character errors" with an error probability of $20\%$ are inserted.

The influence of such character errors on the readability of a text is to be compared in subtask (4) with the second block (outlined in red), for which the probability of an erasure is also $20\%$.

Hints:

- The task belongs to the chapter Natural Discrete Sources.

- Reference is made in particular to the two pages "Entropy estimation according to Küpfmüller" and "Another entropy estimation by Küpfmüller".

- The relative redundancy of a sequence with decision content $H_0$ and entropy $H$ is

$$r = \frac{H_0 - H}{H_0}\hspace{0.05cm}.$$

Questions

Solution

In the given German text of this task, the symbol range is significantly larger,

- since the typical German characters "ä", "ö", "ü" and "ß" also occur,

- there is a distinction between upper and lower case,

- and there are also numbers and punctuation marks.

(2) With the decision content $H_0 = \log_2 \ (26) \approx 4.7 \ \rm bit/character$ and the entropy $H = 1.3\ \rm bit/character$, one obtains for the relative redundancy:

- $$r = \frac{H_0 - H}{H_0}= \frac{4.7 - 1.3}{4.7}\underline {\hspace{0.1cm}\approx 72.3\,\%}\hspace{0.05cm}.$$
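The arithmetic of subtask (2) can be checked numerically. This is a small sketch that assumes Küpfmüller's alphabet of $M = 26$ letters; the function name `relative_redundancy` is introduced here for illustration only.

```python
import math


def relative_redundancy(H0: float, H: float) -> float:
    """Relative redundancy r = (H0 - H) / H0."""
    if H0 <= 0:
        raise ValueError("H0 must be positive")
    return (H0 - H) / H0


H = 1.3  # Küpfmüller's entropy estimate in bit/character

# With the rounded decision content used in the solution
r_rounded = relative_redundancy(4.7, H)

# With the unrounded decision content for M = 26 (assumption: Küpfmüller's alphabet)
r_exact = relative_redundancy(math.log2(26), H)

print(f"r (H0 = 4.7):      {100 * r_rounded:.1f} %")
print(f"r (H0 = log2(26)): {100 * r_exact:.1f} %")
```

Both variants give roughly $72.3\%$, so rounding $H_0$ to $4.7$ does not change the result at the stated precision.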

(3) Only the first suggested solution is correct:

- According to Küpfmüller, one only needs $1.3$ binary characters per source character.

- For a file of length $N$, $1.3 \cdot N$ binary symbols would therefore be sufficient, but only if the source symbol sequence is infinitely long $(N \to \infty)$ and has been encoded in the best possible way.

- In contrast, Küpfmüller's result and the relative redundancy of more than $70\%$ calculated in subtask (2) do not mean that a reader can still understand the text if $70\%$ of the characters have been erased.

- Such a text is neither infinitely long nor has it been optimally encoded beforehand.
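To give a feeling for the numbers, the sketch below compares the asymptotic bound $1.3 \cdot N$ with a simple fixed-length binary code. It assumes an alphabet of $M = 26$ symbols and uses the longest block length $N = 319$ from the figure purely as an illustration; the function names are hypothetical.

```python
import math


def entropy_bound_bits(N: int, H: float = 1.3) -> float:
    """Binary symbols needed at H bit/character; reachable only for N -> infinity
    with optimal source coding."""
    return H * N


def fixed_length_bits(N: int, M: int = 26) -> int:
    """Binary symbols needed when every character gets ceil(log2(M)) bits."""
    return N * math.ceil(math.log2(M))


N = 319  # longest block in the figure, used here only as an example length

bound = entropy_bound_bits(N)
fixed = fixed_length_bits(N)

print(f"entropy bound:     {bound:.1f} binary symbols")
print(f"fixed-length code: {fixed} binary symbols")
```

The bound describes what an ideal encoder could achieve in the limit. It says nothing about how many characters of an uncoded, finite text may be erased while the text stays readable.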

(4) Statement 2 is correct:

- Test it yourself: the second block of the graphic on the information page is easier to decode than the last block, because you know where the errors are.

- If you want to keep trying: exactly the same sequence of error positions was used for the bottom block (F) as for block (B), that is, there are errors at characters $6$, $35$, $37$, and so on.
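The comparison between blocks (B) and (F) can be reproduced on any text by drawing one set of positions and applying it twice, once as erasures and once as character errors. The following is a minimal sketch; the sample sentence, the erasure mark `_`, the lowercase replacement alphabet and the fixed seed are assumptions for illustration and are not taken from the exercise.

```python
import random
import string


def pick_positions(n: int, p: float, rng: random.Random) -> list[int]:
    """Select each of the n positions (0-indexed) independently with probability p."""
    return [i for i in range(n) if rng.random() < p]


def apply_erasures(text: str, positions: list[int], mark: str = "_") -> str:
    """Replace the characters at the given positions by a visible erasure mark."""
    chars = list(text)
    for i in positions:
        chars[i] = mark
    return "".join(chars)


def apply_errors(text: str, positions: list[int], rng: random.Random,
                 alphabet: str = string.ascii_lowercase) -> str:
    """Replace the characters at the given positions by a different random character."""
    chars = list(text)
    for i in positions:
        candidates = [c for c in alphabet if c != chars[i]]
        chars[i] = rng.choice(candidates)
    return "".join(chars)


rng = random.Random(0)  # fixed seed so the run is repeatable
text = "the entropy of natural texts is surprisingly small"  # hypothetical sample

# Same positions for both distortions, probability 20 % each
positions = pick_positions(len(text), 0.2, rng)
erased = apply_erasures(text, positions)
corrupted = apply_errors(text, positions, rng)

print(erased)
print(corrupted)
```

Reading the two printed lines side by side shows the effect described above: the erasure marks tell the reader which characters to guess, while the corrupted line gives no such hint.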

Finally, the original text is given, which is only reproduced on the information page distorted by erasures or real character errors.