Difference between revisions of "Aufgaben:Exercise 4.2: Channel Log Likelihood Ratio at AWGN"

m (Guenter moved page Exercise 4.2: Channel LLR at AWGN to Exercise 4.2: Channel Log Likelihood Ratio at AWGN) |

|||

| Line 1: | Line 1: | ||

| − | {{quiz-Header|Buchseite= | + | {{quiz-Header|Buchseite=Channel_Coding/Soft-in_Soft-Out_Decoder}} |

| − | [[File:P_ID2980__KC_A_4_2_v2.png|right|frame| | + | [[File:P_ID2980__KC_A_4_2_v2.png|right|frame|Conditional Gaussian functions]] |

| − | + | We consider two channels $\rm A$ and $\rm B$, each with: | |

| − | * | + | * binary bipolar input $x ∈ \{+1, \, -1\}$, and |

| − | * | + | * continuous-valued output $y ∈ {\rm \mathcal{R}}$ (real number). |

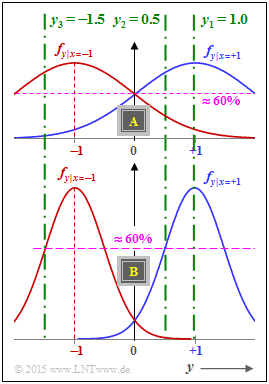

| − | + | The graph shows for both channels | |

| − | * | + | * as blue curve the density functions $f_{y\hspace{0.05cm}|\hspace{0.05cm}x=+1}$, |

| − | * | + | * as red curve the density functions $f_{y\hspace{0.05cm}|\hspace{0.05cm}x=-1}$. |

| − | + | In the [[Channel_Coding/Soft-in_Soft-Out_Decoder#Reliability_information_-_Log_Likelihood_Ratio| "theory section"]], the channel LLR (log likelihood ratio) was derived for this AWGN constellation as follows: | |

:$$L_{\rm K}(y) = L(y\hspace{0.05cm}|\hspace{0.05cm}x) = {\rm ln} \hspace{0.15cm} \frac{{\rm Pr}(y \hspace{0.05cm}|\hspace{0.05cm}x=+1) }{{\rm Pr}(y \hspace{0.05cm}|\hspace{0.05cm}x = -1)} | :$$L_{\rm K}(y) = L(y\hspace{0.05cm}|\hspace{0.05cm}x) = {\rm ln} \hspace{0.15cm} \frac{{\rm Pr}(y \hspace{0.05cm}|\hspace{0.05cm}x=+1) }{{\rm Pr}(y \hspace{0.05cm}|\hspace{0.05cm}x = -1)} | ||

\hspace{0.05cm}.$$ | \hspace{0.05cm}.$$ | ||

| − | + | Evaluating this equation analytically, we obtain with the proportionality constant $K_{\rm L} = 2/\sigma^2$: | |

:$$L_{\rm K}(y) = | :$$L_{\rm K}(y) = | ||

K_{\rm L} \cdot y | K_{\rm L} \cdot y | ||

| Line 28: | Line 28: | ||

| − | + | Hints: | |

| − | * | + | * This exercise belongs to the chapter [[Channel_Coding/Soft-in_Soft-Out_Decoder| "Soft–in Soft–out Decoder"]]. |

| − | * | + | * Reference is made in particular to the pages [[Channel_Coding/Soft-in_Soft-Out_Decoder#Reliability_information_-_Log_Likelihood_Ratio|"Reliability Information – Log Likelihood Ratio"]] and [[Channel_Coding/Channel_Models_and_Decision_Structures#AWGN_channel_at_Binary_Input|"AWGN–Channel at Binary Input"]]. |

| Line 36: | Line 36: | ||

| − | === | + | ===Questions=== |

<quiz display=simple> | <quiz display=simple> | ||

| − | { | + | {What are the characteristics of the channels shown in the diagram? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + They describe the binary transmission under Gaussian interference. |

| − | + | + | + The bit error probability without coding is ${\rm Q}(1/\sigma)$. |

| − | + | + | + The channel LLR can be represented as $L_{\rm K}(y) = K_{\rm L} \cdot y$. |

| − | { | + | {Which constant $K_{\rm L}$ characterizes the channel $\rm A$? |

|type="{}"} | |type="{}"} | ||

$K_{\rm L} \ = \ ${ 2 3% } | $K_{\rm L} \ = \ ${ 2 3% } | ||

| − | { | + | {For channel $\rm A$ what information do the received values $y_1 = 1, \ y_2 = 0.5$, $y_3 = \, -1.5$ provide about the transmitted binary symbols $x_1, \ x_2$ and $x_3$, respectively? |

|type="[]"} | |type="[]"} | ||

| − | + $y_1 = 1.0$ | + | + $y_1 = 1.0$ states that probably $x_1 = +1$ was sent. |

| − | + $y_2 = 0.5$ | + | + $y_2 = 0.5$ states that probably $x_2 = +1$ was sent. |

| − | + $y_3 = \, -1.5$ | + | + $y_3 = \, -1.5$ states that probably $x_3 = \, -1$ was sent. |

| − | + | + | + The decision "$y_1 → x_1$" is more certain than "$y_2 → x_2$". |

| − | - | + | - The decision "$y_1 → x_1$" is more certain than "$y_3 → x_3$". |

| − | { | + | {Which $K_{\rm L}$ identifies the channel $\rm B$? |

|type="{}"} | |type="{}"} | ||

$K_{\rm L} \ = \ ${ 8 3% } | $K_{\rm L} \ = \ ${ 8 3% } | ||

| − | { | + | {For channel $\rm B$, what information do the received values $y_1 = 1, \ y_2 = 0.5$, $y_3 = -1.5$ provide about the transmitted binary symbols $x_1, \ x_2$ and $x_3$, respectively? |

|type="[]"} | |type="[]"} | ||

| − | + | + | + The decisions for $x_1, \ x_2, \ x_3$ are the same as for channel $\rm A$. |

| − | + | + | + The estimate "$x_2 = +1$" is four times more certain than for channel $\rm A$. |

| − | - | + | - The estimate "$x_3 = \, -1$" at channel $\rm A$ is more reliable than the estimate "$x_2 = +1$" at channel $\rm B$. |

</quiz> | </quiz> | ||

| − | === | + | ===Solution=== |

{{ML-Kopf}} | {{ML-Kopf}} | ||

| − | '''(1)''' <u> | + | '''(1)''' <u>All proposed solutions</u> are correct: |

| − | * | + | * The transfer equation is always $y = x + n$, with $x ∈ \{+1, \, -1\}$; $n$ denotes a Gaussian random variable with standard deviation $\sigma$ ⇒ variance $\sigma^2$ ⇒ [[Channel_Coding/Channel_Models_and_Decision_Structures#AWGN_channel_at_Binary_Input| "AWGN Channel"]]. |

| − | * | + | * The [[Digital_Signal_Transmission/Error_Probability_for_Baseband_Transmission#Error_probability_with_Gaussian_noise|"AWGN Bit Error Probability"]] is obtained with the standard deviation $\sigma$ as ${\rm Q}(1/\sigma)$, where ${\rm Q}(x)$ denotes the [[Theory_of_Stochastic_Signals/Gaussian_Distributed_Random_Variables#Exceedance_probability|"complementary Gaussian error function"]]. |

| − | * | + | * For each AWGN channel, according to the [[Channel_Coding/Soft-in_Soft-Out_Decoder#Reliability_information_-_Log_Likelihood_Ratio|"theory section"]], the channel–LLR always results in $L_{\rm K}(y) = L(y|x) = K_{\rm L} \cdot y$. |

| − | * | + | *The constant $K_{\rm L}$ is different for the two channels, however. |

| + | '''(2)''' For the AWGN channel, $L_{\rm K}(y) = K_{\rm L} \cdot y$ with constant $K_{\rm L} = 2/\sigma^2$. The standard deviation $\sigma$ can be read from the graph on the data page as the distance of the inflection points within the Gaussian curves from their respective midpoints. For '''channel A''', $\sigma = 1$ results. | ||

| − | + | *The same result is obtained by evaluating the Gaussian function | |

| − | |||

| − | * | ||

:$$\frac{f_{\rm G}( y = \sigma)}{f_{\rm G}( y = 0)} = {\rm e} ^{ - y^2/(2\sigma^2) } \Bigg |_{\hspace{0.05cm} y \hspace{0.05cm} = \hspace{0.05cm} \sigma} = {\rm e} ^{ -0.5} \approx 0.6065\hspace{0.05cm}.$$ | :$$\frac{f_{\rm G}( y = \sigma)}{f_{\rm G}( y = 0)} = {\rm e} ^{ - y^2/(2\sigma^2) } \Bigg |_{\hspace{0.05cm} y \hspace{0.05cm} = \hspace{0.05cm} \sigma} = {\rm e} ^{ -0.5} \approx 0.6065\hspace{0.05cm}.$$ | ||

| − | * | + | *This means: At the abscissa value $y = \sigma$ the zero-mean Gaussian function $f_{\rm G}(y)$ has decayed to $60.65\%$ of its maximum value. Thus, for the constant of '''channel A''': $K_{\rm L} = 2/\sigma^2 \ \underline{= 2}$. |

| − | '''(3)''' | + | '''(3)''' The correct <u>solutions are 1 to 4</u>: |

| − | * | + | *We first give the respective LLRs of '''Channel A''': |

:$$L_{\rm K}(y_1 = +1.0) = +2\hspace{0.05cm},\hspace{0.3cm} | :$$L_{\rm K}(y_1 = +1.0) = +2\hspace{0.05cm},\hspace{0.3cm} | ||

L_{\rm K}(y_2 = +0.5) = +1\hspace{0.05cm},\hspace{0.3cm} | L_{\rm K}(y_2 = +0.5) = +1\hspace{0.05cm},\hspace{0.3cm} | ||

L_{\rm K}(y_3 = -1.5) = -3\hspace{0.05cm}. $$ | L_{\rm K}(y_3 = -1.5) = -3\hspace{0.05cm}. $$ | ||

| − | * | + | *This results in the following consequences: |

| − | # | + | # The decision for the (most probable) codebit $x_i$ is made based on the sign of $L_{\rm K}(y_i)$: $x_1 = +1, \ x_2 = +1, \ x_3 = \, -1$ ⇒ the <u>proposed solutions 1, 2 and 3</u> are correct. |

| − | # | + | # The decision "$x_1 = +1$" is more reliable than the decision "$x_2 = +1$" ⇒ <u>Proposition 4</u> is also correct. |

| − | # | + | # However, the decision "$x_1 = +1$" is less reliable than the decision "$x_3 = \, -1$" because $|L_{\rm K}(y_1)|$ is smaller than $|L_{\rm K}(y_3)|$ ⇒ Proposed solution 5 is incorrect. |

| − | + | This can also be interpreted as follows: The quotient between the red and the blue PDF value at $y_3 = \, -1.5$ is larger than the quotient between the blue and the red PDF value at $y_1 = +1$. | |

| − | '''(4)''' | + | '''(4)''' Following the same considerations as in subtask (2), the standard deviation of '''channel B''' is given by: $\sigma = 1/2 \ \Rightarrow \ K_{\rm L} = 2/\sigma^2 \ \underline{= 8}$. |

| − | '''(5)''' | + | '''(5)''' For '''channel B''', the following applies: $L_{\rm K}(y_1 = +1.0) = +8, \ L_{\rm K}(y_2 = +0.5) = +4$ and $L_{\rm K}(y_3 = \, -1.5) = \, -12$. |

| − | * | + | *It is obvious that <u>the first two proposed solutions</u> are true, but not the third, because |

| − | :$$|L_{\rm K}(y_3 = -1.5, {\rm | + | :$$|L_{\rm K}(y_3 = -1.5, {\rm channel\hspace{0.15cm} A)}| = 3 |

\hspace{0.5cm} <\hspace{0.5cm} | \hspace{0.5cm} <\hspace{0.5cm} | ||

| − | |L_{\rm K}(y_2 = 0.5, {\rm | + | |L_{\rm K}(y_2 = 0.5, {\rm channel\hspace{0.15cm} B)}| = 4\hspace{0.05cm} . $$ |

{{ML-Fuß}} | {{ML-Fuß}} | ||

Revision as of 18:36, 27 October 2022

We consider two channels $\rm A$ and $\rm B$, each with:

- binary bipolar input $x ∈ \{+1, \, -1\}$, and

- continuous-valued output $y ∈ {\rm \mathcal{R}}$ (real number).

The graph shows for both channels

- as blue curve the density functions $f_{y\hspace{0.05cm}|\hspace{0.05cm}x=+1}$,

- as red curve the density functions $f_{y\hspace{0.05cm}|\hspace{0.05cm}x=-1}$.

In the "theory section", the channel LLR (log likelihood ratio) was derived for this AWGN constellation as follows:

- $$L_{\rm K}(y) = L(y\hspace{0.05cm}|\hspace{0.05cm}x) = {\rm ln} \hspace{0.15cm} \frac{{\rm Pr}(y \hspace{0.05cm}|\hspace{0.05cm}x=+1) }{{\rm Pr}(y \hspace{0.05cm}|\hspace{0.05cm}x = -1)} \hspace{0.05cm}.$$

Evaluating this equation analytically, we obtain with the proportionality constant $K_{\rm L} = 2/\sigma^2$:

- $$L_{\rm K}(y) = K_{\rm L} \cdot y \hspace{0.05cm}.$$
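The step from the ratio to the linear form can be sketched as follows. This is only an outline, assuming that the two conditional densities are Gaussian with means $\pm 1$ and a common standard deviation $\sigma$ (the AWGN model used throughout this exercise); the notation $f_{y\hspace{0.05cm}|\hspace{0.05cm}x=\pm 1}(y)$ is used here in place of ${\rm Pr}(y\hspace{0.05cm}|\hspace{0.05cm}x = \pm 1)$:

- $$f_{y\hspace{0.05cm}|\hspace{0.05cm}x=\pm 1}(y) = \frac{1}{\sqrt{2\pi}\cdot \sigma} \cdot {\rm e}^{-(y \mp 1)^2/(2\sigma^2)} \hspace{0.05cm},$$

- $$L_{\rm K}(y) = {\rm ln} \hspace{0.15cm} \frac{f_{y\hspace{0.05cm}|\hspace{0.05cm}x=+1}(y)}{f_{y\hspace{0.05cm}|\hspace{0.05cm}x=-1}(y)} = \frac{(y+1)^2 - (y-1)^2}{2\sigma^2} = \frac{4 \cdot y}{2\sigma^2} = \frac{2}{\sigma^2} \cdot y \hspace{0.05cm}.$$

The common prefactor $1/(\sqrt{2\pi}\cdot \sigma)$ cancels in the ratio, so only the difference of the two exponents remains.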

Hints:

- This exercise belongs to the chapter "Soft–in Soft–out Decoder".

- Reference is made in particular to the pages "Reliability Information – Log Likelihood Ratio" and "AWGN–Channel at Binary Input".

Questions

Solution

(1) All proposed solutions are correct:

- The transfer equation is always $y = x + n$, with $x ∈ \{+1, \, -1\}$; $n$ denotes a Gaussian random variable with standard deviation $\sigma$ ⇒ variance $\sigma^2$ ⇒ "AWGN Channel".

- The "AWGN Bit Error Probability" is obtained with the standard deviation $\sigma$ as ${\rm Q}(1/\sigma)$, where ${\rm Q}(x)$ denotes the "complementary Gaussian error function".

- For each AWGN channel, according to the "theory section", the channel–LLR always results in $L_{\rm K}(y) = L(y|x) = K_{\rm L} \cdot y$.

- The constant $K_{\rm L}$ is different for the two channels, however.
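The uncoded bit error probability ${\rm Q}(1/\sigma)$ can be evaluated numerically. The following is a minimal sketch, assuming a decision threshold at $y = 0$ and using the identity ${\rm Q}(x) = 0.5 \cdot {\rm erfc}(x/\sqrt{2})$; the function names are illustrative and not part of the exercise, and the two sample values of $\sigma$ are those obtained in subtasks (2) and (4).

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x), expressed via the complementary error function."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def awgn_bit_error_probability(sigma: float) -> float:
    """Uncoded bit error probability Q(1/sigma) for x in {+1, -1}, threshold at y = 0."""
    return q_function(1.0 / sigma)


# Sample values: sigma = 1 (channel A) and sigma = 0.5 (channel B).
for sigma in (1.0, 0.5):
    print(f"sigma = {sigma}: Pr(bit error) = {awgn_bit_error_probability(sigma):.4f}")
# sigma = 1.0: Pr(bit error) = 0.1587
# sigma = 0.5: Pr(bit error) = 0.0228
```

The smaller standard deviation reduces the error probability considerably, which is consistent with the larger constant $K_{\rm L}$ of that channel.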

(2) For the AWGN channel, $L_{\rm K}(y) = K_{\rm L} \cdot y$ with constant $K_{\rm L} = 2/\sigma^2$. The standard deviation $\sigma$ can be read from the graph on the data page as the distance of the inflection points within the Gaussian curves from their respective midpoints. For channel A, $\sigma = 1$ results.

- The same result is obtained by evaluating the Gaussian function

- $$\frac{f_{\rm G}( y = \sigma)}{f_{\rm G}( y = 0)} = {\rm e} ^{ - y^2/(2\sigma^2) } \Bigg |_{\hspace{0.05cm} y \hspace{0.05cm} = \hspace{0.05cm} \sigma} = {\rm e} ^{ -0.5} \approx 0.6065\hspace{0.05cm}.$$

- This means: At the abscissa value $y = \sigma$ the zero-mean Gaussian function $f_{\rm G}(y)$ has decayed to $60.65\%$ of its maximum value. Thus, for the constant of channel A: $K_{\rm L} = 2/\sigma^2 \ \underline{= 2}$.
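The reading rule from subtask (2) can be checked numerically. The sketch below assumes a zero-mean Gaussian and uses illustrative helper names that do not appear in the exercise.

```python
import math


def gaussian_relative_height(y: float, sigma: float) -> float:
    """Ratio f_G(y) / f_G(0) of a zero-mean Gaussian with standard deviation sigma."""
    return math.exp(-(y**2) / (2.0 * sigma**2))


def llr_constant(sigma: float) -> float:
    """Proportionality constant K_L = 2 / sigma^2 of the AWGN channel LLR."""
    return 2.0 / sigma**2


sigma_a = 1.0  # value read from the graph for channel A
print(f"{gaussian_relative_height(sigma_a, sigma_a):.4f}")  # 0.6065
print(llr_constant(sigma_a))  # 2.0
```

The relative height at $y = \sigma$ does not depend on $\sigma$ itself, so the same $60.65\%$ rule applies to any Gaussian curve in the graph.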

(3) The correct solutions are 1 to 4:

- We first give the respective LLRs of Channel A:

- $$L_{\rm K}(y_1 = +1.0) = +2\hspace{0.05cm},\hspace{0.3cm} L_{\rm K}(y_2 = +0.5) = +1\hspace{0.05cm},\hspace{0.3cm} L_{\rm K}(y_3 = -1.5) = -3\hspace{0.05cm}. $$

- This results in the following consequences:

- The decision for the (most probable) codebit $x_i$ is made based on the sign of $L_{\rm K}(y_i)$: $x_1 = +1, \ x_2 = +1, \ x_3 = \, -1$ ⇒ the proposed solutions 1, 2 and 3 are correct.

- The decision "$x_1 = +1$" is more reliable than the decision "$x_2 = +1$" ⇒ Proposition 4 is also correct.

- However, the decision "$x_1 = +1$" is less reliable than the decision "$x_3 = \, -1$" because $|L_{\rm K}(y_1)|$ is smaller than $|L_{\rm K}(y_3)|$ ⇒ Proposed solution 5 is incorrect.

This can also be interpreted as follows: The quotient between the red and the blue PDF value at $y_3 = \, -1.5$ is larger than the quotient between the blue and the red PDF value at $y_1 = +1$.
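The two PDF quotients mentioned above can be computed directly. This is a small sketch under the assumption $\sigma = 1$ for channel A, with the blue curve centered at $+1$ and the red curve at $-1$; the function names are chosen only for illustration.

```python
import math

SIGMA_A = 1.0  # standard deviation of channel A, as read from the graph


def gaussian_pdf(y: float, mean: float, sigma: float) -> float:
    """Gaussian density with the given mean and standard deviation."""
    return math.exp(-((y - mean) ** 2) / (2.0 * sigma**2)) / (math.sqrt(2.0 * math.pi) * sigma)


def blue_over_red(y: float, sigma: float) -> float:
    """Quotient f(y | x = +1) / f(y | x = -1), i.e. blue PDF value over red PDF value."""
    return gaussian_pdf(y, +1.0, sigma) / gaussian_pdf(y, -1.0, sigma)


quotient_y1 = blue_over_red(1.0, SIGMA_A)         # blue/red at y1 = +1
quotient_y3 = 1.0 / blue_over_red(-1.5, SIGMA_A)  # red/blue at y3 = -1.5

print(f"blue/red at y1 = +1.0: {quotient_y1:.2f}")  # e^2, about 7.39
print(f"red/blue at y3 = -1.5: {quotient_y3:.2f}")  # e^3, about 20.09
```

Taking the natural logarithm of the two quotients gives $2$ and $3$, the magnitudes of $L_{\rm K}(y_1)$ and $L_{\rm K}(y_3)$.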

(4) Following the same considerations as in subtask (2), the standard deviation of channel B is given by: $\sigma = 1/2 \ \Rightarrow \ K_{\rm L} = 2/\sigma^2 \ \underline{= 8}$.

(5) For channel B, the following applies: $L_{\rm K}(y_1 = +1.0) = +8, \ L_{\rm K}(y_2 = +0.5) = +4$ and $L_{\rm K}(y_3 = \, -1.5) = \, -12$.

- It is obvious that the first two proposed solutions are true, but not the third, because

- $$|L_{\rm K}(y_3 = -1.5, {\rm channel\hspace{0.15cm} A)}| = 3 \hspace{0.5cm} <\hspace{0.5cm} |L_{\rm K}(y_2 = 0.5, {\rm channel\hspace{0.15cm} B)}| = 4\hspace{0.05cm} . $$
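The LLR values of both channels and the resulting decisions can be reproduced with a few lines. The following sketch assumes $\sigma = 1$ for channel A and $\sigma = 1/2$ for channel B, as found in subtasks (2) and (4); the helper names are hypothetical.

```python
def channel_llr(y: float, sigma: float) -> float:
    """Channel LLR L_K(y) = K_L * y with K_L = 2 / sigma^2."""
    return (2.0 / sigma**2) * y


def hard_decision(llr: float) -> int:
    """Most probable symbol: +1 for a non-negative LLR, otherwise -1."""
    return +1 if llr >= 0 else -1


received = [1.0, 0.5, -1.5]             # y1, y2, y3
channel_sigma = {"A": 1.0, "B": 0.5}    # standard deviations read from the graph

for name, sigma in channel_sigma.items():
    llrs = [channel_llr(y, sigma) for y in received]
    decisions = [hard_decision(value) for value in llrs]
    print(f"channel {name}: LLRs = {llrs}, decisions = {decisions}")
# channel A: LLRs = [2.0, 1.0, -3.0], decisions = [1, 1, -1]
# channel B: LLRs = [8.0, 4.0, -12.0], decisions = [1, 1, -1]
```

The decisions agree for both channels because only the sign of $y$ matters, while every LLR magnitude of channel B is four times that of channel A.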